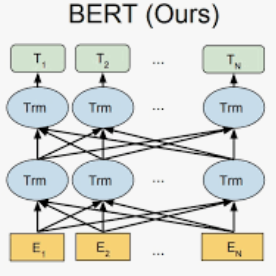

Pre-trained Transformer-based models achieve state-of-the-art results on a variety of Natural Language Processing data sets. However, the size of these models is often a drawback for their deployment in real production applications. In multilingual models, most of the parameters are located in the embeddings layer, so reducing the vocabulary size should substantially reduce the total number of parameters. In this paper, we propose generating smaller models that handle fewer languages, chosen according to the targeted corpora. We evaluate smaller versions of multilingual BERT on the XNLI data set, but we believe that this method may be applied to other multilingual transformers. The results confirm that we can generate smaller models that keep comparable results while reducing the total number of parameters by up to 45%. We compared our models with DistilmBERT (a distilled version of multilingual BERT) and showed that, unlike language reduction, distillation induced a 1.7% to 6% drop in overall accuracy on the XNLI data set. The presented models and code are publicly available.
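To make the link between vocabulary size and model size concrete, the following is a minimal back-of-the-envelope sketch, not the procedure used in the paper. It assumes the standard BERT-base layout (hidden size 768, 12 layers, feed-forward size 3072, 512 positions, 2 segment types, a pooler head) and the 119,547-token vocabulary of the public multilingual BERT cased checkpoint. The names `BertShape`, `total_params`, and `reduction_from_vocab`, as well as the reduced vocabulary size of 20,000, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BertShape:
    """Hypothetical container for the dimensions that drive parameter count."""
    vocab_size: int
    hidden: int = 768          # assumed BERT-base hidden size
    layers: int = 12           # assumed number of encoder layers
    intermediate: int = 3072   # assumed feed-forward size
    max_positions: int = 512   # assumed number of position embeddings
    type_vocab: int = 2        # assumed number of segment types


def embedding_params(shape: BertShape) -> int:
    """Word, position and segment embeddings plus one LayerNorm (weight + bias)."""
    rows = shape.vocab_size + shape.max_positions + shape.type_vocab
    return rows * shape.hidden + 2 * shape.hidden


def encoder_params(shape: BertShape) -> int:
    """Self-attention and feed-forward blocks, each followed by a LayerNorm."""
    h, i = shape.hidden, shape.intermediate
    attention = 4 * (h * h + h) + 2 * h           # Q, K, V, output projections
    feed_forward = (h * i + i) + (i * h + h) + 2 * h
    return shape.layers * (attention + feed_forward)


def pooler_params(shape: BertShape) -> int:
    """Single dense layer applied to the first token representation."""
    return shape.hidden * shape.hidden + shape.hidden


def total_params(shape: BertShape) -> int:
    return embedding_params(shape) + encoder_params(shape) + pooler_params(shape)


def reduction_from_vocab(shape: BertShape, new_vocab_size: int) -> float:
    """Fraction of parameters removed when only the vocabulary shrinks."""
    smaller = replace(shape, vocab_size=new_vocab_size)
    return 1.0 - total_params(smaller) / total_params(shape)


if __name__ == "__main__":
    full = BertShape(vocab_size=119_547)
    share = full.vocab_size * full.hidden / total_params(full)
    print(f"total parameters:       {total_params(full):,}")
    print(f"word-embedding share:   {share:.1%}")
    # 20,000 is an illustrative vocabulary size, not a figure from the paper.
    print(f"reduction at 20k vocab: {reduction_from_vocab(full, 20_000):.1%}")
```

Under these assumptions the word-embedding matrix alone accounts for roughly half of the model, which is why dropping the tokens of unused languages shrinks the model so much while leaving the encoder weights untouched.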
