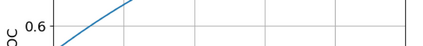

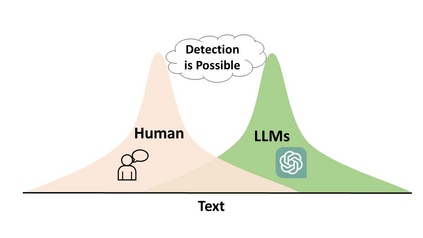

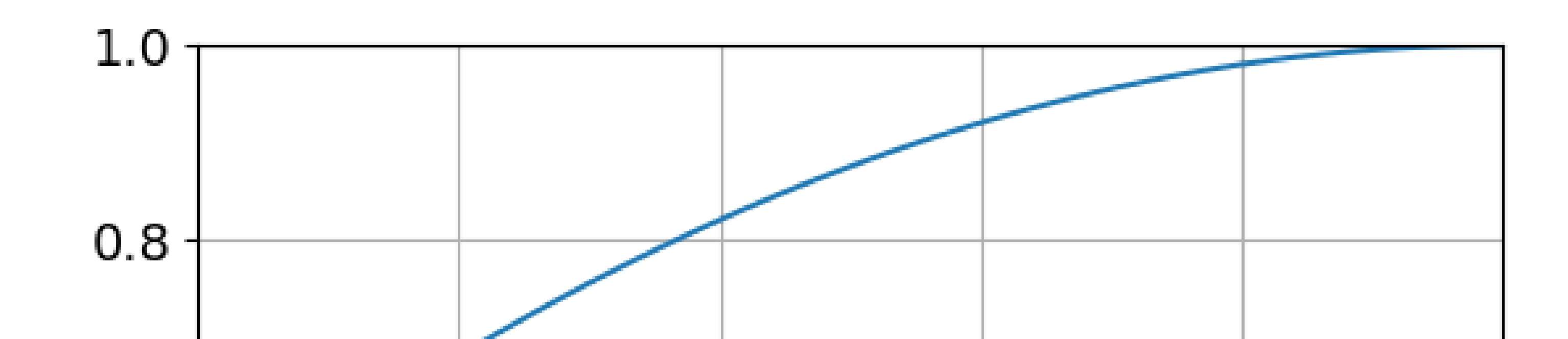

Our work focuses on the challenge of distinguishing text generated by Large Language Models (LLMs) from text written by humans. The ability to tell the two apart is of utmost importance in numerous applications. However, whether such discernment is possible or impossible has been a subject of debate within the community. Therefore, a central question is whether we can detect AI-generated text and, if so, when. In this work, we provide evidence that it should almost always be possible to detect AI-generated text unless the distributions of human and machine-generated text are exactly the same over the entire support. This observation follows from standard results in information theory and relies on the fact that as machine-generated text becomes more human-like, more samples are needed to detect it. We derive a precise sample complexity bound for AI-generated text detection, which specifies how many samples are needed for detection. This raises the additional challenge of designing more sophisticated detectors that take n samples as input rather than just one, which is a direction for future research on this topic. Our empirical evaluations support our claim that better detectors exist, demonstrating that AI-generated text detection should be achievable in the majority of scenarios. Our results emphasize the importance of continued research in this area.
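The core argument, that any gap between the machine and human distributions can be exposed given enough samples, can be illustrated with a toy model. The sketch below is not the paper's bound or detector. It assumes both distributions are known and defined over a small finite support, and it uses the standard Bhattacharyya-coefficient inequality TV(P^n, Q^n) >= 1 - BC(P, Q)^n. Because BC(P, Q) < 1 whenever P != Q, this bound approaches 1 as n grows, and n >= log(eps) / log(BC) samples suffice for TV >= 1 - eps; as P approaches Q, BC approaches 1 and the required n grows. A summed log-likelihood-ratio test stands in as a hypothetical n-sample detector. All function names and toy distributions are illustrative.

```python
import math
import random


def tv_distance(p, q):
    """Total variation distance between two distributions on the same finite support."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


def bhattacharyya(p, q):
    """Bhattacharyya coefficient BC(p, q) = sum_i sqrt(p_i * q_i); it equals 1 iff p == q."""
    return sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))


def tv_lower_bound_n(p, q, n):
    """Standard lower bound TV(p^n, q^n) >= 1 - BC(p, q)^n for n i.i.d. samples."""
    return 1.0 - bhattacharyya(p, q) ** n


def samples_needed(p, q, eps):
    """Smallest n for which the bound above guarantees TV(p^n, q^n) >= 1 - eps."""
    bc = bhattacharyya(p, q)
    if bc >= 1.0 - 1e-12:
        # Identical distributions (up to float error): no sample size helps.
        return math.inf
    return math.ceil(math.log(eps) / math.log(bc))


def llr_detector(samples, p_machine, q_human):
    """Hypothetical n-sample detector: flag 'machine' if the summed log-likelihood ratio is positive.

    Assumes both distributions are known and give nonzero probability to every symbol.
    """
    llr = sum(math.log(p_machine[x] / q_human[x]) for x in samples)
    return llr > 0


def detection_accuracy(p_machine, q_human, n, trials=1000, seed=0):
    """Monte Carlo accuracy of llr_detector when each trial supplies n i.i.d. samples."""
    rng = random.Random(seed)
    support = range(len(p_machine))
    correct = 0
    for _ in range(trials):
        # Each trial is equally likely to come from the machine or the human source.
        is_machine = rng.random() < 0.5
        source = p_machine if is_machine else q_human
        samples = rng.choices(support, weights=source, k=n)
        correct += (llr_detector(samples, p_machine, q_human) == is_machine)
    return correct / trials


if __name__ == "__main__":
    human = [0.25, 0.25, 0.25, 0.25]    # toy "human" distribution over 4 symbols
    machine = [0.30, 0.25, 0.25, 0.20]  # toy "machine" distribution, close to human
    closer = [0.27, 0.25, 0.25, 0.23]   # an even more human-like "machine"
    print("TV(machine, human):", tv_distance(machine, human))
    print("n for TV >= 0.95:", samples_needed(machine, human, 0.05))
    print("n for the closer model:", samples_needed(closer, human, 0.05))
    for n in (1, 100, 1000):
        print(n, "samples -> accuracy", detection_accuracy(machine, human, n, trials=500))
```

With a single sample the toy detector is barely better than chance, while with many samples its accuracy approaches 1, and the more human-like `closer` model needs many more samples. In practice neither distribution is known, so a real multi-sample detector would have to estimate these quantities; the sketch only shows why aggregating samples helps.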