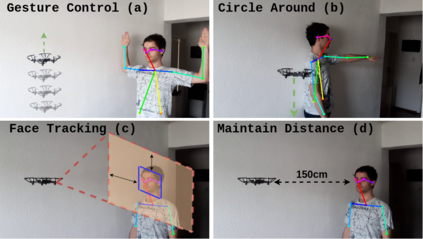

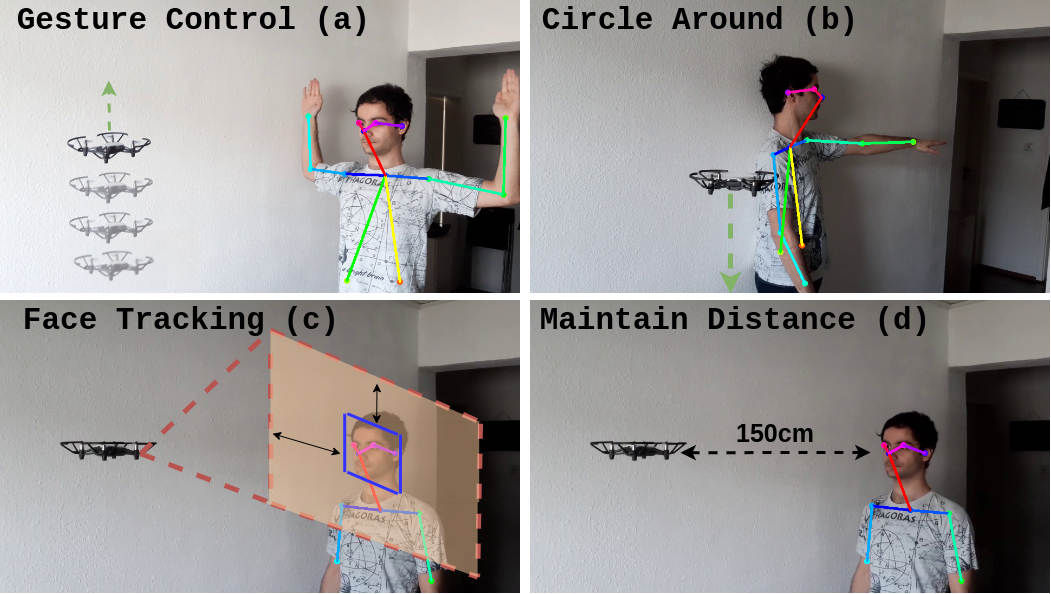

Drones have become a common tool used in many tasks such as aerial photography, surveillance, and delivery. However, operating a drone requires more and more interaction with the user. A natural and safe method for Human-Drone Interaction (HDI) is using gestures. In this paper, we introduce an HDI framework built upon skeleton-based pose estimation. Our framework provides the functionality to control the movement of the drone with simple arm gestures and to follow the user while keeping a safe distance. We also propose a monocular distance estimation method, which is entirely based on image features and does not require any additional depth sensors. To perform comprehensive experiments and quantitative analysis, we create a customized testing dataset. The experiments indicate that our HDI framework can achieve an average accuracy of 93.5% in the recognition of 11 common gestures. The code is publicly available to foster future research: https://github.com/Zrrr1997/Pose2Drone
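As a rough illustration of how distance can be estimated from image features alone, the sketch below applies the standard pinhole-camera relation to the pixel length of a body segment taken from skeleton keypoints. It is not the method proposed in this paper. The function names, the choice of the shoulder-to-hip segment, the assumed real torso length, the focal length, and the target distance and tolerance are all hypothetical values chosen for illustration.

```python
import math


def pixel_length(p1, p2):
    """Euclidean distance in pixels between two (x, y) keypoints."""
    return math.hypot(p2[0] - p1[0], p2[1] - p1[1])


def estimate_distance_m(segment_px, segment_real_m, focal_length_px):
    """Pinhole-camera estimate: distance = focal_length * real_size / pixel_size.

    Assumes the body segment is roughly parallel to the image plane and
    that the focal length (in pixels) is known from camera calibration.
    """
    if segment_px <= 0:
        raise ValueError("segment_px must be positive")
    return focal_length_px * segment_real_m / segment_px


def follow_command(distance_m, target_m=3.0, tolerance_m=0.5):
    """Hypothetical follow rule: keep the user within a band around target_m."""
    if distance_m > target_m + tolerance_m:
        return "forward"
    if distance_m < target_m - tolerance_m:
        return "backward"
    return "hover"


# Example keypoints (pixels) for a shoulder and a hip; values are made up.
shoulder, hip = (320.0, 200.0), (320.0, 400.0)
torso_px = pixel_length(shoulder, hip)

# Assumed: 0.5 m real torso length, 1000 px focal length.
distance = estimate_distance_m(torso_px, 0.5, 1000.0)
command = follow_command(distance)
```

With these made-up inputs the torso spans 200 px, so the estimate is 1000 × 0.5 / 200 = 2.5 m, which falls inside the assumed 2.5 to 3.5 m band and yields a hover command. In practice the estimate degrades when the user leans or turns, because the segment is then no longer parallel to the image plane.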
