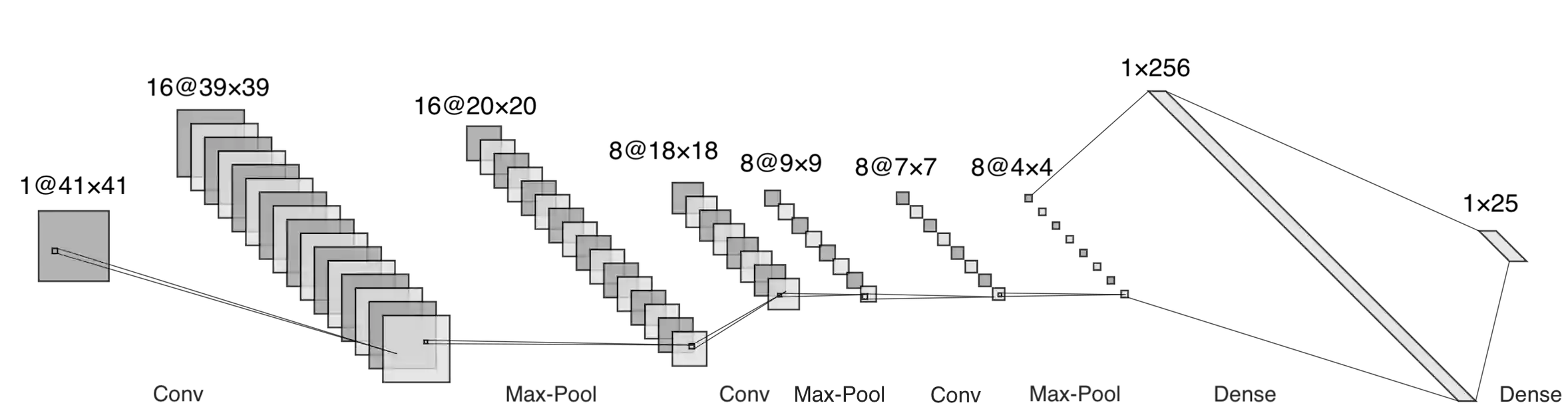

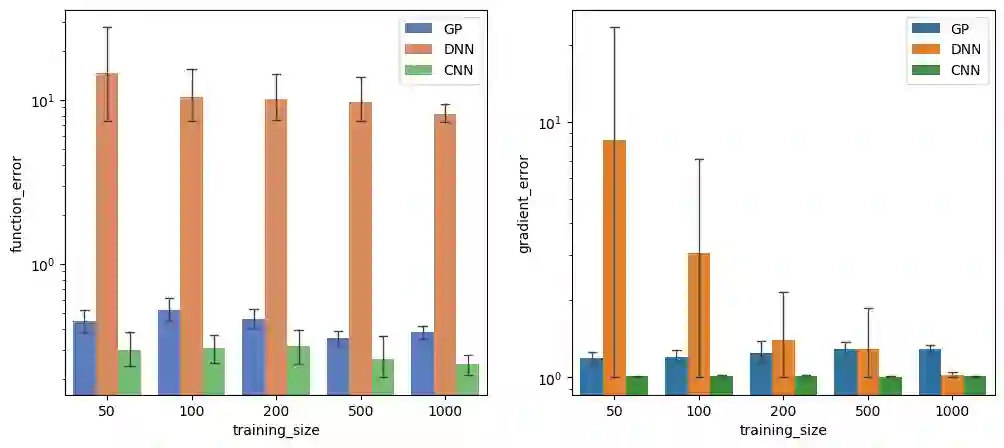

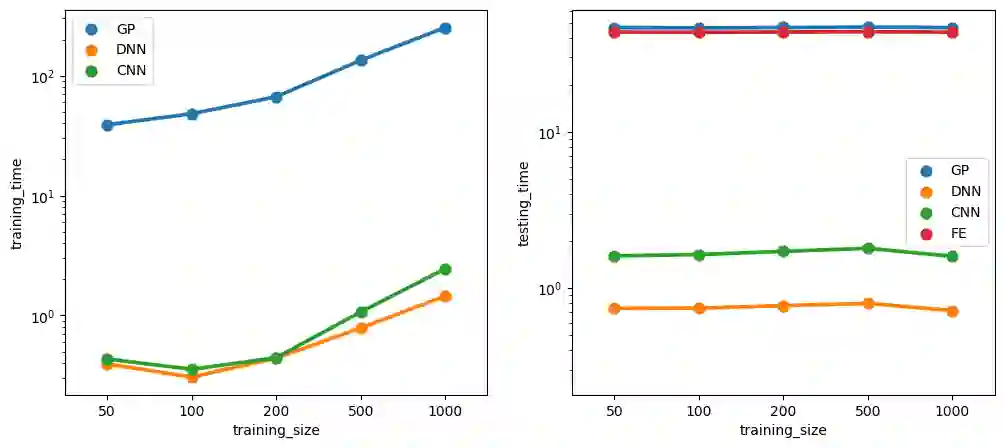

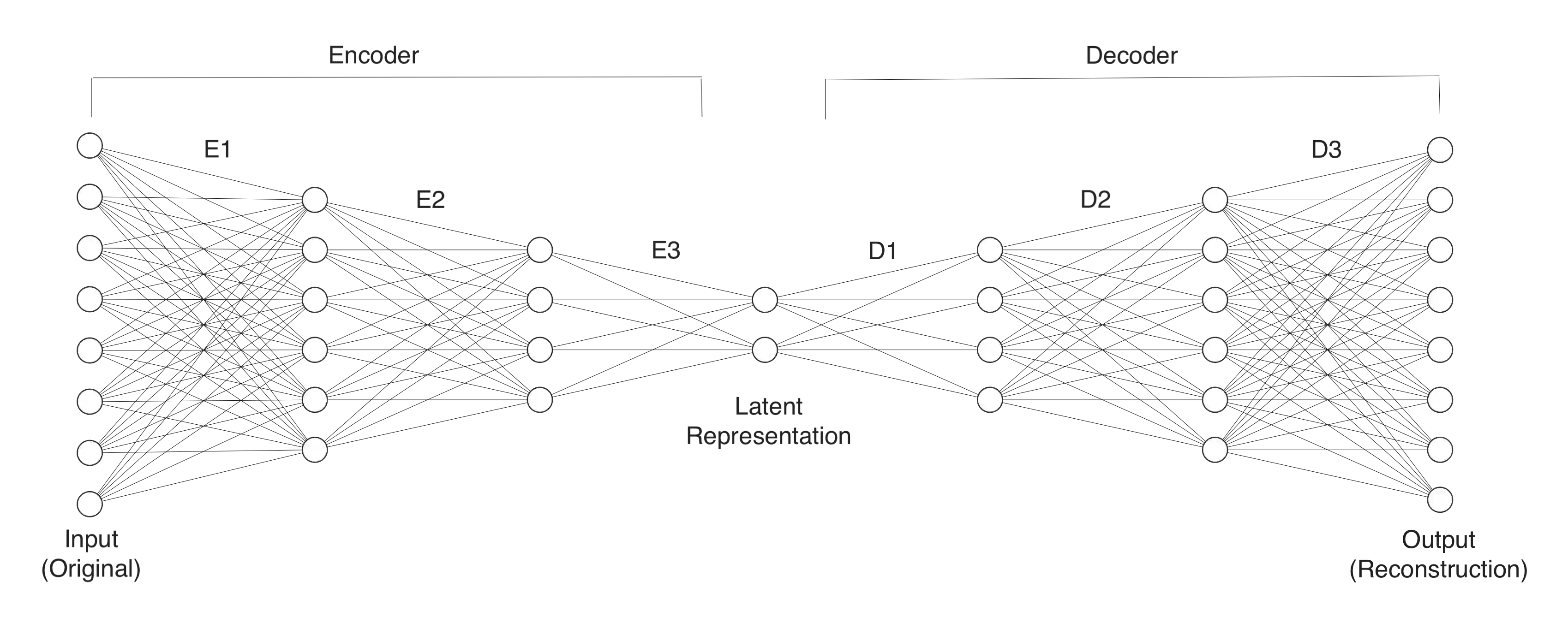

Due to the importance of uncertainty quantification (UQ), the Bayesian approach to inverse problems has recently gained popularity in applied mathematics, physics, and engineering. However, traditional Bayesian inference methods based on Markov chain Monte Carlo (MCMC) tend to be computationally intensive and inefficient for high-dimensional problems. To address this issue, several methods based on surrogate models have been proposed to speed up the inference process. In particular, the calibration-emulation-sampling (CES) scheme has proven successful in high-dimensional UQ problems. In this work, we propose a novel CES approach for Bayesian inference that uses deep neural network (DNN) models in the emulation phase. The resulting algorithm is not only computationally more efficient but also less sensitive to the training set. Further, by using an autoencoder (AE) for dimension reduction, we speed up our Bayesian inference method by up to three orders of magnitude. Overall, our method, henceforth called the *Dimension-Reduced Emulative Autoencoder Monte Carlo (DREAM)* algorithm, is able to scale Bayesian UQ up to thousands of dimensions in physics-constrained inverse problems. Using two low-dimensional (linear and nonlinear) inverse problems, we illustrate the validity of this approach. Next, we apply our method to two high-dimensional numerical examples (elliptic and advection-diffusion) to demonstrate its computational advantage over existing algorithms.
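
The abstract describes the pipeline only at a high level, so the following is a minimal sketch of a generic calibration-emulation-sampling loop with a dimension-reduction step, not the authors' DREAM implementation. It runs on a hypothetical linear toy problem, and every component is an assumed stand-in: ensemble Kalman inversion for calibration, PCA (the optimal linear autoencoder) in place of the trained AE, a random-feature network with ridge-fitted output weights in place of the trained DNN, and preconditioned Crank-Nicolson (pCN) MCMC for sampling. The function names `eki`, `fit_linear_encoder`, `fit_emulator`, and `pcn`, as well as all parameter values, are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem (not from the paper): linear forward map G(u) = A u,
# prior u ~ N(0, C) with decaying variances (mimicking a smooth random field),
# and Gaussian observation noise with std sigma.
d, m, sigma, r = 50, 20, 0.1, 10
prior_var = np.arange(1, d + 1) ** -3.0           # diagonal of C
A = rng.normal(size=(m, d)) / np.sqrt(d)
u_true = np.sqrt(prior_var) * rng.normal(size=d)
y = A @ u_true + sigma * rng.normal(size=m)

def forward(U):
    """Stand-in for an expensive PDE solve; U holds one parameter per row."""
    return U @ A.T

# 1) Calibration (assumed: ensemble Kalman inversion). Every forward run is
#    kept as training data for the emulator.
def eki(n_ens=100, n_iter=10):
    U = np.sqrt(prior_var) * rng.normal(size=(n_ens, d))
    X, Y = [], []
    for _ in range(n_iter):
        G = forward(U)
        X.append(U.copy())
        Y.append(G.copy())
        dU, dG = U - U.mean(0), G - G.mean(0)
        C_ug = dU.T @ dG / (n_ens - 1)            # d x m cross-covariance
        C_gg = dG.T @ dG / (n_ens - 1)            # m x m output covariance
        K = np.linalg.solve(C_gg + sigma**2 * np.eye(m), C_ug.T).T  # d x m gain
        y_pert = y + sigma * rng.normal(size=(n_ens, m))            # perturbed data
        U = U + (y_pert - G) @ K.T
    return np.vstack(X), np.vstack(Y)

# 2) Dimension reduction (assumed: PCA as a linear-autoencoder stand-in).
def fit_linear_encoder(X, r):
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt[:r].T                               # d x r, orthonormal columns

# 3) Emulation (assumed: random-feature network as a cheap DNN stand-in).
def fit_emulator(Z, Y, n_feat=100, reg=1e-4):
    W = rng.normal(size=(Z.shape[1], n_feat))
    b = rng.uniform(-1.0, 1.0, size=n_feat)
    def features(Z):
        # tanh hidden layer with fixed random weights, plus a linear skip term
        return np.hstack([np.tanh(Z @ W + b), Z, np.ones((len(Z), 1))])
    H = features(Z)
    beta = np.linalg.solve(H.T @ H + reg * np.eye(H.shape[1]), H.T @ Y)
    return lambda Z: features(np.atleast_2d(Z)) @ beta

# 4) Sampling: pCN MCMC in the latent space, calling only the emulator.
def pcn(emulate, C_z, n_samples=5000, step=0.1):
    L = np.linalg.cholesky(C_z)                   # latent prior is N(0, C_z)
    def misfit(z):
        return 0.5 * np.sum((y - emulate(z)[0]) ** 2) / sigma**2
    z = np.zeros(len(C_z))
    phi = misfit(z)
    samples, n_acc = [], 0
    for _ in range(n_samples):
        z_new = np.sqrt(1 - step**2) * z + step * (L @ rng.normal(size=len(z)))
        phi_new = misfit(z_new)
        if np.log(rng.uniform()) < phi - phi_new:  # pCN acceptance (prior terms cancel)
            z, phi = z_new, phi_new
            n_acc += 1
        samples.append(z.copy())
    return np.array(samples), n_acc / n_samples

X, Y = eki()
V = fit_linear_encoder(X, r)
emulate = fit_emulator(X @ V, Y)
C_z = V.T @ (prior_var[:, None] * V)              # projected prior covariance V^T C V
Z_post, acc = pcn(emulate, C_z)
u_mean = Z_post[1000:].mean(0) @ V.T              # decode posterior mean (after burn-in)
print(f"acceptance rate: {acc:.2f}")
print("data misfit:", np.linalg.norm(y - forward(u_mean)), "vs prior mean:", np.linalg.norm(y))
```

The design point this sketch tries to convey is that, once calibration is done, the sampler never calls `forward`: each MCMC step costs one cheap emulator evaluation in an r-dimensional latent space, which is where the speed-up of CES-type methods is expected to come from.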

翻译:由于不确定性量化的重要性(UQ),巴耶斯人对反问题的方法最近在应用数学、物理和工程应用中越来越受应用的数学、物理和工程学的流行程度。然而,基于Markov 链子蒙特卡洛(MCMC)的传统巴耶斯人的推论方法往往在计算上密集且效率低下,以应对如此高的层面问题。为了解决这一问题,提出了基于代用模型的几种方法,以加快推论过程的速度。更具体地说,校准模拟(CES)方法已证明在应用大维度UQ的问题中是成功的。在这项工作中,我们建议基于深神经网络(DNNN)模型的贝耶斯人的推论方法。由此产生的算法不仅在计算上效率更高,而且对培训设置不那么敏感。此外,通过使用一个自动编码(AE)来加速推导力推导力推导力推导力推导力推导力推导力推导力推至三大级。总体而言,我们的方法(Empenci-deci-deciation-decial-decial-degraphical-deal-nical Qal-deal-degraphal-deal-deal-deal-deal-deal-deal-degraducolviducolmaxx) 和在使用我们现有的方法中,我们现有的方法中,在使用低方法推算算算算算法的下两个级的推算法的推算法,在使用下,在运用了两个层次推力推力推力推力推力推力推力推力推力推力推论,在使用了。