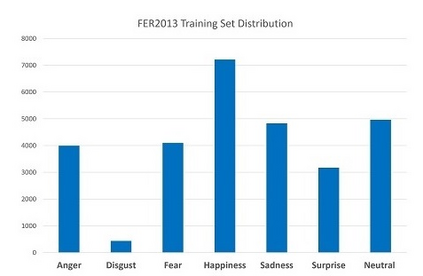

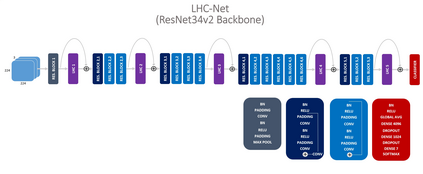

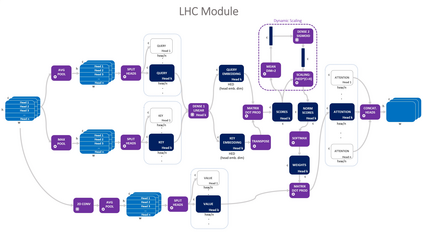

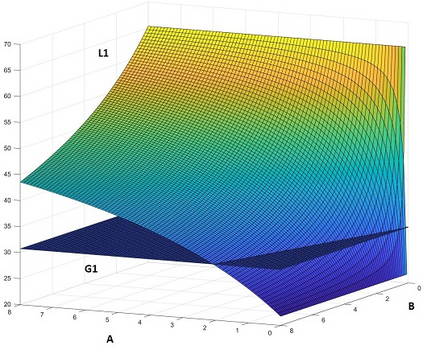

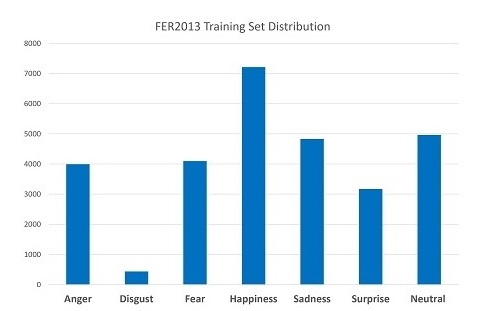

Since the Transformer architecture was introduced in 2017, there have been many attempts to bring the self-attention paradigm into the field of computer vision. In this paper we propose a novel self-attention module that can be easily integrated into virtually any convolutional neural network and that is specifically designed for computer vision: LHC, Local (multi) Head Channel (self-attention). LHC is based on two main ideas. First, we think that in computer vision the best way to leverage the self-attention paradigm is to apply it channel-wise rather than as the more widely explored spatial attention, and that convolution will not be replaced by attention modules the way recurrent networks were in NLP. Second, a local approach has the potential to overcome the limitations of convolution better than global attention. With LHC-Net we achieve a new state of the art on the well-known FER2013 dataset, with significantly lower complexity and a smaller impact on the "host" architecture in terms of computational cost than the previous SOTA.
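To make the distinction between channel-wise and spatial attention concrete, the following is a minimal NumPy sketch of generic channel-wise self-attention over a single feature map. It is not the LHC module itself: the abstract does not specify its internals, so the function name `channel_self_attention`, the projection matrices `w_q`, `w_k`, `w_v`, and all shapes are assumptions chosen for illustration, and the local and multi-head aspects are not modeled.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def channel_self_attention(feature_map, w_q, w_k, w_v):
    """Generic channel-wise self-attention (illustrative, not the paper's LHC).

    feature_map: array of shape (C, H, W)
    w_q, w_k, w_v: hypothetical projection matrices of shape (H*W, d)
    Returns the (C, C) attention matrix and the (C, d) attended output.
    """
    c, h, w = feature_map.shape
    # Each channel becomes one token: its flattened spatial map.
    tokens = feature_map.reshape(c, h * w)
    q = tokens @ w_q
    k = tokens @ w_k
    v = tokens @ w_v
    # Scaled dot-product scores between channels, shape (C, C).
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)
    return attn, attn @ v


# Toy usage with random data (assumed sizes, no trained weights).
rng = np.random.default_rng(0)
C, H, W = 8, 4, 4
d = H * W  # keep the output the same size so it can be reshaped to (C, H, W)
x = rng.standard_normal((C, H, W))
w_q, w_k, w_v = (rng.standard_normal((H * W, d)) * 0.1 for _ in range(3))

attn, out = channel_self_attention(x, w_q, w_k, w_v)
out_map = out.reshape(C, H, W)
```

In this sketch the attention matrix has shape `(C, C)`, one weight per pair of channels, whereas spatial self-attention over the same feature map would treat the `H*W` positions as tokens and produce an `(H*W, H*W)` matrix.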
