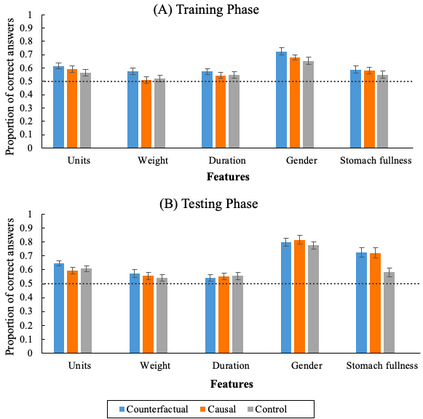

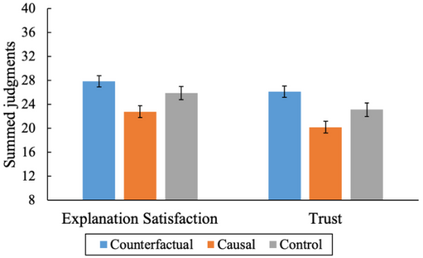

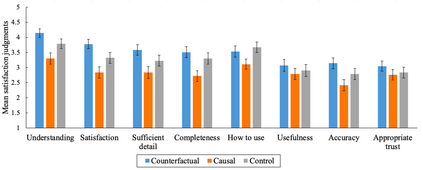

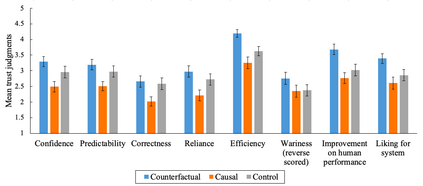

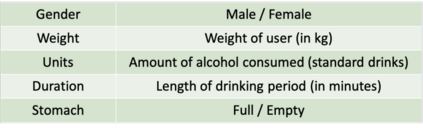

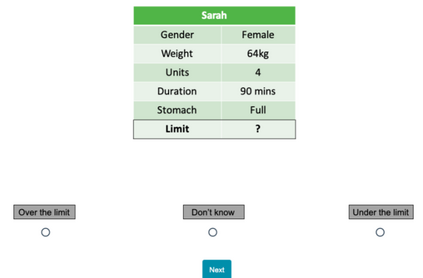

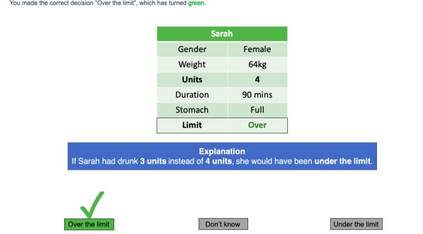

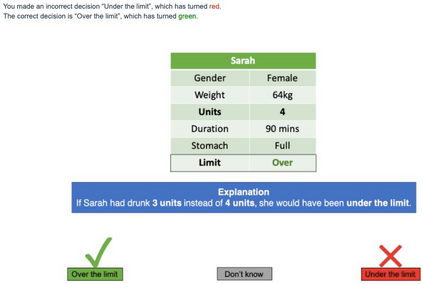

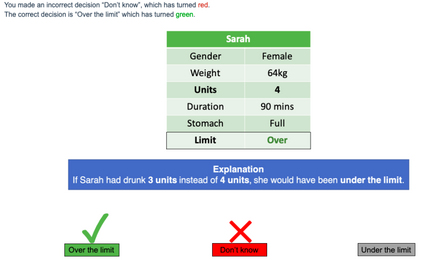

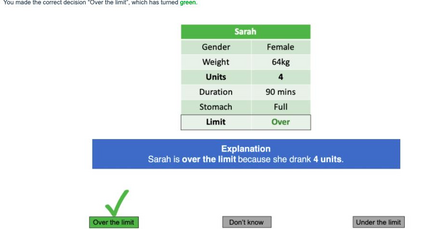

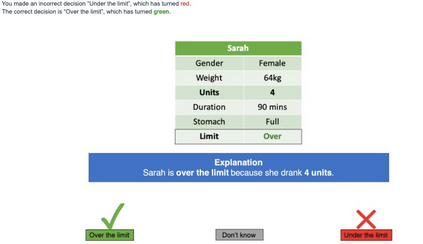

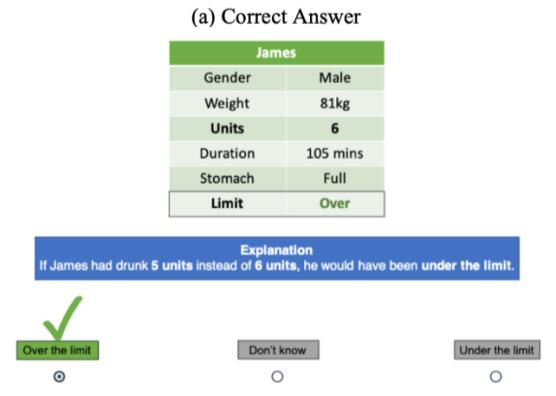

Counterfactual explanations are increasingly used to address interpretability, recourse, and bias in AI decisions. However, we do not know how well counterfactual explanations help users to understand a system's decisions, since no large-scale user studies have compared their efficacy to that of other sorts of explanations, such as causal explanations (which have a longer track record of use in rule-based and decision-tree models). It is also unknown whether counterfactual explanations are equally effective for categorical as for continuous features, although current methods assume that they are. Hence, in a controlled user study with 127 volunteer participants, we tested the effects of counterfactual and causal explanations on the objective accuracy of users' predictions of the decisions made by a simple AI system, and on participants' subjective judgments of satisfaction with and trust in the explanations. We discovered a dissociation between objective and subjective measures: counterfactual explanations elicit higher accuracy of predictions than no-explanation control descriptions but no higher accuracy than causal explanations, yet counterfactual explanations elicit greater satisfaction and trust than causal explanations. We also found that users understand explanations referring to categorical features more readily than those referring to continuous features. We discuss the implications of these findings for current and future counterfactual methods in XAI.

