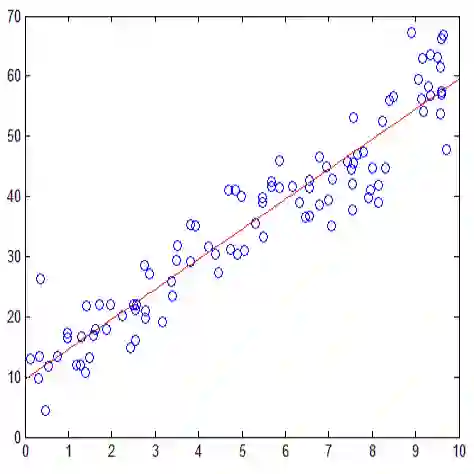

This note provides an introduction to linear models and the theory behind them. Our goal is to give a rigorous introduction to readers with prior exposure to ordinary least squares. In machine learning, the output is usually a nonlinear function of the input. Deep learning goes further, seeking a nonlinear dependence through many layers, which requires a large amount of computation. However, most of these algorithms build upon simple linear models. We therefore describe linear models from different views and establish the properties and theory behind them. The linear model is the main technique in regression problems, and its primary tool is the least squares approximation, which minimizes a sum of squared errors. This is a natural choice when we are interested in finding the regression function that minimizes the corresponding expected squared error. We first describe ordinary least squares from three different points of view, and then disturb the model with random noise and with Gaussian noise. Under Gaussian noise the model gives rise to a likelihood, which lets us introduce the maximum likelihood estimator; the Gaussian disturbance also lets us develop some distribution theory for it. The distribution theory of least squares helps us answer various questions and introduces related applications. We then prove that least squares is the best unbiased linear model in the sense of mean squared error and, most importantly, that it actually approaches the theoretical limit. We conclude with linear models under the Bayesian approach and beyond.
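As a minimal illustration of the least squares idea mentioned above (choosing parameters that minimize a sum of squared errors), the sketch below fits a line with a single input variable using the standard closed-form solution. The function names `fit_simple_ols` and `sum_squared_errors` and the sample data are hypothetical and chosen only for illustration; they are not taken from the note itself, which treats the general multivariate case.

```python
def fit_simple_ols(xs, ys):
    """Fit y ~ intercept + slope * x by minimizing the sum of squared errors."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("xs and ys must have the same length, at least 2")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Centered sums that appear in the closed-form least squares solution.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("xs must contain at least two distinct values")
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


def sum_squared_errors(xs, ys, intercept, slope):
    """Objective that least squares minimizes: the sum of squared residuals."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Made-up sample data (an assumption for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]

intercept, slope = fit_simple_ols(xs, ys)
sse = sum_squared_errors(xs, ys, intercept, slope)
print(f"intercept={intercept:.3f}, slope={slope:.3f}, SSE={sse:.4f}")
```

Any other choice of intercept and slope gives a sum of squared errors at least as large as the fitted one, which is the sense in which the fitted line is optimal.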
