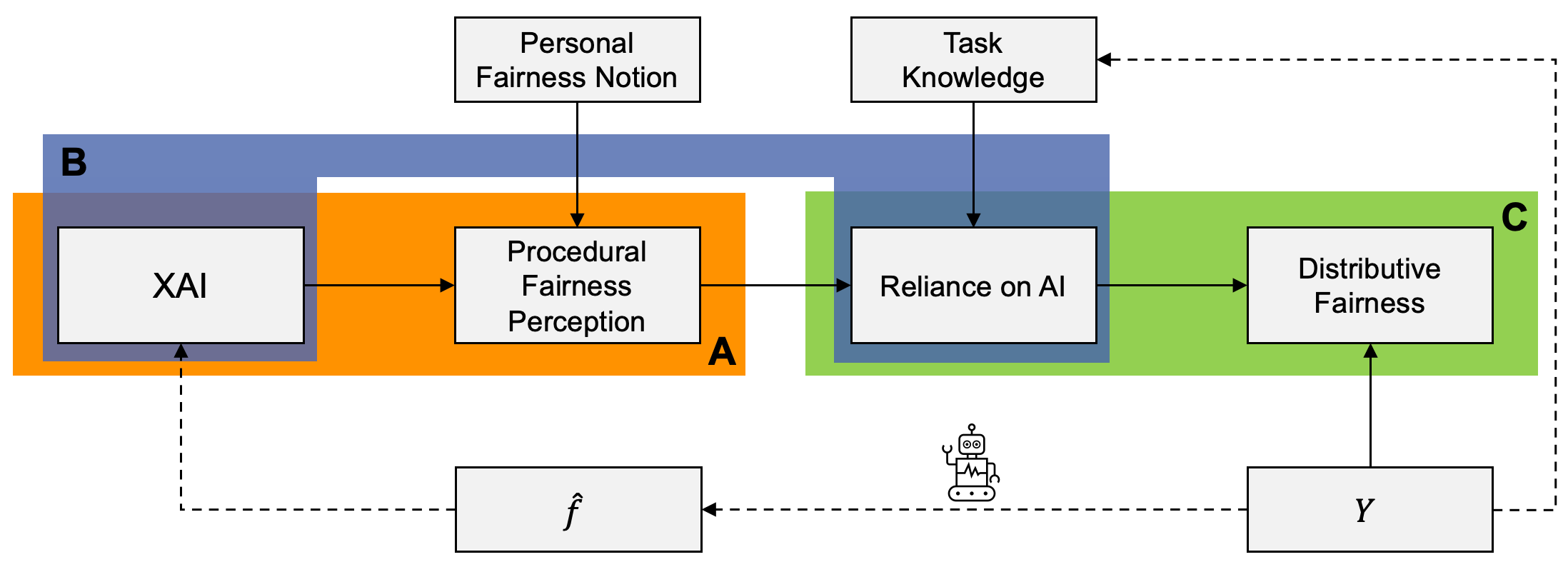

It is known that recommendations of AI-based systems can be incorrect or unfair. Hence, it is often proposed that a human be the final decision-maker. Prior work has argued that explanations are an essential pathway to help human decision-makers enhance decision quality and mitigate bias, i.e., facilitate human-AI complementarity. For these benefits to materialize, explanations should enable humans to appropriately rely on AI recommendations and override the algorithmic recommendation when necessary to increase distributive fairness of decisions. The literature, however, does not provide conclusive empirical evidence as to whether explanations enable such complementarity in practice. In this work, we (a) provide a conceptual framework to articulate the relationships between explanations, fairness perceptions, reliance, and distributive fairness, (b) apply it to understand (seemingly) contradictory research findings at the intersection of explanations and fairness, and (c) derive cohesive implications for the formulation of research questions and the design of experiments.
