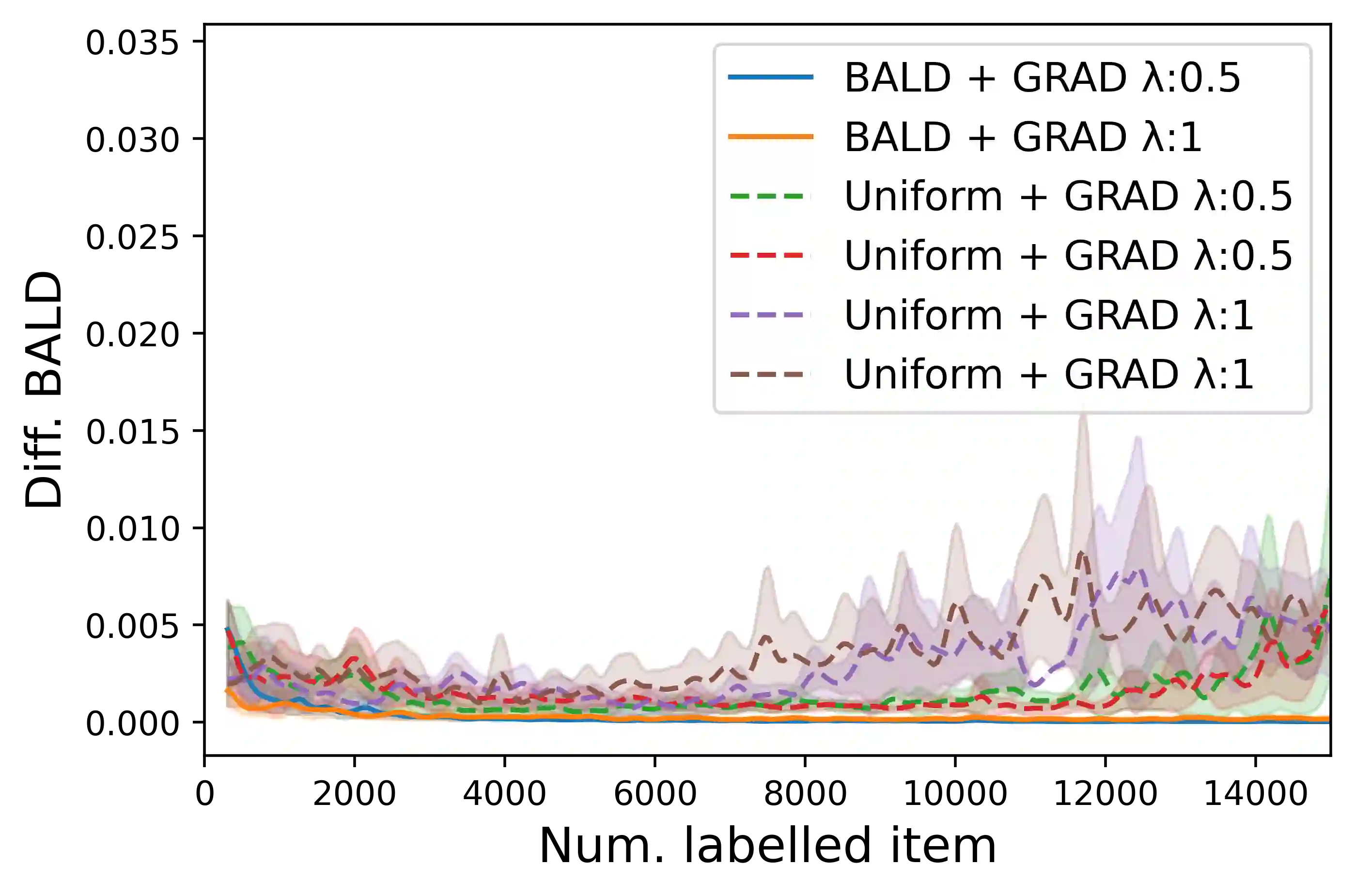

Dataset bias is one of the prevailing causes of unfairness in machine learning. Addressing fairness at the data collection and dataset preparation stages is therefore an essential part of training fairer algorithms. In particular, active learning (AL) algorithms show promise for this task because they prioritize the most informative training samples. However, the interaction between existing AL algorithms and algorithmic fairness remains under-explored. In this paper, we study whether models trained with uncertainty-based AL heuristics such as BALD are fairer in their decisions with respect to a protected class than those trained with independent and identically distributed (i.i.d.) sampling. We find a significant improvement in predictive parity when using BALD, along with improved accuracy compared to i.i.d. sampling. We also explore the interaction between BALD and algorithmic fairness methods such as gradient reversal (GRAD). We find that, although the two address different fairness issues, combining them further improves the results on most of the benchmarks and metrics we explored.
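To make the two quantities named in the abstract concrete, here is a minimal sketch and not the paper's implementation. It assumes the commonly used Monte Carlo estimator of the BALD score (entropy of the mean prediction minus the mean per-pass entropy, e.g. from MC dropout) and measures predictive parity as the absolute difference in positive predictive value between two groups of a binary protected attribute. The function names `bald_scores` and `predictive_parity_gap`, the array shapes, and the toy inputs are all hypothetical choices made for illustration.

```python
import numpy as np


def bald_scores(probs, eps=1e-12):
    """Estimate BALD scores from stochastic forward passes.

    probs: array of shape (T, N, C) holding class probabilities for
           N unlabeled samples from T stochastic passes (assumed input).
    Returns an array of shape (N,); higher means more model disagreement.
    """
    mean_probs = probs.mean(axis=0)  # (N, C)
    # Entropy of the averaged prediction (total uncertainty).
    entropy_of_mean = -(mean_probs * np.log(mean_probs + eps)).sum(axis=-1)
    # Average entropy of each individual pass (expected data uncertainty).
    mean_of_entropy = -(probs * np.log(probs + eps)).sum(axis=-1).mean(axis=0)
    return entropy_of_mean - mean_of_entropy


def predictive_parity_gap(y_true, y_pred, group):
    """Absolute difference in positive predictive value between groups 0 and 1.

    Assumes binary labels, binary predictions, and a binary protected attribute.
    Returns NaN if one group receives no positive predictions.
    """
    ppv = []
    for g in (0, 1):
        selected = (group == g) & (y_pred == 1)
        ppv.append(y_true[selected].mean() if selected.any() else np.nan)
    return abs(ppv[0] - ppv[1])


# Toy inputs: two passes, two samples, two classes.
# Sample 0: both passes agree on a 50/50 prediction (uncertain but no disagreement).
# Sample 1: the passes confidently disagree.
toy_probs = np.array([
    [[0.5, 0.5], [1.0, 0.0]],
    [[0.5, 0.5], [0.0, 1.0]],
])
toy_scores = bald_scores(toy_probs)
# Index of the sample an uncertainty-based acquisition step would label next.
next_to_label = int(np.argmax(toy_scores))
```

In this toy case the second sample is selected, because BALD rewards disagreement between passes rather than plain predictive entropy. A pool-based loop would repeat the scoring step after each retraining round, while i.i.d. sampling would instead draw `next_to_label` uniformly at random.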
