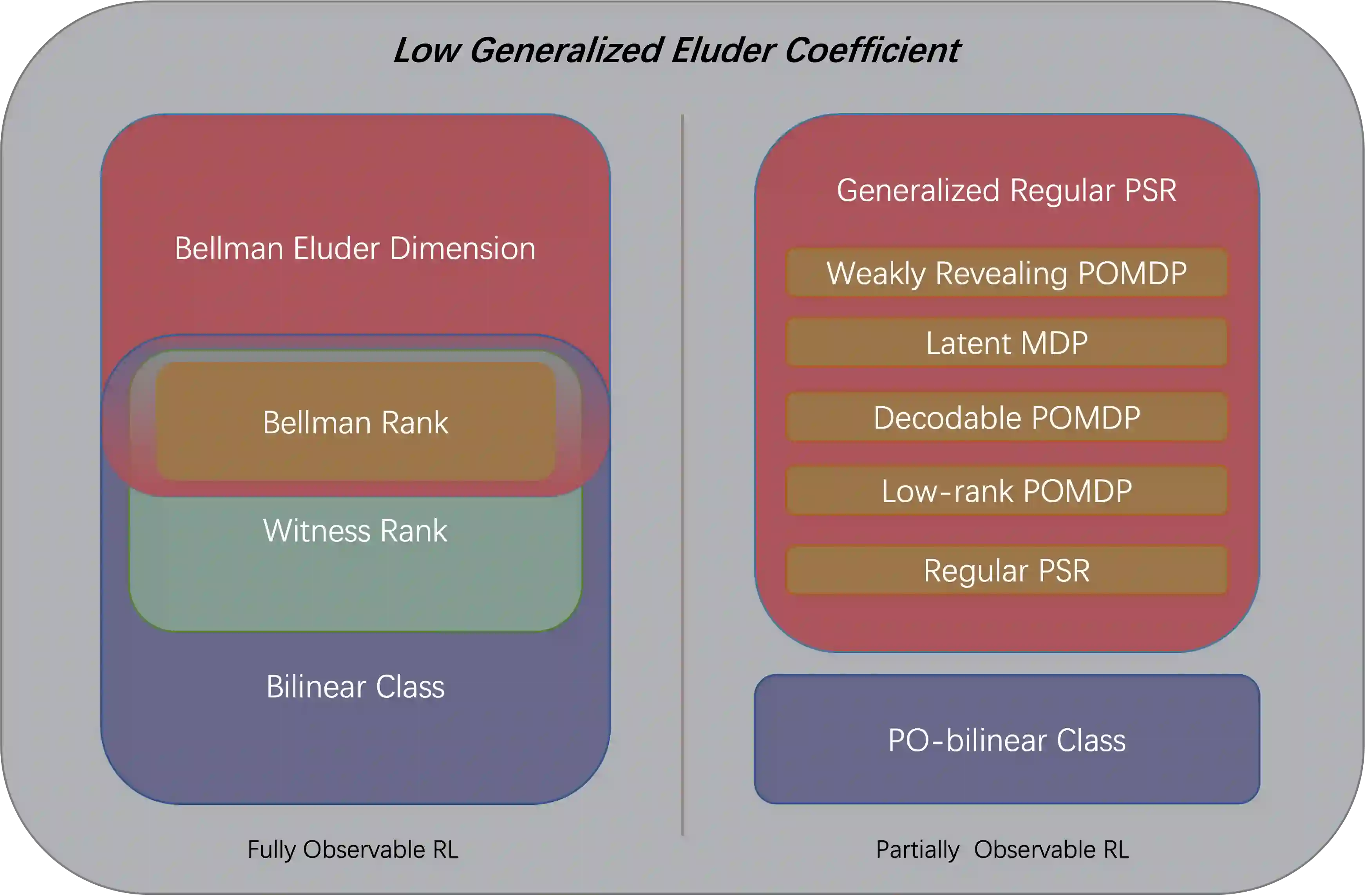

We study sample efficient reinforcement learning (RL) under the general framework of interactive decision making, which includes Markov decision process (MDP), partially observable Markov decision process (POMDP), and predictive state representation (PSR) as special cases. Toward finding the minimum assumption that empowers sample efficient learning, we propose a novel complexity measure, generalized eluder coefficient (GEC), which characterizes the fundamental tradeoff between exploration and exploitation in online interactive decision making. In specific, GEC captures the hardness of exploration by comparing the error of predicting the performance of the updated policy with the in-sample training error evaluated on the historical data. We show that RL problems with low GEC form a remarkably rich class, which subsumes low Bellman eluder dimension problems, bilinear class, low witness rank problems, PO-bilinear class, and generalized regular PSR, where generalized regular PSR, a new tractable PSR class identified by us, includes nearly all known tractable POMDPs. Furthermore, in terms of algorithm design, we propose a generic posterior sampling algorithm, which can be implemented in both model-free and model-based fashion, under both fully observable and partially observable settings. The proposed algorithm modifies the standard posterior sampling algorithm in two aspects: (i) we use an optimistic prior distribution that biases towards hypotheses with higher values and (ii) a loglikelihood function is set to be the empirical loss evaluated on the historical data, where the choice of loss function supports both model-free and model-based learning. We prove that the proposed algorithm is sample efficient by establishing a sublinear regret upper bound in terms of GEC. In summary, we provide a new and unified understanding of both fully observable and partially observable RL.

翻译:在互动决策的总体框架下,我们研究高效强化学习(RL),这包括Markov决策程序(MDP)、部分可见的Markov决策程序(POMDP)以及作为特例的预测状态代表(PSR)等互动决策总框架下的高效强化学习(RL)样本。为了找到增强样本高效学习能力的最低假设,我们提出了一种新的复杂度标准,即通用 Eluder 系数(GEC),这是在线互动决策中探索与开发之间的根本权衡。具体地说,GEC通过比较预测更新政策绩效与对历史数据进行评估的模拟培训错误(MDP)、部分可见的Markov决策程序(POMDP)以及预测状态代表(PSR)的难度。我们表明,低GEC的RL问题构成一个非常丰富的阶级,其次等分数是低的Bellman eluder层面问题、双线级、低级证人级别问题、PO-bilinear类以及通用的常规PSR(GSR),其中我们确定的一个新的可移动的PSR类别,包括几乎所有已知的POMDP。此外,我们建议的一种通用的后选算模型和高级(我们所拟在模型中,在模型中,可以完全地进行一个不偏差的排序中,在模型中采用一个不偏差的Sloralalal-lalalalalalalalalislisl),然后的计算。