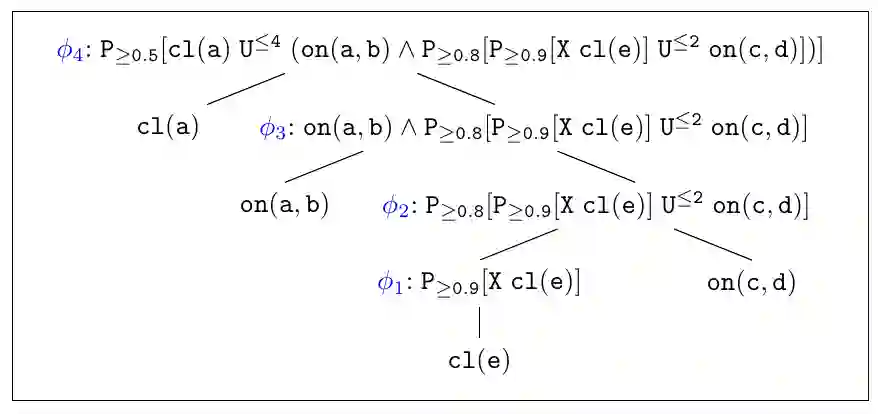

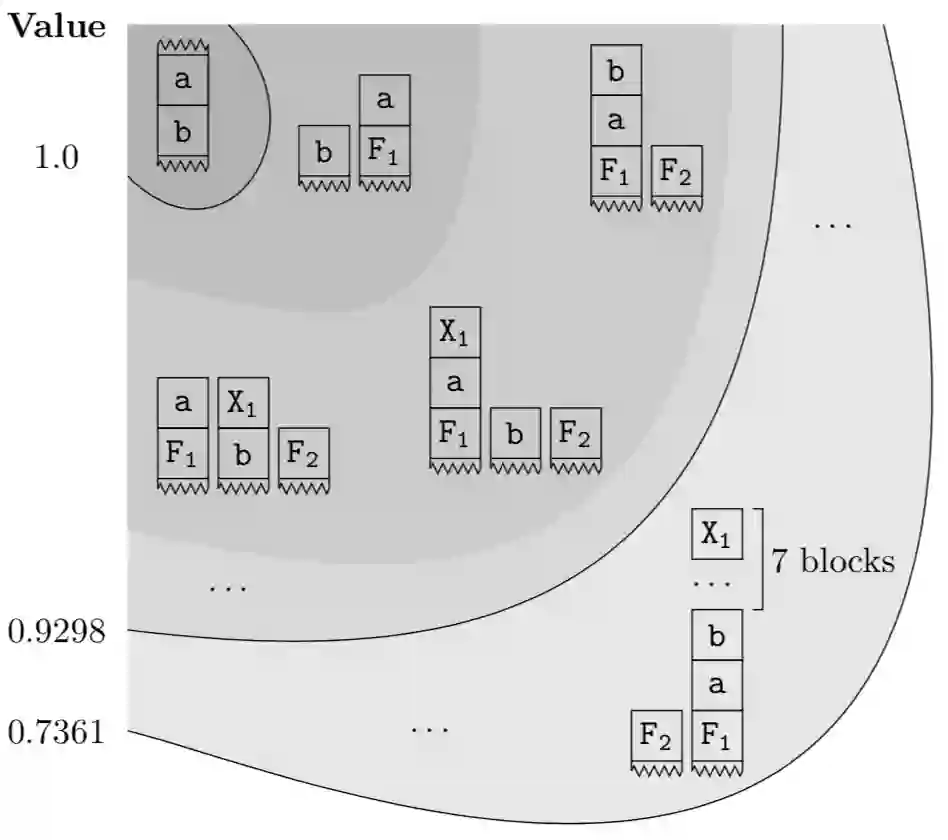

Model checking has been developed to verify systems with stochastic and non-deterministic behavior, and it is used to provide guarantees about such systems. While most model checking methods focus on propositional models, various probabilistic planning and reinforcement learning frameworks deal with relational domains, for instance STRIPS planning and relational Markov Decision Processes (MDPs). Using propositional model checking in relational settings requires one to ground the model, which leads to the well-known state explosion problem and to intractability. We present pCTL-REBEL, a lifted model checking approach for verifying pCTL properties on relational MDPs. It extends REBEL, the relational Bellman update operator, which is a lifted value iteration approach for model-based relational reinforcement learning, toward relational model checking. pCTL-REBEL is lifted, which means that rather than grounding the model, it exploits symmetries and reasons at an abstract relational level. Theoretically, we show that pCTL model checking is decidable for relational MDPs, even for possibly infinite domains, provided that the states have a bounded size. Practically, we contribute algorithms and an implementation of lifted relational model checking, and we show that the lifted approach improves the scalability of model checking.
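
To give a rough sense of why grounding causes state explosion, the sketch below counts the ground atoms of a relational vocabulary and the resulting upper bound on the number of propositional states. It is only an illustration: the function names and the example predicates (`on/2`, `clear/1`) are assumptions made here and are not taken from pCTL-REBEL, and the bound ignores domain constraints that would rule out many of these states.

```python
from typing import Sequence


def ground_atom_count(domain_size: int, arities: Sequence[int]) -> int:
    """Number of ground atoms: a predicate of arity a has domain_size**a groundings."""
    return sum(domain_size ** arity for arity in arities)


def propositional_state_bound(domain_size: int, arities: Sequence[int]) -> int:
    """Upper bound on propositional states: every ground atom is either true or false."""
    return 2 ** ground_atom_count(domain_size, arities)


if __name__ == "__main__":
    # Hypothetical vocabulary: on/2 and clear/1 (assumed for illustration only).
    arities = [2, 1]
    for n in (2, 3, 5, 10):
        atoms = ground_atom_count(n, arities)
        bound = propositional_state_bound(n, arities)
        print(f"objects={n:2d}  ground atoms={atoms:3d}  state bound=2^{atoms} ({bound:.3e})")
```

The number of ground atoms grows polynomially in the number of objects, but the state bound grows exponentially in the number of ground atoms, which is the blow-up that a lifted approach aims to avoid by reasoning over abstract states instead.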
