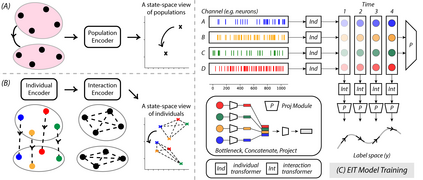

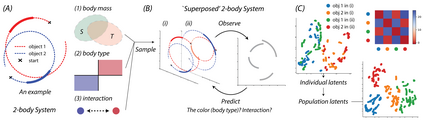

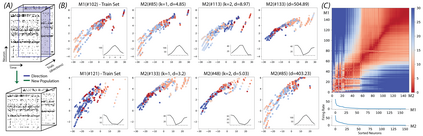

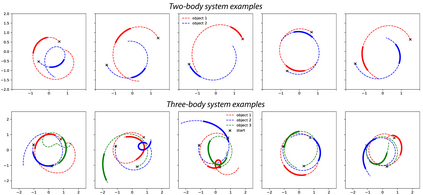

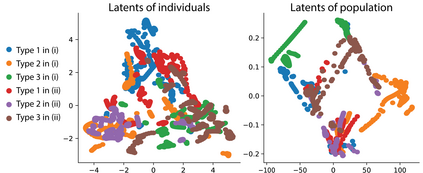

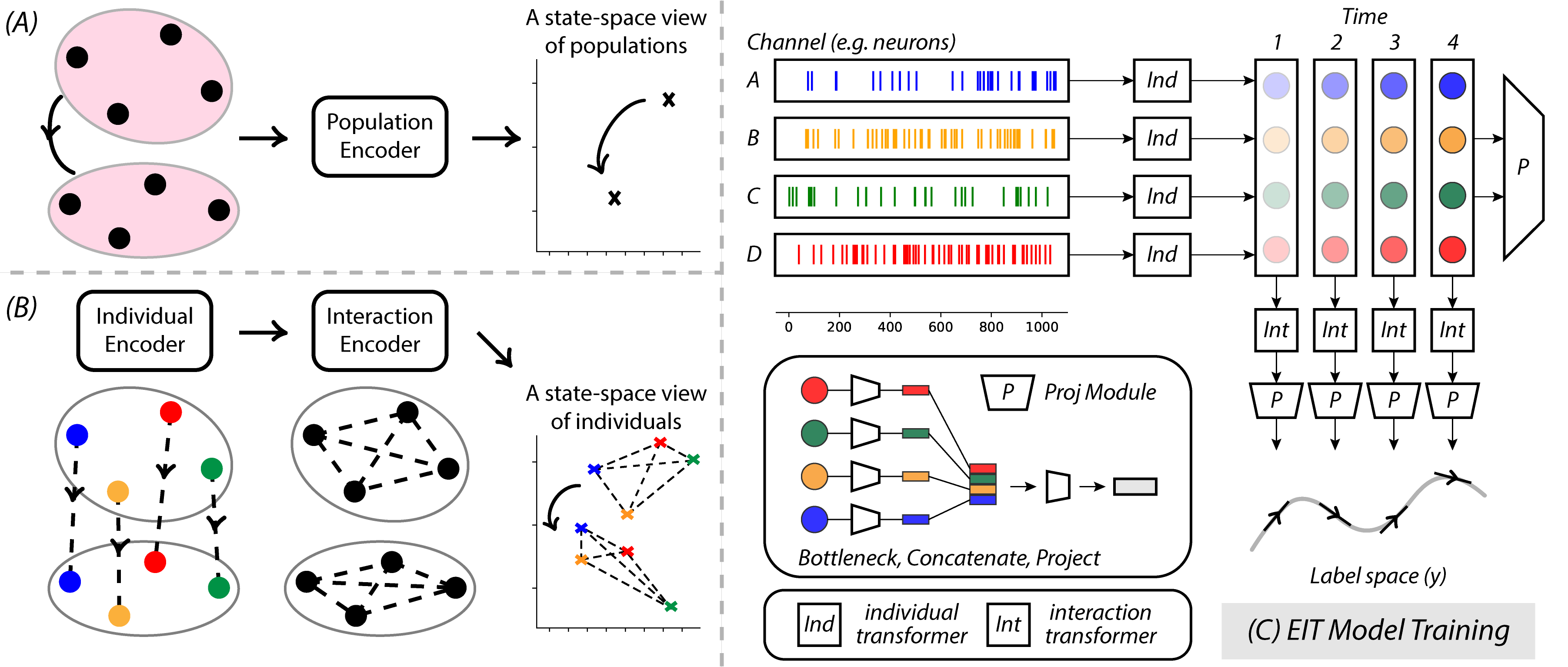

Complex time-varying systems are often studied by abstracting away from the dynamics of individual components and building a model of the population-level dynamics from the start. However, when building a population-level description, it is easy to lose sight of each individual and how it contributes to the larger picture. In this paper, we present a novel transformer architecture for learning from time-varying data that builds descriptions of both the individual and the collective population dynamics. Rather than combining all of our data into the model at the outset, we develop a separable architecture that operates on each individual time series first and then passes the results forward; this induces a permutation-invariance property and allows the model to transfer across systems of different size and order. After demonstrating that our model can successfully recover complex interactions and dynamics in many-body systems, we apply our approach to populations of neurons in the nervous system. On neural activity datasets, we show that our model not only yields robust decoding performance but also achieves strong transfer performance across recordings from different animals, without requiring any neuron-level correspondence between them. By enabling flexible pre-training that can be transferred to neural recordings of different size and order, our work provides a first step towards a foundation model for neural decoding.
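To make the separable, individual-then-population design concrete, here is a minimal NumPy sketch of the idea. It is not the authors' implementation. It assumes single-head attention with random, untrained weights, a temporal position embedding, and mean-pooling over channels as the population readout; the names `SeparableEncoder` and `self_attention` are hypothetical. Stage one applies the same attention over time to every channel independently. Stage two attends across channels at each time step with no channel position embedding, so reordering the channels leaves the pooled output unchanged.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(h, Wq, Wk, Wv):
    # Hypothetical single-head attention over the second-to-last axis of h.
    # h: (..., L, d) -> (..., L, d)
    q, k, v = h @ Wq, h @ Wk, h @ Wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


class SeparableEncoder:
    """Hypothetical sketch: per-channel temporal attention, then attention
    across channels, then a mean over channels (assumed readout)."""

    def __init__(self, d=16, max_T=50, seed=0):
        rng = np.random.default_rng(seed)
        s = 1.0 / np.sqrt(d)
        self.w_in = rng.normal(size=(1, d))                   # scalar sample -> d-dim token
        self.pos = rng.normal(scale=0.1, size=(max_T, d))     # temporal positions only
        self.time_attn = [rng.normal(scale=s, size=(d, d)) for _ in range(3)]
        self.pop_attn = [rng.normal(scale=s, size=(d, d)) for _ in range(3)]

    def __call__(self, x):
        # x: (N, T) array holding N individual time series of length T.
        N, T = x.shape
        h = x[..., None] @ self.w_in + self.pos[:T]           # (N, T, d)
        # Stage 1 (individual): shared weights, attention over time per channel.
        h = h + self_attention(h, *self.time_attn)
        # Stage 2 (population): attention across the N channels at each time step.
        z = np.swapaxes(h, 0, 1)                               # (T, N, d)
        z = z + self_attention(z, *self.pop_attn)
        # Mean over channels: invariant to channel order and independent of N.
        return z.mean(axis=1)                                  # (T, d)


enc = SeparableEncoder(d=16, max_T=50, seed=0)
rng = np.random.default_rng(1)
x = rng.normal(size=(8, 50))                 # 8 channels, 50 time steps
out = enc(x)
perm = rng.permutation(8)
print(out.shape)                             # (50, 16)
print(np.allclose(enc(x[perm]), out))        # True: channel order does not matter
print(enc(rng.normal(size=(20, 50))).shape)  # (50, 16): same weights, 20 channels
```

Because no weight in the sketch depends on the number of channels N, the same encoder accepts a recording with 8 channels or 20, which is the property that makes transfer across recordings without neuron-level correspondence possible in principle.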
