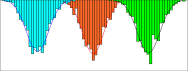

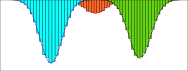

As an increasingly popular task in multimedia information retrieval, video moment retrieval (VMR) aims to localize the target moment in an untrimmed video according to a given language query. Most previous methods depend heavily on large numbers of manual annotations (i.e., moment boundaries), which are extremely expensive to acquire in practice. In addition, because of the domain gap between datasets, directly applying these pre-trained models to an unseen domain leads to a significant performance drop. In this paper, we focus on a novel task, cross-domain VMR, in which fully annotated datasets are available in one domain (the "source domain") but the domain of interest (the "target domain") contains only unannotated data. To the best of our knowledge, this is the first study of cross-domain VMR. To address this new task, we propose a novel Multi-Modal Cross-Domain Alignment (MMCDA) network that transfers annotation knowledge from the source domain to the target domain. Because of the domain discrepancy between the source and target domains and the semantic gap between videos and queries, models trained on the source domain generally suffer a performance drop when applied directly to the target domain. To solve this problem, we develop three novel modules: (i) a domain alignment module that aligns the feature distributions of each modality across domains; (ii) a cross-modal alignment module that maps video and query features into a joint embedding space and aligns the feature distributions of the two modalities in the target domain; and (iii) a specific alignment module that computes the fine-grained similarity between a specific frame and the given query for optimal localization. By jointly training these three modules, MMCDA can learn domain-invariant and semantically aligned cross-modal representations.
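
The abstract does not say which distribution distance the alignment modules minimize or how the frame-query similarity is computed. The sketch below assumes two common choices: a biased estimate of squared Maximum Mean Discrepancy (MMD) with a Gaussian (RBF) kernel as a stand-in for "aligning feature distributions" (between domains, or between modalities in the target domain), and cosine similarity as a stand-in for the frame-level "specific alignment" score. The function names (`mmd2`, `frame_query_scores`) and the kernel bandwidth `sigma` are hypothetical illustrations, not the MMCDA implementation.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def rbf_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """Gaussian kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def _mean_kernel(a: List[Vector], b: List[Vector], sigma: float) -> float:
    """Average kernel value over all pairs (x in a, y in b)."""
    total = sum(rbf_kernel(x, y, sigma) for x in a for y in b)
    return total / (len(a) * len(b))


def mmd2(source: List[Vector], target: List[Vector], sigma: float = 1.0) -> float:
    """Biased squared-MMD estimate between two feature sets.

    Assumed stand-in for a distribution-alignment loss: it is 0 when both
    sets are identical and grows as their feature distributions drift apart.
    """
    return (
        _mean_kernel(source, source, sigma)
        + _mean_kernel(target, target, sigma)
        - 2.0 * _mean_kernel(source, target, sigma)
    )


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def frame_query_scores(frames: List[Vector], query: Vector) -> List[float]:
    """Assumed frame-level score: cosine similarity of each frame to the query."""
    return [cosine(frame, query) for frame in frames]


if __name__ == "__main__":
    src = [[0.0, 0.0], [0.1, 0.0]]
    near = [[0.0, 0.1], [0.1, 0.1]]
    far = [[3.0, 3.0], [3.1, 3.0]]
    print(mmd2(src, near) < mmd2(src, far))  # closer distributions give lower MMD
    scores = frame_query_scores([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [1.0, 0.0])
    print(max(range(len(scores)), key=scores.__getitem__))  # best-matching frame index
```

In this toy setup, a lower `mmd2` means the two feature sets are closer in distribution, which is the property a domain or cross-modal alignment loss would push toward during joint training; the per-frame scores could then be used to pick the frame that best matches the query.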

翻译:由于多媒体信息检索中越来越受欢迎的任务,视频时刻检索(VMR)旨在将目标时刻从未剪裁的视频中根据特定语言查询将目标时刻本地化。 多数先前的方法主要依赖大量手动说明( 即时间界限), 这些说明实际上非常昂贵。 此外, 由于不同数据集之间的域间差异, 直接将这些预先培训的模型应用到一个看不见的域内, 导致显著的性能下降。 在本文中, 我们侧重于一个新颖的任务: 跨域的 VMRR, 在一个域( “ 源域” ) 中可以提供完全附加说明的数据集, 但兴趣域( “ 目标域” 目标域域域” ) 只包含不附加说明的数据集。 据我们所知, 我们首次介绍跨多域域内 VMMRRMR模式的研究。 为了应对这一新任务, 我们提议一个新颖的多模式交叉版本的对调校正( MMDA) 网络, 从源域域向目标域向目标域内传输注释知识。 然而, 由于源域与目标域域域域间和显示的精细校正的校正方向之间, 我们直接应用了一个特定的校正的模模型到三个域域域域域内的一个域域域内, 。