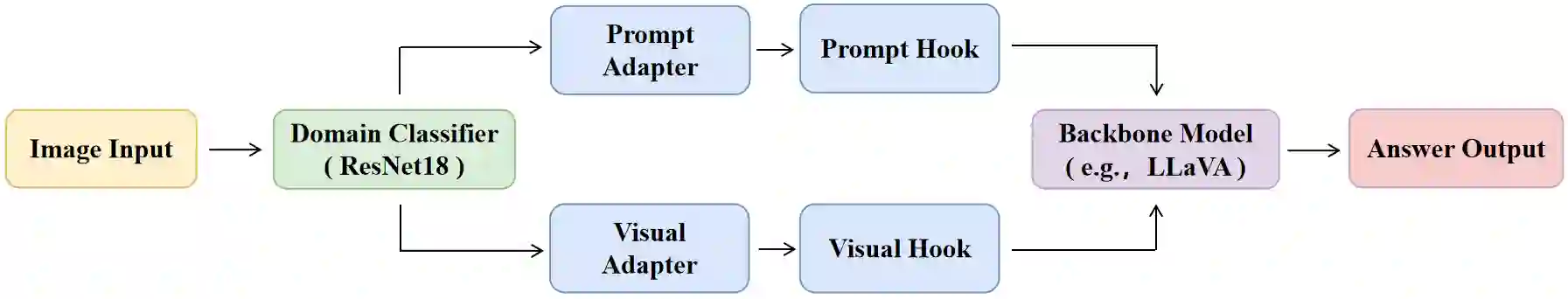

Recent advances in Visual Question Answering (VQA) have demonstrated impressive performance on natural images, with models such as LLaVA leveraging large language models (LLMs) for open-ended reasoning. However, their generalization degrades significantly when they are transferred to out-of-domain settings such as remote sensing, medical imaging, or math diagrams, owing to large distribution shifts and the lack of effective domain adaptation mechanisms. Existing approaches typically rely on per-domain fine-tuning or bespoke pipelines, which are costly, inflexible, and difficult to scale across diverse tasks. In this paper, we propose CATCH, a plug-and-play framework for cross-domain adaptation that improves the generalization of VQA models while requiring minimal changes to their core architecture. Our key idea is to decouple visual and linguistic adaptation by introducing two lightweight modules: a domain classifier that identifies the input image type, and a dual adapter mechanism comprising a Prompt Adapter for language modulation and a Visual Adapter for vision feature adjustment. Both modules are dynamically injected through a unified hook interface, so the backbone model requires no retraining. Experiments on four domain-specific VQA benchmarks show that the framework achieves consistent performance gains, including +2.3 BLEU on MathVQA, +2.6 VQA score on MedVQA-RAD, and +3.1 ROUGE on ChartQA. These results indicate that CATCH offers a scalable and extensible approach to multi-domain VQA, enabling practical deployment across diverse application domains.
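The routing described above (classify the image domain, then inject that domain's Prompt Adapter and Visual Adapter into a frozen backbone through hooks) can be illustrated with a minimal, dependency-free sketch. Everything in it is an assumption made for illustration rather than the paper's implementation: the class names (`HookRegistry`, `FrozenBackbone`, `CatchWrapper`, and the adapter classes) are hypothetical, the nearest-centroid domain classifier, the FiLM-style residual scale-and-shift Visual Adapter, and the text-prefix Prompt Adapter are stand-ins chosen for brevity, and vectors are plain Python lists instead of tensors.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Vector = List[float]


class HookRegistry:
    # Hypothetical "unified hook interface": named injection points in a frozen model.
    def __init__(self) -> None:
        self._hooks: Dict[str, List[Callable]] = {}

    def register(self, point: str, fn: Callable) -> None:
        self._hooks.setdefault(point, []).append(fn)

    def run(self, point: str, value, **ctx):
        # Pass the intermediate value through every hook at this point, in order.
        for fn in self._hooks.get(point, []):
            value = fn(value, **ctx)
        return value


class FrozenBackbone:
    # Toy stand-in for a frozen VQA model; nothing below updates its parameters.
    def __init__(self, hooks: HookRegistry) -> None:
        self.hooks = hooks

    def encode_image(self, pixels: Vector) -> Vector:
        return [2.0 * p for p in pixels]  # fixed, purely illustrative "encoder"

    def forward(self, pixels: Vector, question: str, **ctx) -> dict:
        feats = self.hooks.run("vision", self.encode_image(pixels), **ctx)
        prompt = self.hooks.run("prompt", question, **ctx)
        # A real model would now decode an answer from (feats, prompt).
        return {"features": feats, "prompt": prompt}


@dataclass
class NearestCentroidClassifier:
    # Assumed domain classifier: returns the domain with the closest centroid.
    centroids: Dict[str, Vector]

    def __call__(self, feats: Vector) -> str:
        def sq_dist(c: Vector) -> float:
            return sum((a - b) ** 2 for a, b in zip(feats, c))
        return min(self.centroids, key=lambda d: sq_dist(self.centroids[d]))


@dataclass
class VisualAdapter:
    # Assumed FiLM-style adapter: per-dimension residual scale and shift.
    gamma: Vector
    beta: Vector

    def __call__(self, feats: Vector) -> Vector:
        return [f * (1.0 + g) + b for f, g, b in zip(feats, self.gamma, self.beta)]


@dataclass
class PromptAdapter:
    # Assumed text prefix standing in for learned prompt modulation.
    prefix: str

    def __call__(self, question: str) -> str:
        return f"{self.prefix} {question}"


class CatchWrapper:
    # Routes each input to its domain's adapters, injected via the backbone's hooks.
    def __init__(self, backbone: FrozenBackbone, classifier: NearestCentroidClassifier,
                 visual_adapters: Dict[str, VisualAdapter],
                 prompt_adapters: Dict[str, PromptAdapter]) -> None:
        self.backbone, self.classifier = backbone, classifier
        self.visual_adapters, self.prompt_adapters = visual_adapters, prompt_adapters
        backbone.hooks.register("vision", self._visual_hook)
        backbone.hooks.register("prompt", self._prompt_hook)

    def _visual_hook(self, feats: Vector, domain: Optional[str] = None, **_) -> Vector:
        adapter = self.visual_adapters.get(domain)
        return feats if adapter is None else adapter(feats)  # no adapter: identity

    def _prompt_hook(self, question: str, domain: Optional[str] = None, **_) -> str:
        adapter = self.prompt_adapters.get(domain)
        return question if adapter is None else adapter(question)

    def __call__(self, pixels: Vector, question: str) -> Tuple[str, dict]:
        # 1) Classify the image type from un-adapted backbone features.
        domain = self.classifier(self.backbone.encode_image(pixels))
        # 2) Run the frozen backbone; the hooks apply that domain's adapters.
        return domain, self.backbone.forward(pixels, question, domain=domain)


if __name__ == "__main__":
    catch = CatchWrapper(
        FrozenBackbone(HookRegistry()),
        NearestCentroidClassifier({"natural": [0.0, 0.0], "medical": [2.0, 2.0]}),
        visual_adapters={"medical": VisualAdapter(gamma=[0.5, 0.5], beta=[0.1, -0.1])},
        prompt_adapters={"medical": PromptAdapter("[radiology image]")},
    )
    print(catch([1.0, 1.0], "Is there a fracture?"))
```

In this sketch the adapters live entirely outside the backbone and are attached only through the hook points, so supporting a new domain means adding a centroid and adapter entries rather than touching backbone weights; a domain without adapters simply passes through unchanged. In a PyTorch setting, `torch.nn.Module.register_forward_hook` (whose hook may return a replacement output) would be one natural analogue for the vision-side injection, though the paper does not state which mechanism it uses.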

翻译:视觉问答(VQA)领域的最新进展在自然图像域中展示了令人印象深刻的性能,诸如LLaVA等模型利用大型语言模型(LLM)进行开放式推理。然而,当迁移到遥感、医学影像或数学图表等域外场景时,由于显著的分布偏移和缺乏有效的域适应机制,其泛化能力会大幅下降。现有方法通常依赖于针对每个域的微调或定制化流程,这些方法成本高昂、灵活性差,且难以跨不同任务扩展。本文提出CATCH,一种即插即用的跨域适应框架,旨在提升VQA模型的泛化能力,同时对其核心架构仅需最小改动。我们的核心思想是通过引入两个轻量级模块来解耦视觉与语言适应:一个用于识别输入图像类型的域分类器,以及一个包含用于语言调制的提示适配器和用于视觉特征调整的视觉适配器的双重适配机制。这两个模块通过统一的钩子接口动态注入,无需对骨干模型进行重新训练。在四个特定领域的VQA基准测试上的实验结果表明,我们的框架在不重新训练骨干模型的情况下实现了持续的性能提升,包括在MathVQA上提升+2.3 BLEU,在MedVQA-RAD上提升+2.6 VQA分数,在ChartQA上提升+3.1 ROUGE分数。这些结果突显了CATCH为多域VQA提供了一种可扩展且可扩展的方法,使得在不同应用领域的实际部署成为可能。