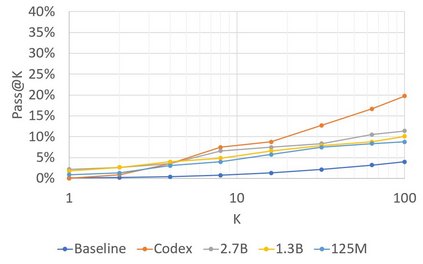

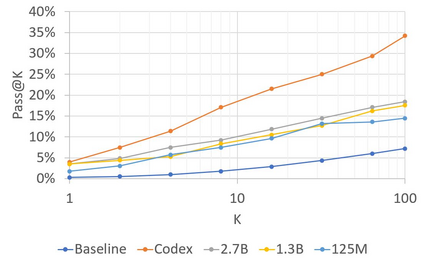

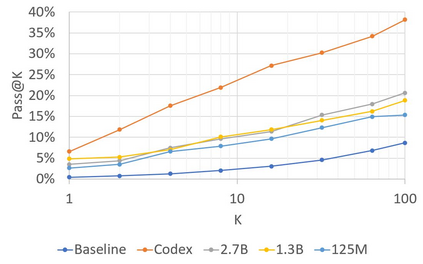

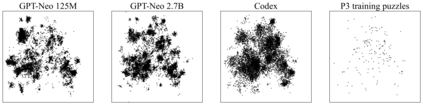

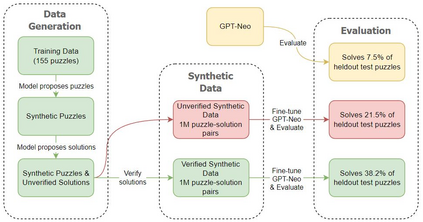

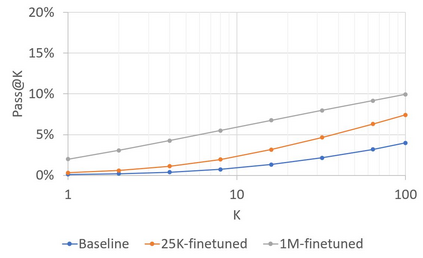

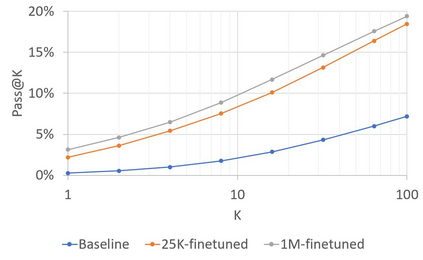

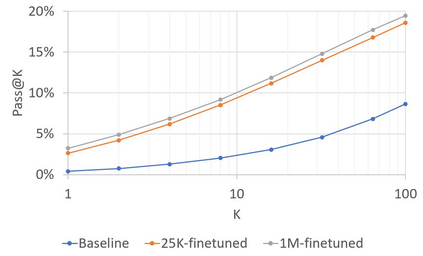

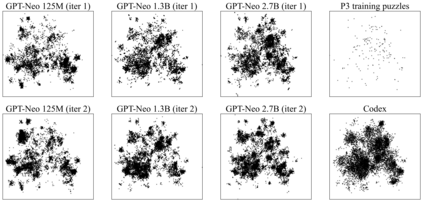

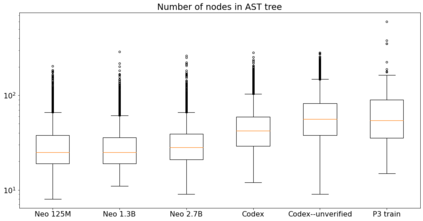

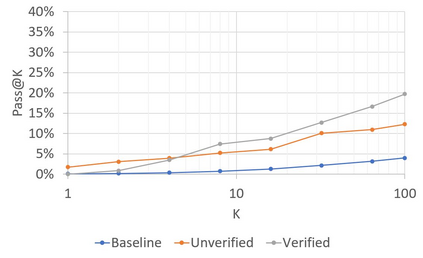

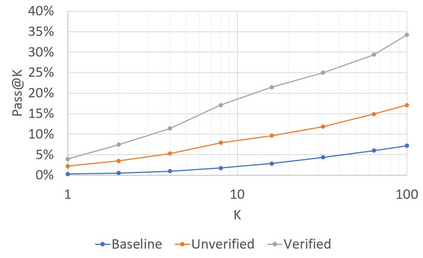

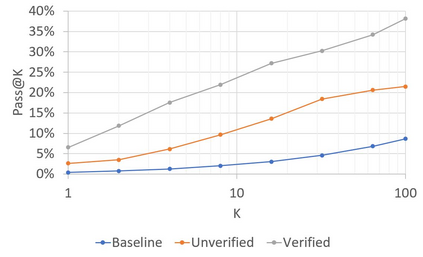

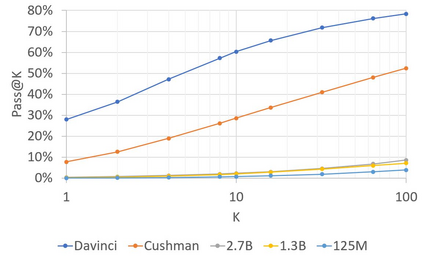

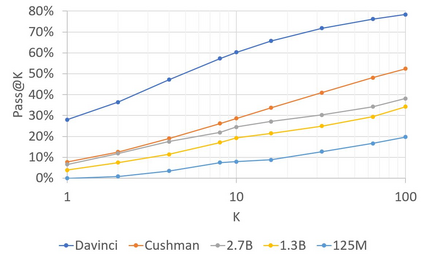

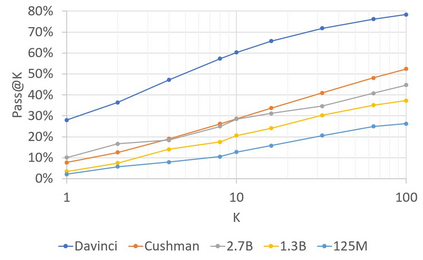

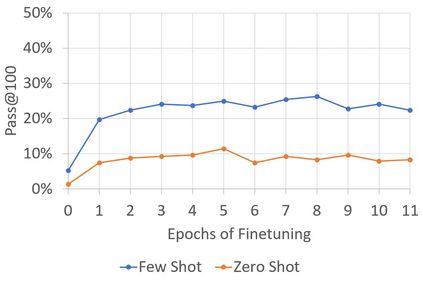

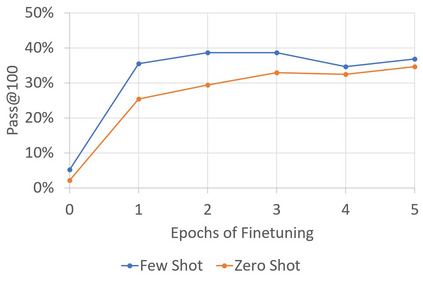

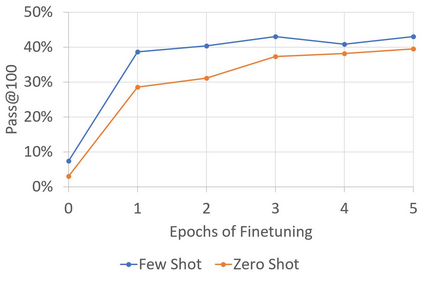

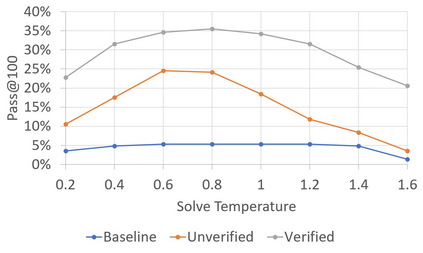

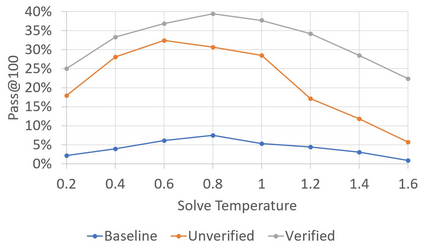

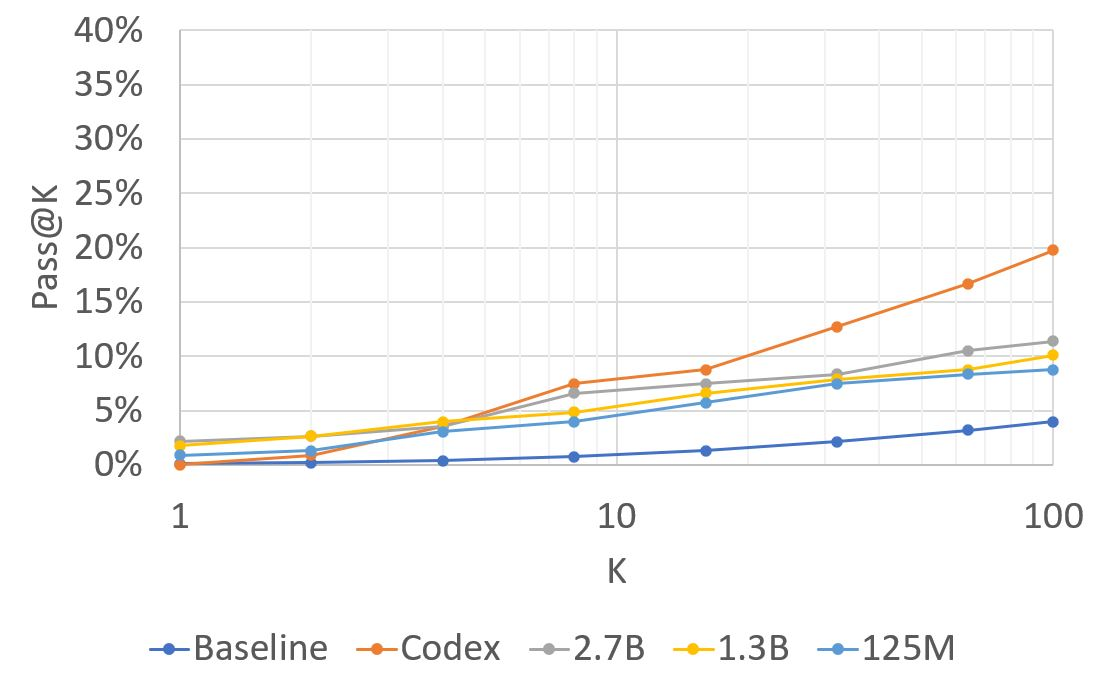

Recent Language Models (LMs) achieve breakthrough performance in code generation when trained on human-authored problems, even solving some competitive-programming problems. Self-play has proven useful in games such as Go, and thus it is natural to ask whether LMs can generate their own instructive programming problems to improve their performance. We show that it is possible for an LM to synthesize programming problems and solutions, which are filtered for correctness by a Python interpreter. The LM's performance is then seen to improve when it is fine-tuned on its own synthetic problems and verified solutions; thus the model 'improves itself' using the Python interpreter. Problems are specified formally as programming puzzles [Schuster et al., 2021], a code-based problem format where solutions can easily be verified for correctness by execution. In experiments on publicly-available LMs, test accuracy more than doubles. This work demonstrates the potential for code LMs, with an interpreter, to generate instructive problems and improve their own performance.
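To make the puzzle format concrete, the following is a minimal sketch of how an interpreter can filter candidate puzzle-solution pairs. It assumes the common convention in which a puzzle is a function `f` that returns `True` on a valid answer and a solution is a zero-argument function `g` that produces that answer. The helper name `verify` and the example strings `PUZZLE`, `GOOD`, and `BAD` are hypothetical illustrations, not code from the paper, and a real pipeline would also need timeouts and sandboxing before executing model-generated code.

```python
def verify(puzzle_src: str, solution_src: str) -> bool:
    """Return True only if the candidate solution satisfies the puzzle.

    Hypothetical helper: runs both sources in a fresh namespace and
    checks f(g()). No timeout or sandbox is applied in this sketch.
    """
    namespace: dict = {}
    try:
        exec(puzzle_src, namespace)    # expected to define f
        exec(solution_src, namespace)  # expected to define g
        return namespace["f"](namespace["g"]()) is True
    except Exception:
        # Syntax errors, missing names, and runtime errors all count as failure.
        return False


# A toy puzzle: find a 5-character string containing exactly three 'a's.
PUZZLE = '''
def f(s: str) -> bool:
    return s.count("a") == 3 and len(s) == 5
'''

# One correct and one incorrect candidate solution.
GOOD = '''
def g():
    return "aaabb"
'''

BAD = '''
def g():
    return "ab"
'''

# Keep only the pairs that the interpreter confirms as correct.
candidates = [(PUZZLE, GOOD), (PUZZLE, BAD)]
verified = [(p, s) for p, s in candidates if verify(p, s)]
```

Only pairs that pass this check would be kept as fine-tuning data. Because the check is plain execution, no human labeling is needed to decide whether a synthetic solution is correct.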
