[GitHub Project Recommendation] Several of the Best Deep Learning Methods for Text Classification, Implemented in TensorFlow

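[Introduction] Text classification is a common NLP task, and many deep learning methods for it have appeared in recent years, such as TextCNN, attention-based bidirectional LSTMs, adversarial training, and self-attention. Implementing these models on a task as simple as text classification is therefore a good way to learn different deep network architectures. The project is well worth bookmarking and studying.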

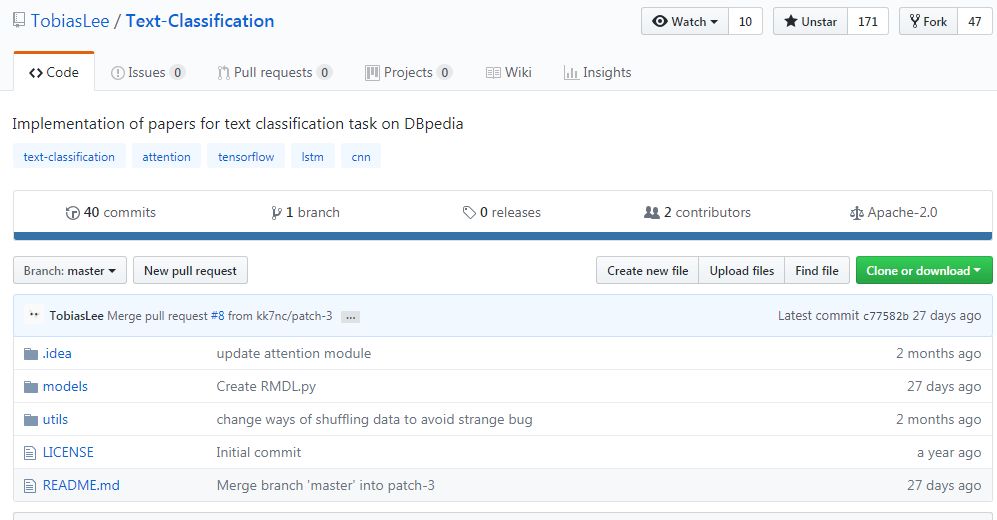

Author: TobiasLee

GitHub link:

https://github.com/TobiasLee/Text-Classification

Text-Classification

Implement some state-of-the-art text classification models with TensorFlow.

Requirements

Python3

TensorFlow >= 1.4

Dataset

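You can load the data with:

```python
import tensorflow as tf  # TensorFlow 1.x only: tf.contrib was removed in 2.0
dbpedia = tf.contrib.learn.datasets.load_dataset('dbpedia', test_with_fake_data=False)  # True loads small fake data for tests
```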

Attention Is All You Need

Paper: Attention Is All You Need

See multi_head.py

Uses self-attention, where Query = Key = Value = the sentence after word embedding.

The multi-head attention module is implemented by Kyubyong.
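The sketch below shows the core idea with a single head and no learned Q/K/V projections. The paper's multi-head attention adds both. Every position of the embedded sentence attends to every position, and the scores are scaled by √d as in the paper. It uses plain Python lists so it runs without TensorFlow. `self_attention` is a hypothetical helper, not the API of multi_head.py.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention with Query = Key = Value = x.

    x holds one word-embedding row per token, shape [seq_len, d].
    Learned projections and multiple heads are omitted in this sketch.
    """
    d = len(x[0])
    scale = math.sqrt(d)
    outputs = []
    for query in x:
        # Score this query against every key (the keys are the same rows)
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in x]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value rows
        outputs.append([sum(w * value[j] for w, value in zip(weights, x)) for j in range(d)])
    return outputs


sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
encoded = self_attention(sentence)
print(len(encoded), len(encoded[0]))
```

For classification, the encoded rows would then be pooled into a single vector, for example by averaging, and passed to a softmax layer. The exact pooling used in multi_head.py is not described here.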

IndRNN for Text Classification

Paper: Independently Recurrent Neural Network (IndRNN): Building A Longer and Deeper RNN

IndRNNCell is implemented by batzener
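IndRNN replaces the full recurrent matrix with one recurrent weight per neuron, so each hidden unit sees only its own previous state: `h_t = σ(W x_t + u ⊙ h_{t-1} + b)`. The sketch below assumes ReLU for σ and uses plain Python lists. `indrnn_layer` is a hypothetical name, not the interface of the IndRNNCell mentioned above.

```python
from typing import List


def indrnn_layer(inputs: List[List[float]], W: List[List[float]],
                 u: List[float], b: List[float]) -> List[List[float]]:
    """Run one IndRNN layer over a sequence and return every hidden state.

    inputs: sequence of input vectors x_t, each of length input_dim
    W:      hidden_dim x input_dim input-to-hidden weights
    u:      one recurrent weight per hidden neuron (length hidden_dim)
    b:      bias vector (length hidden_dim)
    """
    h = [0.0] * len(u)  # initial hidden state
    states = []
    for x in inputs:
        # Neuron i combines the current input with only its OWN previous state h[i]
        h = [
            max(0.0, sum(w * xj for w, xj in zip(W[i], x)) + u[i] * h[i] + b[i])  # ReLU
            for i in range(len(u))
        ]
        states.append(h)
    return states


states = indrnn_layer(inputs=[[1.0], [1.0], [1.0]],
                      W=[[1.0], [1.0]], u=[0.5, -1.0], b=[0.0, 0.0])
print(states)  # [[1.0, 1.0], [1.5, 0.0], [1.75, 1.0]]
```

Neuron i's recurrent contribution is just `u[i] * h[i]`, so its gradient through time scales with powers of `u[i]`. The paper bounds the magnitude of the recurrent weights to keep these gradients from exploding or vanishing on long sequences.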

Attention-Based Bidirectional LSTM for Text Classification

Paper: Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification

See attn_bi_lstm.py
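In the paper, the attention layer pools the BiLSTM outputs H into a single sentence vector: `M = tanh(H)`, `α = softmax(wᵀM)`, `r = Hαᵀ`, `h* = tanh(r)`. H has one column per time step, and the forward and backward states are summed element-wise. The sketch below covers only this pooling step and assumes the BiLSTM outputs are already computed. `attention_pool` is a hypothetical helper, not code from attn_bi_lstm.py.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(H: List[List[float]], w: List[float]) -> Tuple[List[float], List[float]]:
    """Pool T BiLSTM output vectors (each of length d) into one sentence vector.

    Follows M = tanh(H), alpha = softmax(w^T M), r = H alpha^T, h* = tanh(r).
    Returns (h_star, alpha).
    """
    # One scalar score per time step: w^T tanh(h_t)
    scores = [sum(wj * math.tanh(hj) for wj, hj in zip(w, h_t)) for h_t in H]
    alpha = softmax(scores)
    d = len(H[0])
    # r is the attention-weighted sum of the hidden states
    r = [sum(a * h_t[j] for a, h_t in zip(alpha, H)) for j in range(d)]
    return [math.tanh(v) for v in r], alpha


H = [[1.0, -1.0], [3.0, 1.0]]  # two time steps, d = 2
h_star, alpha = attention_pool(H, w=[0.0, 0.0])
print(alpha)  # a zero attention vector gives uniform weights
```

In training, `w` is learned jointly with the LSTM, and `h_star` feeds the softmax classifier.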

Hierarchical Attention Networks for Text Classification

Paper: Hierarchical Attention Networks for Document Classification

See attn_lstm_hierarchical.py

The attention module is implemented by ilivans/tf-rnn-attention.
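HAN applies the same attention pattern twice. Word-level attention turns each sentence's word states into a sentence vector. Sentence-level attention then turns the sentence vectors into a document vector. At each level, states are scored against a learned context vector: `u_t = tanh(W h_t + b)`, `α_t = softmax(u_tᵀ u_ctx)`, `v = Σ α_t h_t`.

The sketch below makes two simplifying assumptions. First, the bidirectional GRU encoders from the paper have already produced the word states. Second, the sentence-level encoder is skipped. All names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
# (W, b, context): projection matrix, bias, and learned context vector for one level
Params = Tuple[List[Vector], Vector, Vector]


def softmax(scores: Vector) -> Vector:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_attention(H: List[Vector], params: Params) -> Vector:
    """u_t = tanh(W h_t + b); alpha = softmax(u_t . context); return sum_t alpha_t * h_t."""
    W, b, context = params
    scores = []
    for h in H:
        u = [math.tanh(sum(wij * hj for wij, hj in zip(row, h)) + bi) for row, bi in zip(W, b)]
        scores.append(sum(ui * ci for ui, ci in zip(u, context)))
    alpha = softmax(scores)
    return [sum(a * h[j] for a, h in zip(alpha, H)) for j in range(len(H[0]))]


def han_document_vector(doc: List[List[Vector]], word_params: Params, sent_params: Params) -> Vector:
    """doc is a list of sentences; each sentence is a list of (already encoded) word states."""
    # Word level: one vector per sentence
    sentence_vectors = [context_attention(words, word_params) for words in doc]
    # Sentence level: one vector for the whole document
    return context_attention(sentence_vectors, sent_params)


identity = [[1.0, 0.0], [0.0, 1.0]]
zero_context: Params = (identity, [0.0, 0.0], [0.0, 0.0])  # uniform attention
doc = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 2.0]]]
print(han_document_vector(doc, zero_context, zero_context))  # [1.25, 1.25]
```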

Adversarial Training Methods For Supervised Text Classification

Paper: Adversarial Training Methods For Semi-Supervised Text Classification

See: adversrial_abblstm.py
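The method perturbs the (normalized) word embeddings rather than the discrete tokens. The perturbation is `r_adv = ε·g/‖g‖₂`, where g is the gradient of the loss with respect to the embedded input. This is equivalent to the paper's form, which uses −ε times the normalized gradient of the log-likelihood. The loss on the perturbed input is then added to the training objective.

The sketch below uses a toy logistic-regression "model" with a hand-written gradient so it runs without TensorFlow. In a real network, g would come from automatic differentiation and be treated as a constant, for example via `tf.stop_gradient`. Equal weighting of the clean and adversarial losses is an assumption here.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_input_grad(w: List[float], x: List[float], y: int) -> Tuple[float, List[float]]:
    """Toy model p(y=1|x) = sigmoid(w . x); returns (-log p(y|x), d loss / d x)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    loss = -math.log(p if y == 1 else 1.0 - p)
    grad = [(p - y) * wi for wi in w]
    return loss, grad


def adversarial_perturbation(grad: List[float], epsilon: float) -> List[float]:
    """r_adv = epsilon * g / ||g||_2: the L2-bounded step that most increases the loss (to first order)."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return [0.0] * len(grad)
    return [epsilon * g / norm for g in grad]


w = [1.0, -2.0]
x = [0.5, 0.5]  # stands in for an embedded input
y = 1
clean_loss, grad = loss_and_input_grad(w, x, y)
r_adv = adversarial_perturbation(grad, epsilon=0.1)
adv_loss, _ = loss_and_input_grad(w, [xi + ri for xi, ri in zip(x, r_adv)], y)
total_loss = clean_loss + adv_loss  # clean + adversarial loss, equal weighting assumed
print(round(clean_loss, 4), round(adv_loss, 4))
```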

Convolutional Neural Networks for Sentence Classification

Paper: Convolutional Neural Networks for Sentence Classification

See: cnn.py
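Kim's CNN slides filters of several widths over the sequence of word embeddings and applies a ReLU. It then keeps only each filter's maximum activation over time (max-over-time pooling). The pooled features are concatenated and fed to a softmax layer with dropout.

The sketch below covers the convolution and pooling step in plain Python, with one filter per width. The paper uses widths 3, 4 and 5 with 100 feature maps each. The names are hypothetical and not taken from cnn.py.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def conv_max_pool(sentence: Matrix, filt: Matrix, bias: float) -> float:
    """Convolve one filter (k rows of embedding width) over the sentence and max-pool over time.

    Assumes len(sentence) >= k; shorter sentences should be padded first.
    """
    k = len(filt)
    activations = []
    for start in range(len(sentence) - k + 1):
        window = sentence[start:start + k]
        # Dot product between the filter and a window of k consecutive word vectors
        z = bias + sum(f * x for f_row, x_row in zip(filt, window) for f, x in zip(f_row, x_row))
        activations.append(max(0.0, z))  # ReLU
    return max(activations)  # max-over-time pooling


def textcnn_features(sentence: Matrix, filters: List[Tuple[Matrix, float]]) -> List[float]:
    """One pooled feature per (filter, bias); this vector would feed the softmax layer."""
    return [conv_max_pool(sentence, filt, bias) for filt, bias in filters]


sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # 4 words, embedding dim 2
filters = [
    ([[1.0, 0.0], [0.0, 1.0]], 0.0),               # width-2 filter
    ([[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]], -1.0),  # width-3 filter
]
print(textcnn_features(sentence, filters))  # [2.0, 1.0]
```

Max-over-time pooling yields one feature per filter regardless of sentence length, which is what lets variable-length sentences share a fixed-size classifier.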

RMDL: Random Multimodel Deep Learning for Classification

Paper: RMDL: Random Multimodel Deep Learning for Classification

See: RMDL.py and the RMDL GitHub repository.

Note: The parameters are not fine-tuned; you can modify the kernel as you want.
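RMDL trains a number of randomly generated deep models and combines their predictions by majority vote. The models are DNNs, CNNs and RNNs, each with a randomly chosen number of layers and units. The sketch below shows only those two ideas, random configuration sampling and voting, over a hypothetical search space. Training each model is left out, and the function names are not taken from RMDL.py.

```python
import random
from collections import Counter
from typing import Dict, List


def sample_random_models(n: int, rng: random.Random) -> List[Dict[str, object]]:
    """Draw n random model configurations from a hypothetical search space."""
    return [
        {
            "type": rng.choice(["DNN", "CNN", "RNN"]),
            "layers": rng.randint(1, 4),   # inclusive on both ends
            "units": rng.choice([64, 128, 256]),
        }
        for _ in range(n)
    ]


def majority_vote(predictions: List[List[int]]) -> List[int]:
    """predictions[m][i] is model m's label for example i.

    Ties go to the tied label that appears first in model order
    (Counter.most_common keeps first-encountered order among equal counts).
    """
    n_examples = len(predictions[0])
    return [
        Counter(model_preds[i] for model_preds in predictions).most_common(1)[0][0]
        for i in range(n_examples)
    ]


configs = sample_random_models(3, random.Random(0))  # e.g. 3 random models
preds = [
    [0, 1, 2, 1],  # model 1
    [0, 2, 2, 1],  # model 2
    [1, 1, 2, 0],  # model 3
]
print(majority_vote(preds))  # [0, 1, 2, 1]
```

An odd number of models avoids ties in binary tasks. With more classes, the tie-break rule above is one arbitrary but deterministic choice.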

Performance

| Model | Test Accuracy | Notes |
|---|---|---|
| Attention-based Bi-LSTM | 98.23% | |
| HAN | 89.15% | 1080Ti, 10 epochs, 12 min |
| Adversarial Attention-based Bi-LSTM | 98.5% | AWS p2, 2 hours |
| IndRNN | 98.39% | 1080Ti, 10 epochs, 10 min |
| Attention Is All You Need | 97.81% | 1080Ti, 15 epochs, 8 min |
| RMDL | 98.91% | 2X Tesla Xp (3 RDLs) |
| CNN | To be tested | To be done |
