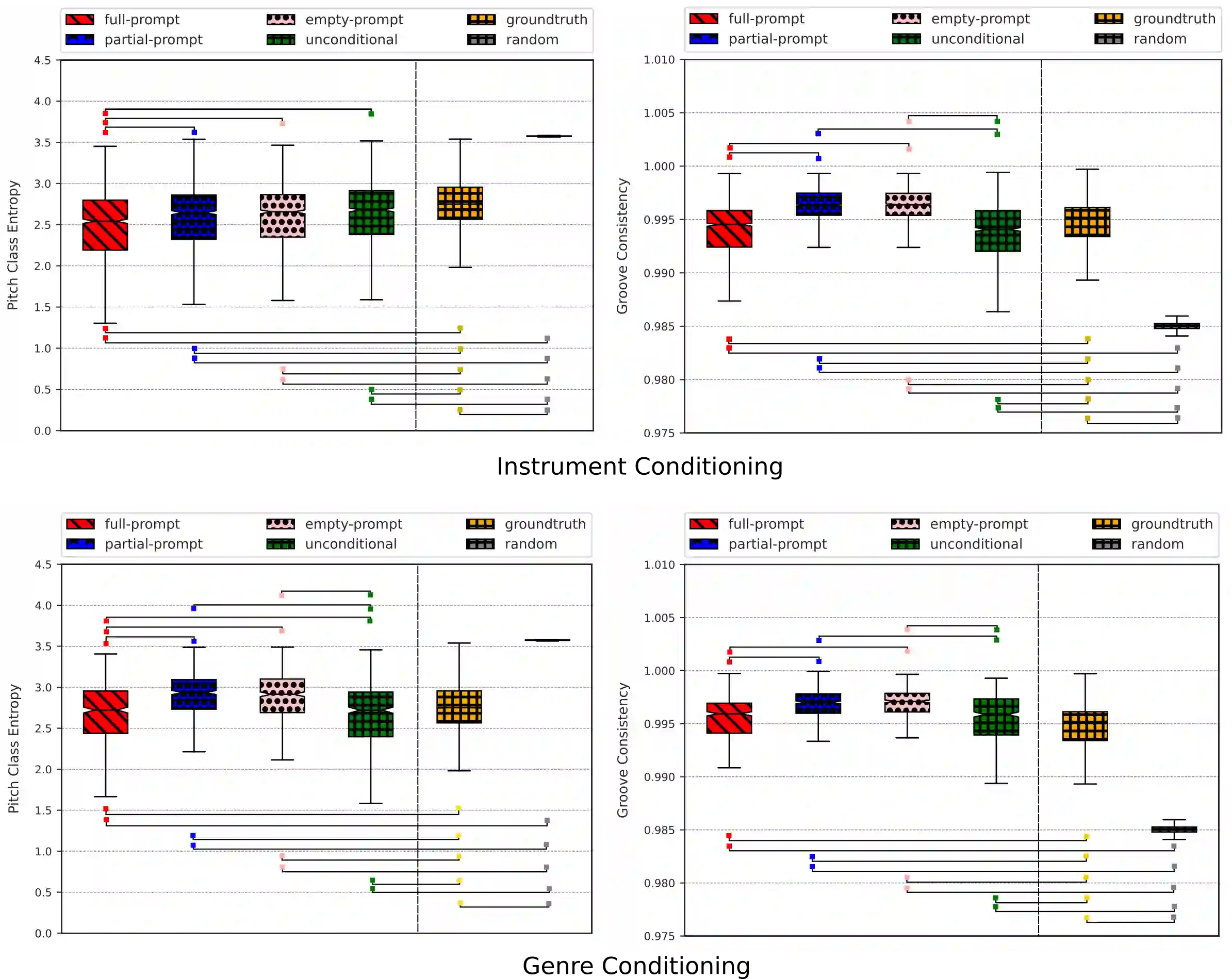

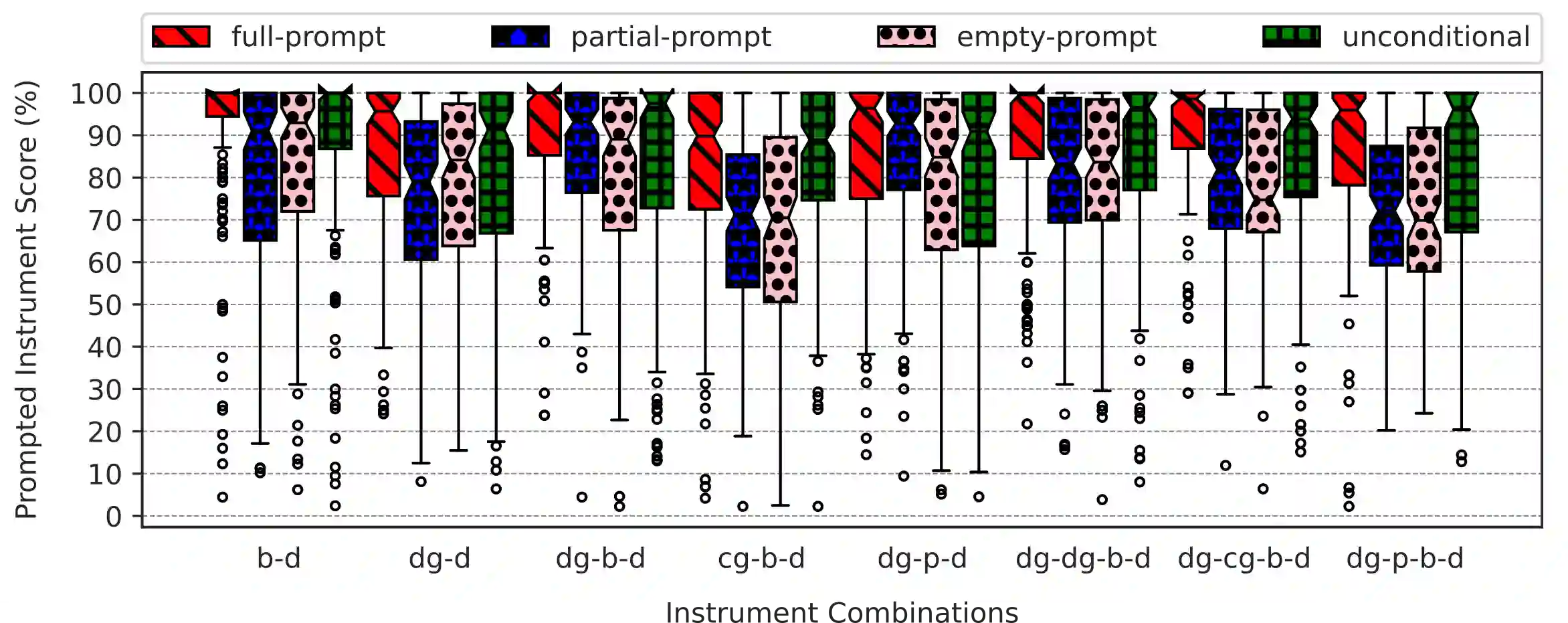

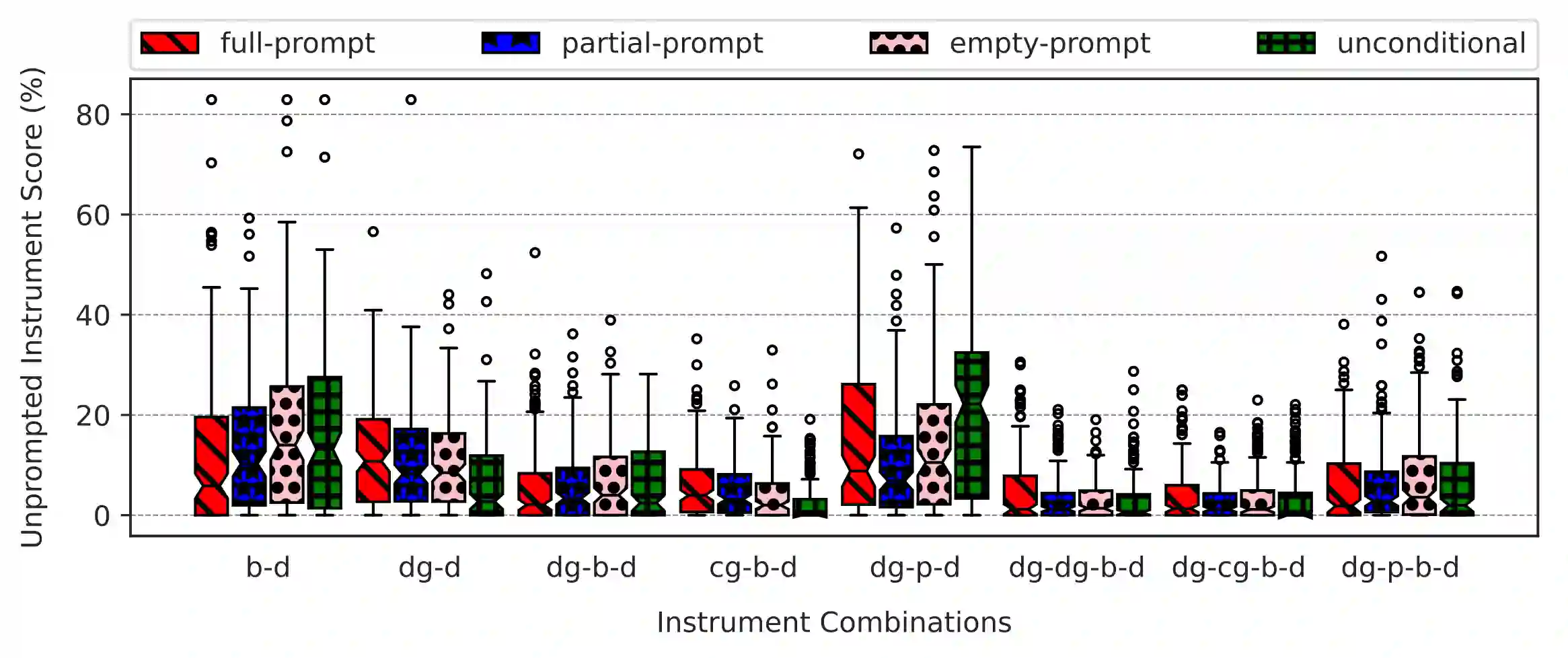

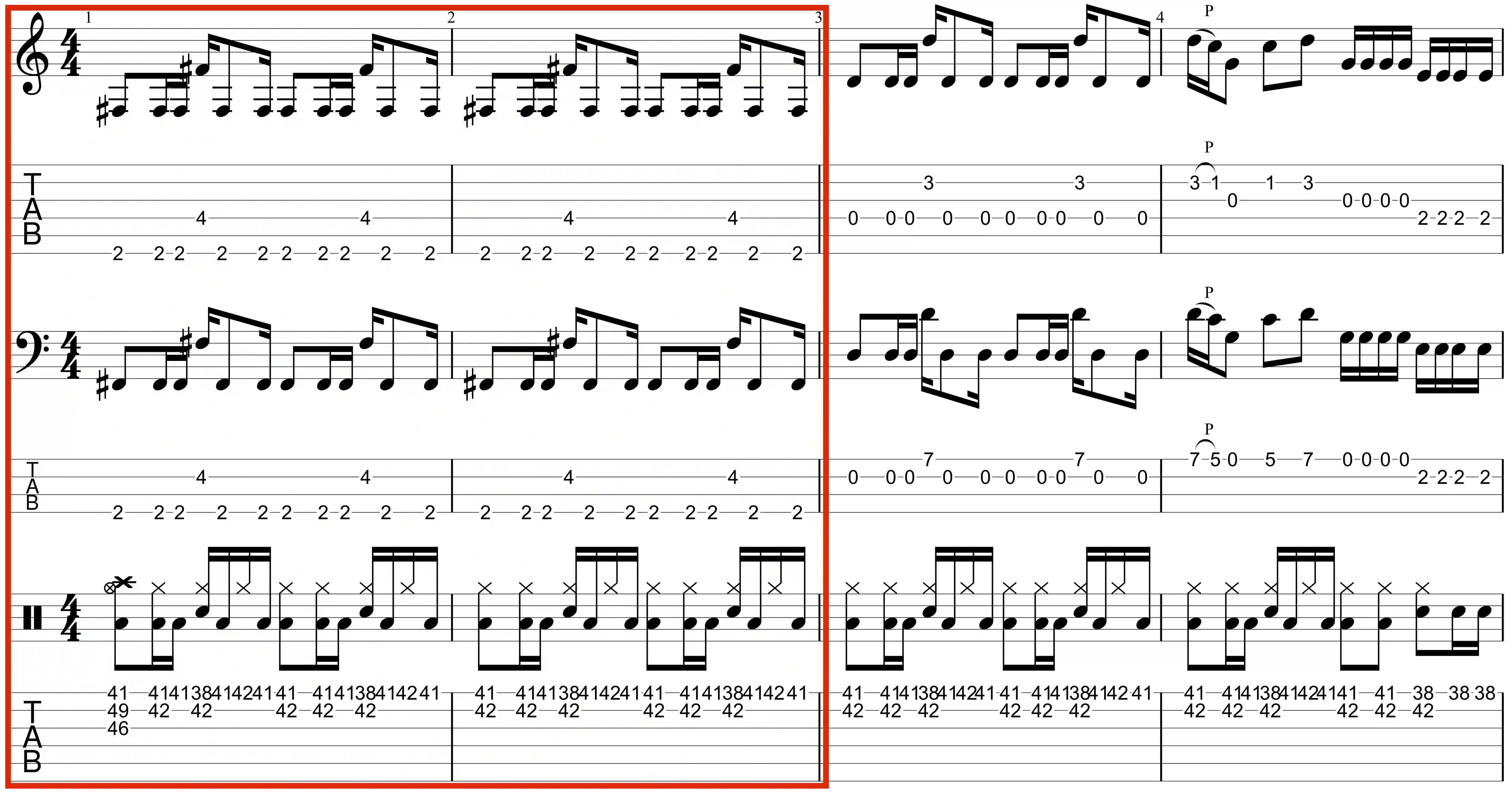

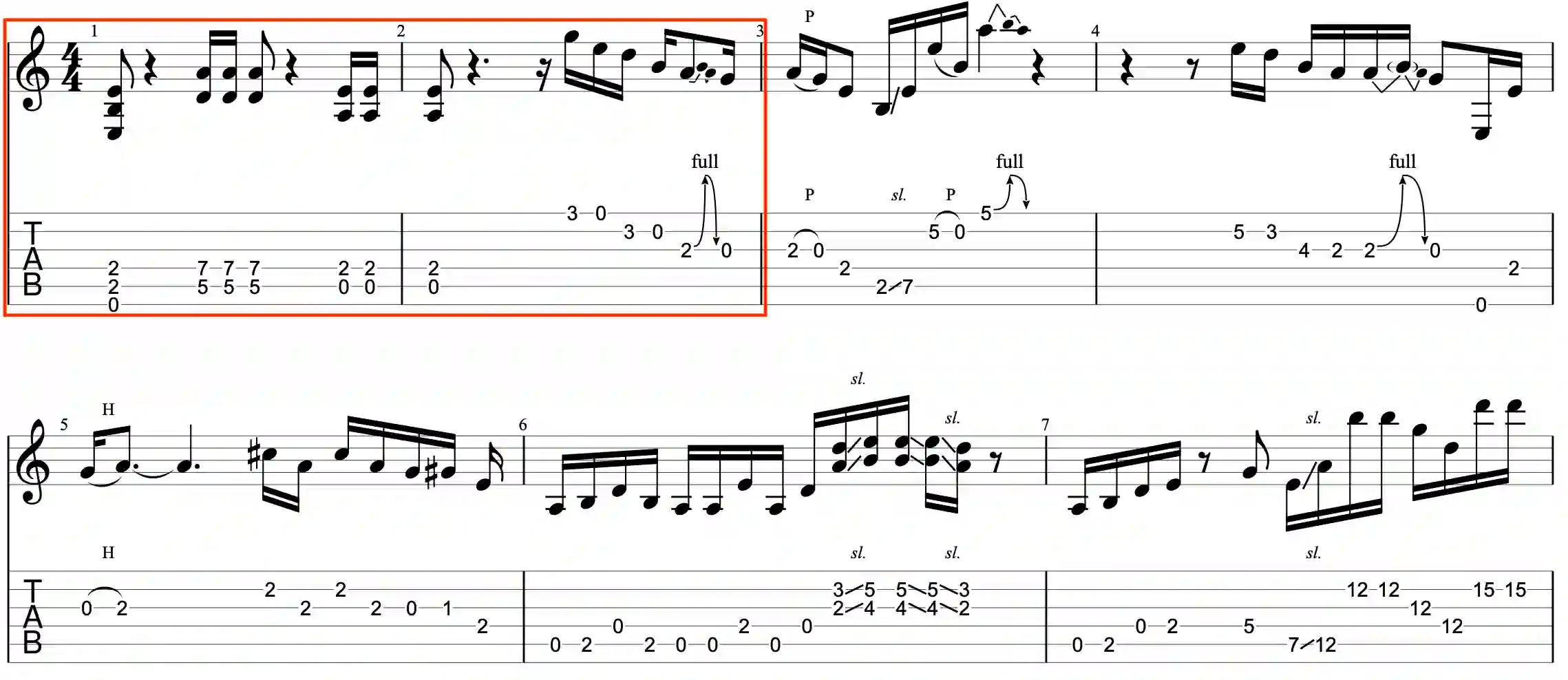

Recently, symbolic music generation with deep learning techniques has seen steady improvements. Most work on this topic focuses on MIDI representations, while less attention has been paid to symbolic music generation using guitar tablatures (tabs), which can be used to encode multiple instruments. In addition to rhythm and pitch, tabs include information on expressive techniques and fingerings for fretted string instruments. In this work, we use DadaGP, a corpus of over 26k songs in GuitarPro and token formats, for guitar tab music generation. We introduce methods to condition a Transformer-XL deep learning model to generate guitar tabs (GTR-CTRL) based on desired instrumentation (inst-CTRL) and genre (genre-CTRL). Special control tokens are prepended to each song in the training corpus. We assess the performance of the model with and without conditioning. We propose instrument presence metrics to assess the inst-CTRL model's response to a given instrumentation prompt. We trained a BERT model for downstream genre classification and used it to assess the results obtained with the genre-CTRL model. Statistical analyses show significant differences between the conditioned and unconditioned models. Overall, results indicate that the GTR-CTRL methods provide more flexibility and control for guitar-focused symbolic music generation than an unconditioned model.
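To make the conditioning idea concrete, here is a minimal sketch of prepending control tokens to a token sequence and of a simple instrument presence score. It is not the authors' implementation. The control-token strings (`genre:<name>`, `inst:<name>`) and the helper names are hypothetical. The note-token shape `<instrument>:note:...` (e.g. `distorted0:note:s6:f0`) is assumed to follow DadaGP-style tokens, and the presence score is only one plausible variant of an instrument presence metric, not necessarily the one proposed in the paper.

```python
from typing import List, Set


def add_control_tokens(song_tokens: List[str], genre: str, instruments: List[str]) -> List[str]:
    """Prepend hypothetical genre and instrument control tokens to a song's token sequence."""
    # Assumed control-token formats; the real GTR-CTRL token strings may differ.
    controls = [f"genre:{genre}"] + [f"inst:{name}" for name in instruments]
    return controls + song_tokens


def instruments_in_tokens(tokens: List[str]) -> Set[str]:
    """Collect instrument names from note tokens assumed to look like '<instrument>:note:...'."""
    found = set()
    for tok in tokens:
        parts = tok.split(":")
        # Only note events count as an instrument being present.
        if len(parts) >= 2 and parts[1] == "note":
            found.add(parts[0])
    return found


def instrument_presence(prompt_instruments: List[str], generated_tokens: List[str]) -> float:
    """Fraction of prompted instruments that play at least one note in the generated tokens."""
    if not prompt_instruments:
        raise ValueError("prompt must request at least one instrument")
    present = instruments_in_tokens(generated_tokens)
    requested = set(prompt_instruments)
    return len(requested & present) / len(requested)
```

For example, prompting with `["distorted0", "bass", "drums"]` and generating a piece in which only the distorted guitar and the bass play notes would give a presence score of 2/3. Prepending (rather than interleaving) the control tokens lets an autoregressive model such as Transformer-XL attend to them while generating every subsequent token.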