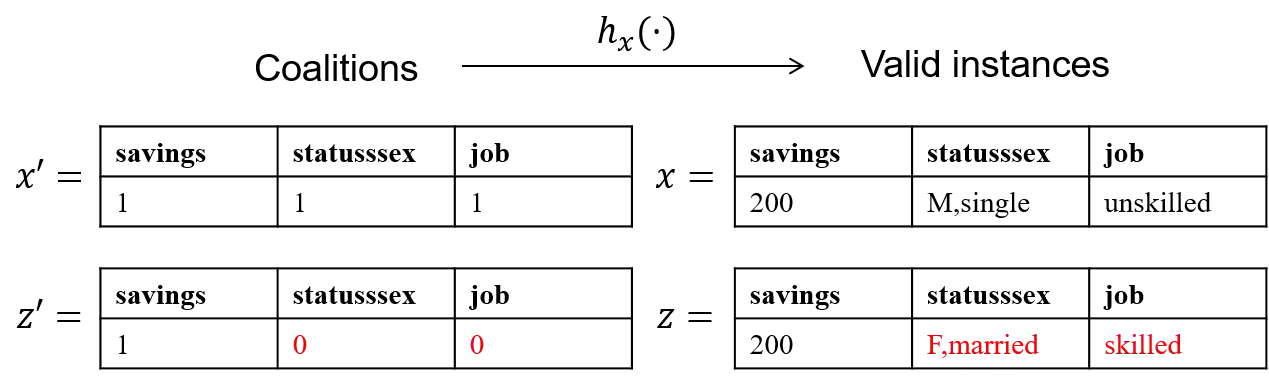

Unintended biases in machine learning (ML) models are among the major concerns that must be addressed to maintain public trust in ML. In this paper, we address the process fairness of ML models, which consists of reducing the dependence of models on sensitive features without compromising their performance. We revisit FixOut, a framework inspired by the "fairness through unawareness" approach to building fairer models. We introduce several improvements, such as automating the choice of FixOut's parameters. Moreover, whereas FixOut was originally proposed to improve the fairness of ML models on tabular data, we demonstrate that its workflow is also feasible for models on textual data. We present several experimental results showing that FixOut improves process fairness in different classification settings.
