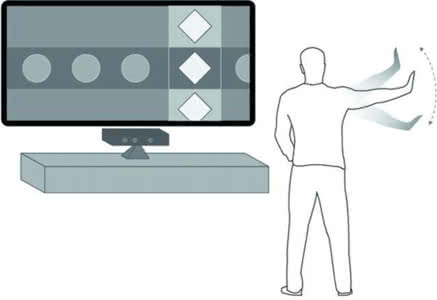

Consumers often react expressively to products such as food samples, perfume, jewelry, sunglasses, and clothing accessories. This research presents a multimodal affect recognition system that classifies whether a consumer likes or dislikes a product tested at a counter or kiosk. It does this by analyzing the consumer's facial expression, body posture, hand gestures, and voice after the product is tested. The system uses Kinect for Windows, a depth-capable camera and microphone system. An emotion identification engine analyzes the images and voice to determine the customer's affective state. Each image is segmented using skin color and adaptive thresholding. The face, body, and hands are detected with the Haar cascade classifier. Canny edges are computed, and the lip, body, and hand contours are extracted using spatial filtering. The features are the edge count and edge orientation around the mouth, cheeks, eyes, shoulders, and fingers, together with the locations of the edges. Classification is performed by an emotion template mapping algorithm and by a classifier trained with support vector machines. The real-time performance, accuracy, and feasibility of multimodal affect recognition for feedback assessment are evaluated.
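The feature step (edge count and edge orientation inside facial and body regions) can be illustrated roughly as follows. This is a hypothetical pure-Python sketch, not the system's implementation. A thresholded Sobel gradient stands in for the Canny detector, with no non-maximum suppression or hysteresis. The region boxes, which would presumably come from the Haar cascade detections, are assumptions, as are the threshold of 100 and the four orientation bins. Edge locations, which the abstract also lists as features, are not encoded here.

```python
import math
from typing import Dict, List, Tuple

Image = List[List[float]]        # grayscale image: rows of pixel intensities
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def sobel(img: Image, y: int, x: int) -> Tuple[float, float]:
    """Horizontal (gx) and vertical (gy) Sobel responses at interior pixel (y, x)."""
    gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
          - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
    gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
          - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
    return gx, gy


def region_edge_features(img: Image, box: Box, threshold: float = 100.0,
                         bins: int = 4) -> List[float]:
    """Return [edge_count, hist_bin_0, ..., hist_bin_{bins-1}] for one region.

    A pixel counts as an edge when its Sobel gradient magnitude exceeds
    `threshold` (a simplification of Canny). The gradient direction at each
    edge pixel is folded into [0, 180) degrees and binned into `bins` bins.
    Image border pixels are skipped because Sobel needs a 3x3 neighbourhood.
    """
    top, left, bottom, right = box
    height, width = len(img), len(img[0])
    count = 0
    hist = [0] * bins
    for y in range(max(top, 1), min(bottom, height - 1)):
        for x in range(max(left, 1), min(right, width - 1)):
            gx, gy = sobel(img, y, x)
            if math.hypot(gx, gy) > threshold:
                count += 1
                angle = math.degrees(math.atan2(gy, gx)) % 180.0
                # The outer modulo guards against angle rounding up to 180.0.
                hist[int(angle // (180.0 / bins)) % bins] += 1
    return [float(count)] + [float(c) for c in hist]


def feature_vector(img: Image, regions: Dict[str, Box]) -> List[float]:
    """Concatenate per-region features in sorted region-name order."""
    features: List[float] = []
    for name in sorted(regions):
        features.extend(region_edge_features(img, regions[name]))
    return features
```

For example, `feature_vector(gray, {"mouth": (40, 20, 60, 80), "eyes": (10, 15, 30, 85)})` would return ten numbers, five per region, with `"eyes"` first because regions are taken in alphabetical order. The box coordinates in that call are made up.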

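The abstract does not specify the kernel or the training procedure for the support vector machine stage. The sketch below assumes a linear SVM trained with the Pegasos stochastic sub-gradient method. It takes per-customer feature vectors (for example, edge counts) with labels +1 for "likes" and -1 for "dislikes". It stands in for whatever SVM library the system actually used, and the names `train_linear_svm` and `predict` and the parameter values are hypothetical.

```python
import random
from typing import List, Sequence


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 200,
                     seed: int = 0) -> List[float]:
    """Train a linear SVM with Pegasos (stochastic sub-gradient descent).

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * <w, x_i>)).
    Labels must be +1 (like) or -1 (dislike). A constant 1.0 feature is
    appended so the last weight acts as a bias; as a simplification the bias
    is regularized too, and Pegasos' optional projection step is omitted.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            # Sub-gradient step on the L2 regularizer shrinks every weight.
            w = [(1.0 - eta * lam) * wj for wj in w]
            if margin < 1.0:  # hinge loss is active for this sample
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Return +1 (like) or -1 (dislike) from the sign of the linear score."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0.0 else -1
```

A linear model keeps the sketch short. If the like and dislike classes are not linearly separable in the chosen features, a kernel SVM would be the more natural choice.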