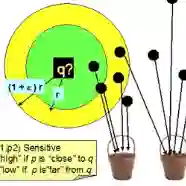

Deep and wide neural networks can fit very complex functions, but dense models are becoming prohibitively expensive at inference time. One promising way to mitigate this is to use networks that activate only a sparse subgraph for each input. The subgraph is chosen by a data-dependent routing function that enforces a fixed mapping of inputs to subnetworks (e.g., the Mixture of Experts (MoE) paradigm in Switch Transformers). However, prior work is largely empirical, and while existing routing functions work well in practice, they do not lead to theoretical guarantees on approximation ability. We aim to provide a theoretical explanation for the power of sparse networks. As our first contribution, we present a formal model of data-dependent sparse networks that captures salient aspects of popular architectures. We then introduce a routing function based on locality-sensitive hashing (LSH) that enables us to reason about how well sparse networks approximate target functions. After representing LSH-based sparse networks with our model, we prove that sparse networks can match the approximation power of dense networks on Lipschitz functions. Applying LSH to the input vectors means that the experts interpolate the target function in different subregions of the input space. To support our theory, we define various datasets based on Lipschitz target functions, and we show that sparse networks give a favorable trade-off between the number of active units and approximation quality.
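To make the routing idea concrete, the following is a minimal sketch of LSH-based routing, not the construction analyzed in the paper. It assumes a random-hyperplane hash family and constant-valued experts (each expert stores the mean target of its bucket); the function names `make_hyperplanes`, `route`, `fit_experts`, `predict`, and the toy `target` are all hypothetical and chosen only for illustration.

```python
import math
import random


def make_hyperplanes(num_bits, dim, seed=0):
    """Draw random hyperplanes (normal, offset). Assumed LSH family, for illustration."""
    rng = random.Random(seed)
    return [
        ([rng.gauss(0.0, 1.0) for _ in range(dim)], rng.uniform(-1.0, 1.0))
        for _ in range(num_bits)
    ]


def route(x, hyperplanes):
    """Map input x to an expert index: one sign bit per hyperplane."""
    index = 0
    for normal, offset in hyperplanes:
        side = sum(w * xi for w, xi in zip(normal, x)) + offset >= 0.0
        index = (index << 1) | int(side)
    return index


def fit_experts(xs, ys, hyperplanes):
    """Fit one constant expert per non-empty bucket (the bucket's mean target)."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        k = route(x, hyperplanes)
        sums[k] = sums.get(k, 0.0) + y
        counts[k] = counts.get(k, 0) + 1
    return {k: sums[k] / counts[k] for k in sums}


def predict(x, experts, hyperplanes, default=0.0):
    """Only the expert selected by the hash is evaluated for x."""
    return experts.get(route(x, hyperplanes), default)


def target(x):
    """A toy Lipschitz target function on R^2."""
    return math.sin(x[0]) + abs(x[1])


if __name__ == "__main__":
    rng = random.Random(1)
    train = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(2000)]
    planes = make_hyperplanes(num_bits=6, dim=2)
    experts = fit_experts(train, [target(x) for x in train], planes)
    test = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(500)]
    err = sum(abs(predict(x, experts, planes) - target(x)) for x in test) / len(test)
    print(f"experts used: {len(experts)}, mean abs error: {err:.4f}")
```

Because nearby inputs tend to share a hash value, each expert only has to approximate the target on a small subregion, and a single expert is active per input. Increasing `num_bits` produces more, smaller regions, which is one way to explore the trade-off between the number of experts and approximation quality in this toy setting.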
