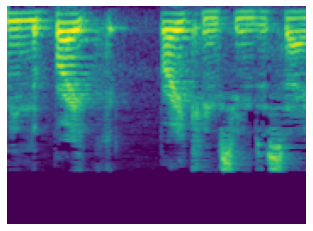

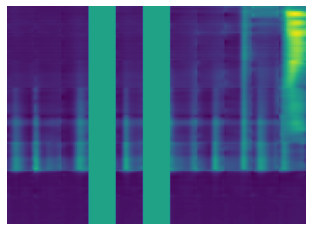

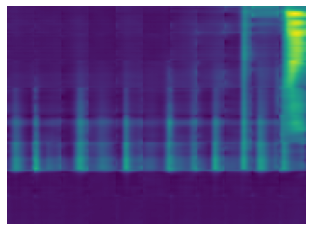

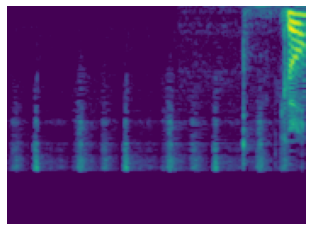

In this work, we present the Textless Vision-Language Transformer (TVLT), where homogeneous transformer blocks take raw visual and audio inputs for vision-and-language representation learning with minimal modality-specific design, and do not use text-specific modules such as tokenization or automatic speech recognition (ASR). TVLT is trained by reconstructing masked patches of continuous video frames and audio spectrograms (masked autoencoding) and contrastive modeling to align video and audio. TVLT attains performance comparable to its text-based counterpart on various multimodal tasks, such as visual question answering, image retrieval, video retrieval, and multimodal sentiment analysis, with 28x faster inference speed and only 1/3 of the parameters. Our findings suggest the possibility of learning compact and efficient visual-linguistic representations from low-level visual and audio signals without assuming the prior existence of text. Our code and checkpoints are available at: https://github.com/zinengtang/TVLT
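The masked-autoencoding objective mentioned above can be illustrated with a minimal sketch. It is not the TVLT implementation: the helper names `random_mask` and `masked_mse`, the 0.75 mask ratio, the toy patch sizes, and the all-zero "prediction" are assumptions chosen for illustration. It shows only the general idea of hiding a random subset of patches and scoring the reconstruction on the hidden patches alone.

```python
import random

def random_mask(num_patches, mask_ratio, rng):
    """Return the sorted indices of patches hidden from the encoder."""
    num_masked = int(num_patches * mask_ratio)
    return sorted(rng.sample(range(num_patches), num_masked))

def masked_mse(target, prediction, masked):
    """Mean squared error computed only over the masked patches."""
    total, count = 0.0, 0
    for i in masked:
        for t, p in zip(target[i], prediction[i]):
            total += (t - p) ** 2
            count += 1
    return total / count if count else 0.0

# Toy data: 8 patches of 4 values each, standing in for flattened
# video-frame or spectrogram patches (sizes are assumptions).
rng = random.Random(0)
patches = [[rng.random() for _ in range(4)] for _ in range(8)]

# Hide 75% of the patches (assumed ratio).
masked = random_mask(len(patches), 0.75, rng)

# Stand-in for a decoder output: an all-zero reconstruction.
zeros = [[0.0] * 4 for _ in patches]
loss = masked_mse(patches, zeros, masked)
```

In a real model the prediction would come from a decoder that sees only the visible patches. The contrastive video-audio alignment term that the abstract also mentions is not covered by this sketch.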
