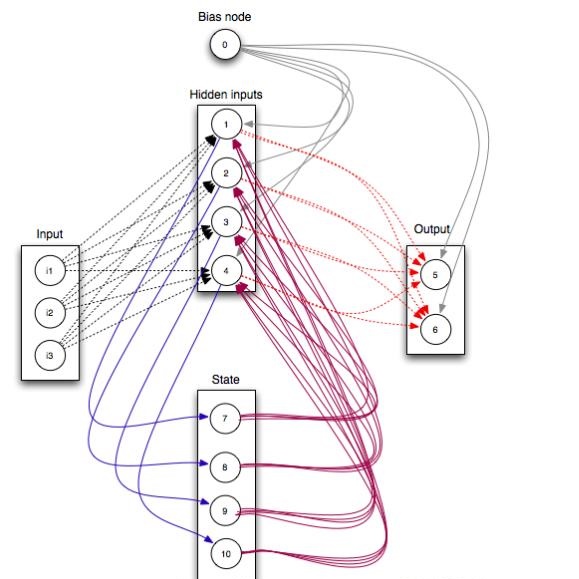

In feed-forward neural networks, dataset-free weight-initialization methods such as LeCun, Xavier (or Glorot), and He initialization have been developed. These methods randomly determine the initial values of the weight parameters from specific distributions (e.g., Gaussian or uniform distributions) without using the training dataset. To the best of the authors' knowledge, no such dataset-free weight-initialization method has yet been developed for restricted Boltzmann machines (RBMs), which are probabilistic neural networks consisting of two layers. In this study, we derive a dataset-free weight-initialization method for Bernoulli–Bernoulli RBMs based on a statistical mechanical analysis. In the proposed method, the weight parameters are drawn from a zero-mean Gaussian distribution. Its standard deviation is optimized under our hypothesis that a standard deviation yielding a larger layer correlation (LC) between the two layers improves learning efficiency. The expression for the LC is derived through statistical mechanical analysis, and the optimal standard deviation corresponds to the maximum point of the LC. In a specific case (i.e., when the two layers have the same size, the random variables of both layers are $\{-1,1\}$-binary, and all bias parameters are zero), the proposed method is identical to Xavier initialization. The validity of the proposed method is demonstrated in numerical experiments using a toy dataset and real-world datasets.
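
The abstract states that, in one special case, the proposed initialization coincides with Xavier initialization. The sketch below illustrates only that special case. It assumes NumPy, the Glorot-normal standard deviation $\sigma = \sqrt{2/(n_v + n_h)}$ (which reduces to $1/\sqrt{N}$ when both layers have $N$ units), and the hypothetical helper names `xavier_std` and `init_rbm_params`. The general optimal $\sigma$, obtained from the maximum point of the LC, is not reproduced here.

```python
import math

import numpy as np


def xavier_std(n_visible: int, n_hidden: int) -> float:
    # Glorot/Xavier-normal standard deviation: sqrt(2 / (fan_in + fan_out)).
    # Assumption: this is only the special case named in the abstract, not the
    # paper's general LC-maximizing standard deviation.
    return math.sqrt(2.0 / (n_visible + n_hidden))


def init_rbm_params(n_visible: int, n_hidden: int, rng=None):
    """Hypothetical helper: zero-mean Gaussian weights and zero biases for an RBM."""
    if rng is None:
        rng = np.random.default_rng()
    sigma = xavier_std(n_visible, n_hidden)
    # Weight matrix W has shape (n_visible, n_hidden), entries ~ N(0, sigma^2).
    W = rng.normal(loc=0.0, scale=sigma, size=(n_visible, n_hidden))
    b = np.zeros(n_visible)  # visible-layer biases, all zero in the special case
    c = np.zeros(n_hidden)   # hidden-layer biases, all zero in the special case
    return W, b, c


if __name__ == "__main__":
    W, b, c = init_rbm_params(100, 100, rng=np.random.default_rng(0))
    print(W.shape, xavier_std(100, 100), W.std())
```

With equal layer sizes of 100, $\sigma = \sqrt{2/200} = 0.1 = 1/\sqrt{100}$, so the empirical standard deviation of `W` should be close to 0.1. Passing an explicit `np.random.Generator` keeps the initialization reproducible.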
