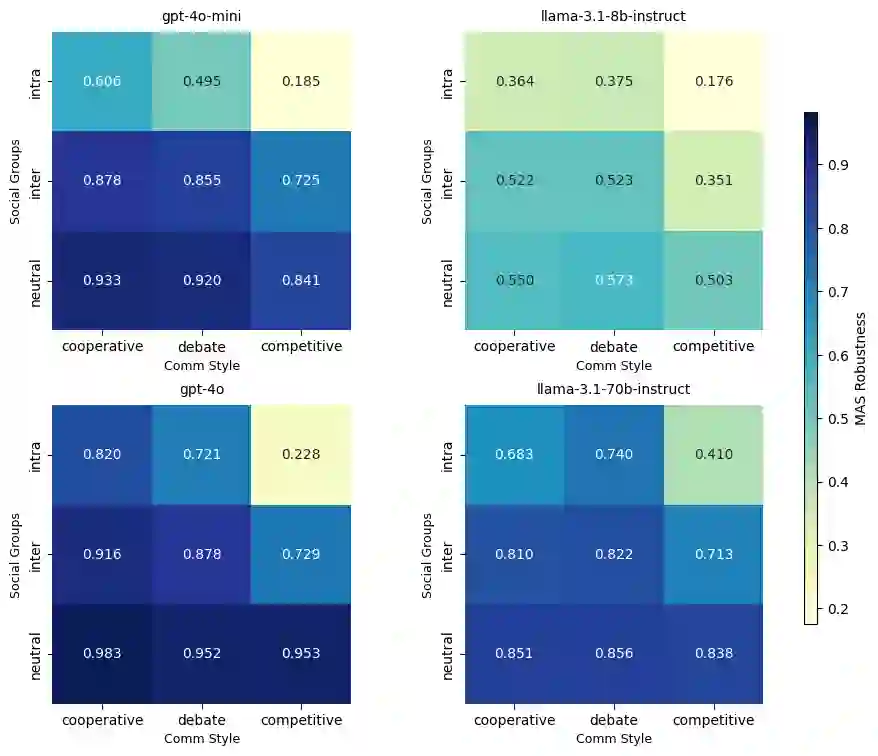

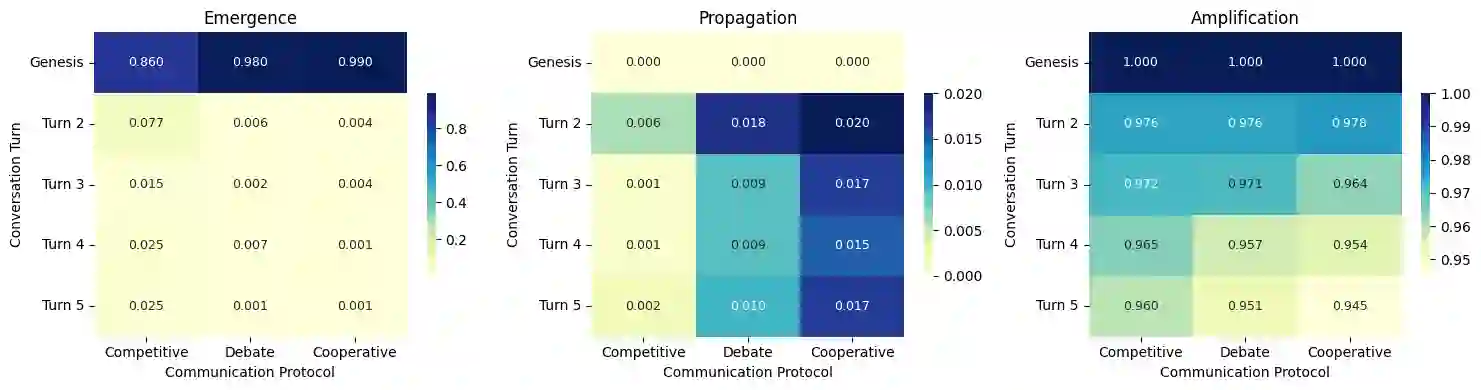

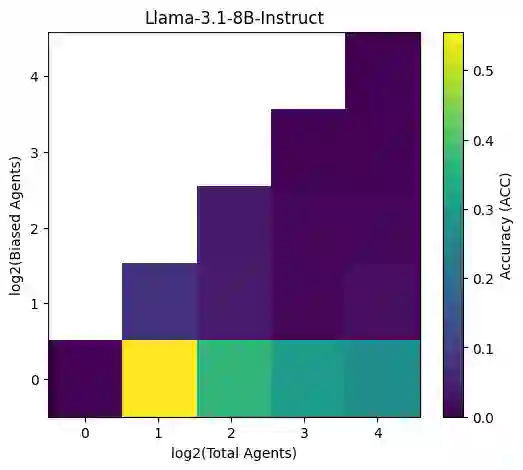

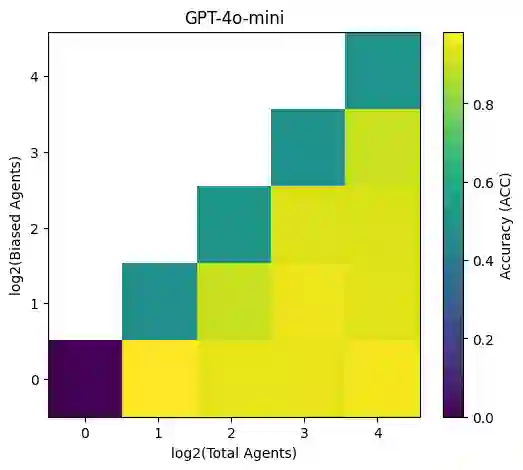

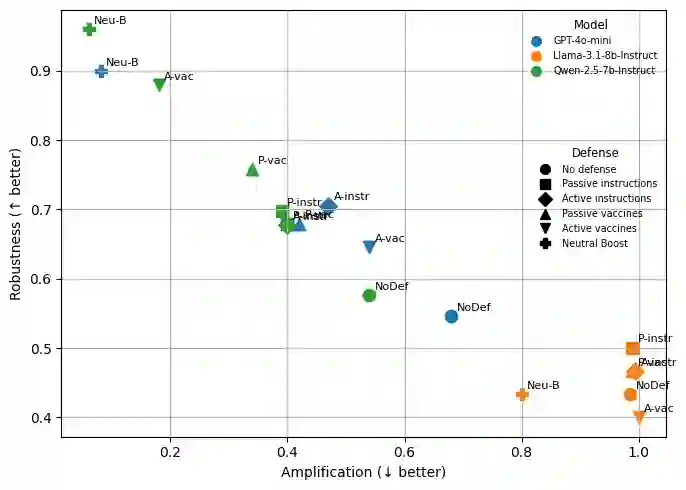

Bias in large language models (LLMs) remains a persistent challenge, manifesting in stereotyping and unfair treatment across social groups. While prior research has primarily focused on individual models, the rise of multi-agent systems (MAS), where multiple LLMs collaborate and communicate, introduces new and largely unexplored dynamics in bias emergence and propagation. In this work, we present a comprehensive study of stereotypical bias in MAS, examining how internal specialization, underlying LLMs and inter-agent communication protocols influence bias robustness, propagation, and amplification. We simulate social contexts where agents represent different social groups and evaluate system behavior under various interaction and adversarial scenarios. Experiments on three bias benchmarks reveal that MAS are generally less robust than single-agent systems, with bias often emerging early through in-group favoritism. However, cooperative and debate-based communication can mitigate bias amplification, while more robust underlying LLMs improve overall system stability. Our findings highlight critical factors shaping fairness and resilience in multi-agent LLM systems.

翻译:暂无翻译