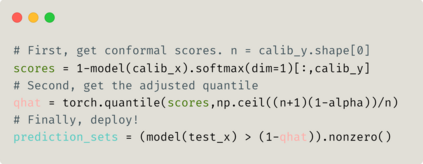

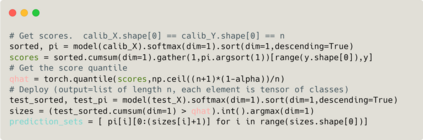

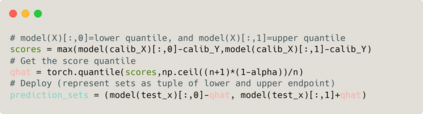

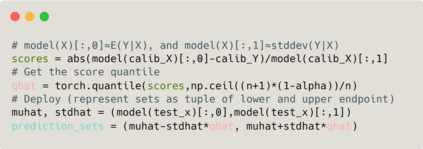

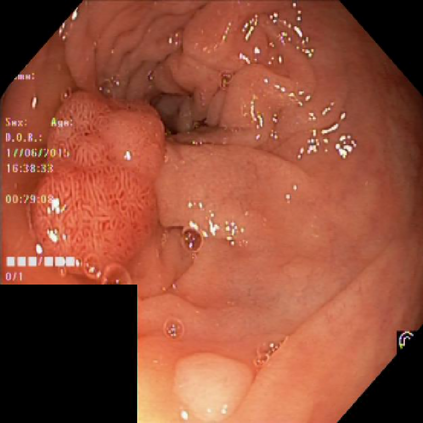

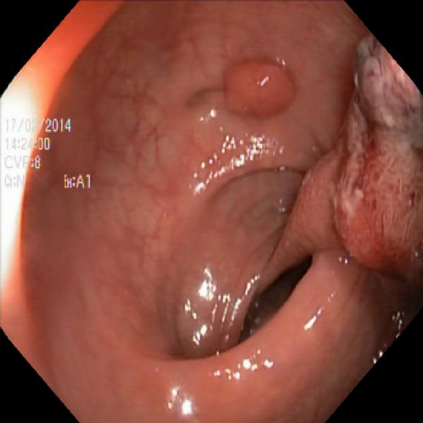

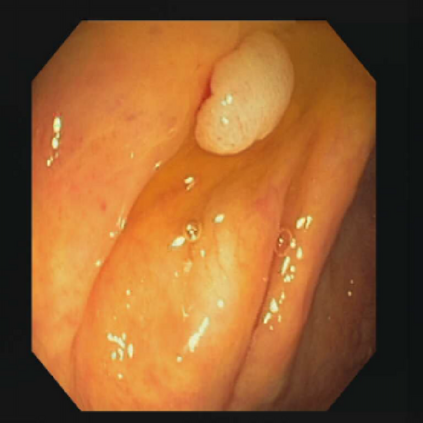

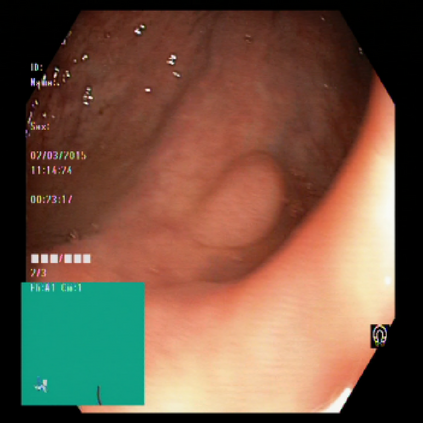

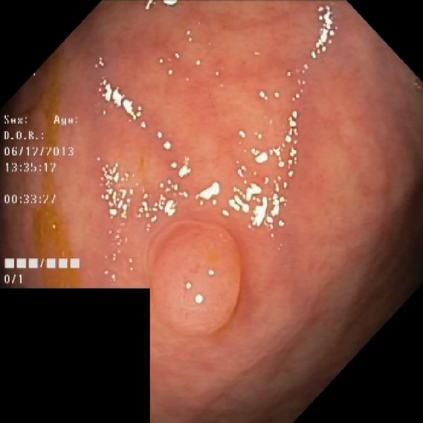

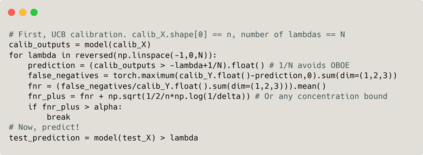

Black-box machine learning methods are now routinely used in high-risk settings, like medical diagnostics, which demand uncertainty quantification to avoid consequential model failures. Distribution-free uncertainty quantification (distribution-free UQ) is a user-friendly paradigm for creating statistically rigorous confidence intervals/sets for such predictions. Critically, the intervals/sets are valid without distributional assumptions or model assumptions, and they come with explicit guarantees that hold with finitely many data points. Moreover, they adapt to the difficulty of the input; when the input example is difficult, the uncertainty intervals/sets are large, signaling that the model might be wrong. Without much work, one can use distribution-free methods on any underlying algorithm, such as a neural network, to produce confidence sets guaranteed to contain the ground truth with a user-specified probability, such as 90%. Indeed, the methods are easy to understand and general, applying to many modern prediction problems arising in the fields of computer vision, natural language processing, deep reinforcement learning, and so on. This hands-on introduction is aimed at a reader interested in the practical implementation of distribution-free UQ, including conformal prediction and related methods, who is not necessarily a statistician. We will include many explanatory illustrations, examples, and code samples in Python, with PyTorch syntax. The goal is to give the reader a working understanding of distribution-free UQ, allowing them to put confidence intervals on their algorithms, with one self-contained document.
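To make the idea of a confidence set with a user-specified coverage level concrete, here is a minimal sketch of split conformal prediction for classification. It is an illustration under stated assumptions, not the document's own implementation: the helper names (`conformal_quantile`, `fake_model`) are hypothetical, the "model" is synthetic random data standing in for a real classifier's softmax outputs, the score (one minus the probability assigned to the true class) is just one common choice, and NumPy is used instead of PyTorch to keep the example dependency-light. The coverage guarantee also assumes the calibration and test points are exchangeable (for example, i.i.d.).

```python
import numpy as np


def conformal_quantile(cal_scores, alpha):
    """Return the ceil((n + 1) * (1 - alpha))-th smallest calibration score.

    If that rank exceeds n (too few calibration points for the requested
    level), return +inf so that every label is included in the set.
    """
    n = len(cal_scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        return np.inf
    return np.sort(cal_scores)[k - 1]


rng = np.random.default_rng(0)
n_cal, n_test, n_classes, alpha = 1000, 2000, 5, 0.1


def fake_model(n):
    """Hypothetical stand-in for a classifier: noisy softmax outputs and labels."""
    labels = rng.integers(0, n_classes, size=n)
    logits = rng.normal(size=(n, n_classes))
    logits[np.arange(n), labels] += 2.0  # make the true class more likely
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    return probs, labels


cal_probs, cal_labels = fake_model(n_cal)
test_probs, test_labels = fake_model(n_test)

# 1. Conformal score on held-out calibration data: 1 - probability of the true class.
cal_scores = 1.0 - cal_probs[np.arange(n_cal), cal_labels]

# 2. Threshold: a finite-sample-corrected quantile of the calibration scores.
qhat = conformal_quantile(cal_scores, alpha)

# 3. Prediction set: every label whose score does not exceed the threshold.
sets = (1.0 - test_probs) <= qhat  # boolean array, shape (n_test, n_classes)

# Empirical check on the synthetic test data.
coverage = sets[np.arange(n_test), test_labels].mean()
avg_size = sets.sum(axis=1).mean()
print(f"empirical coverage: {coverage:.3f}, average set size: {avg_size:.2f}")
```

With `alpha = 0.1`, the empirical coverage should land near 90%, up to randomness in the calibration and test samples. The sets grow when the model's probabilities are spread across several classes, which is the adaptivity to input difficulty mentioned above.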
