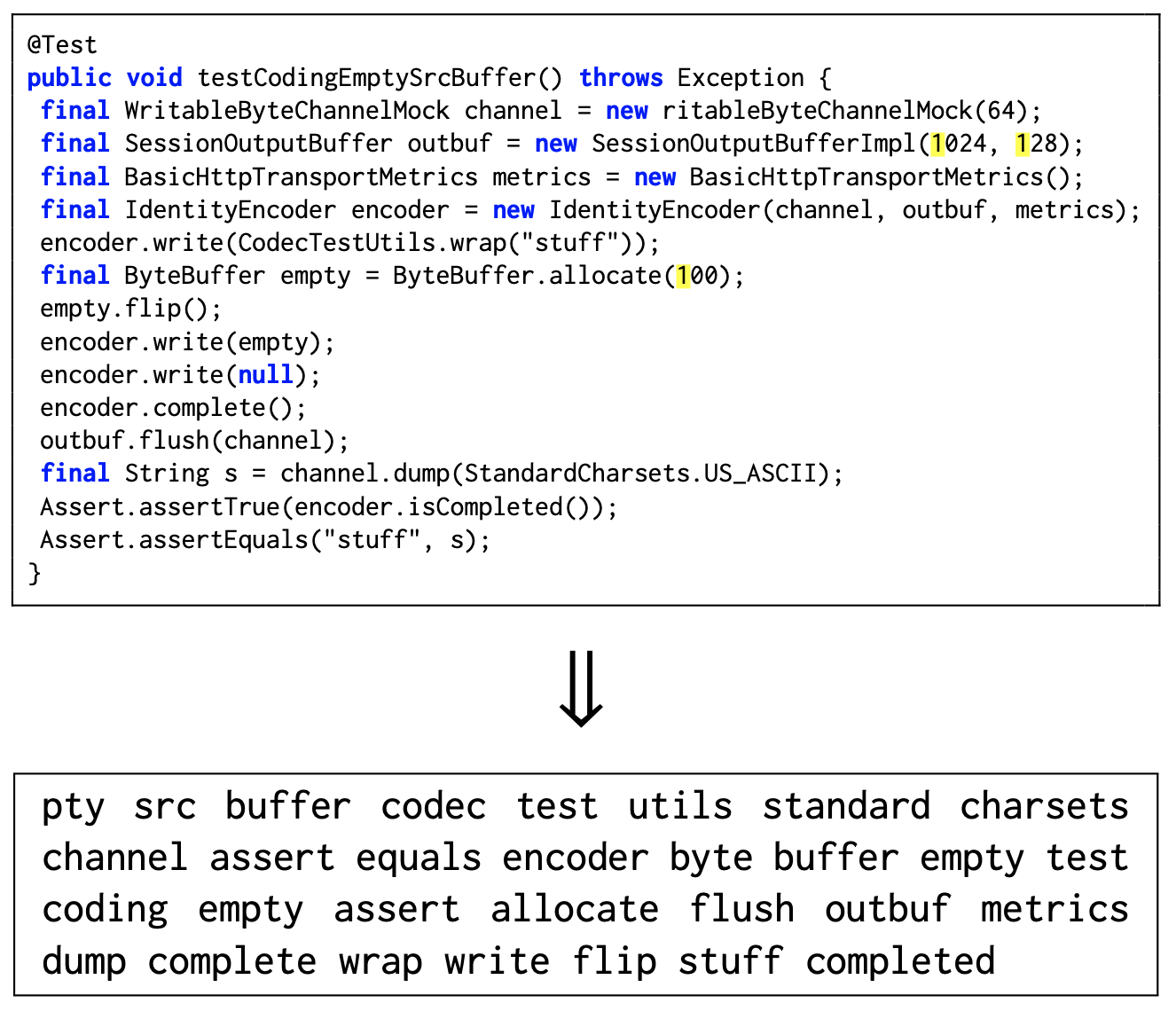

Software systems are continuously evolved and delivered with high quality thanks to the widespread adoption of automated tests. A recurring issue that undermines this scenario is the presence of flaky tests: test cases that may pass or fail non-deterministically. A promising approach, though one that still lacks empirical evidence, is to collect static data from automated tests and use it to predict their flakiness. In this paper, we conducted an empirical study to assess the use of code identifiers to predict test flakiness. To do so, we first replicated most parts of the previous study by Pinto et al. (MSR 2020). We extended this replication by using a different ML Python platform (Scikit-learn) and by adding other learning algorithms to the analyses. Then, we validated the performance of the trained models using datasets with other flaky tests and from different projects. We successfully replicated the results of Pinto et al. (2020), with minor differences when using Scikit-learn; the additional algorithms performed similarly to the ones used previously. Concerning the validation, we observed that the recall of the trained models was lower, and that the classifiers showed decreases of varying magnitude. This was observed in both intra-project and inter-project test flakiness prediction.
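
The identifier-based features and the recall metric mentioned above can be illustrated with a small plain-Python sketch. It is a minimal illustration under stated assumptions, not the preprocessing used by Pinto et al. or by this replication: identifiers are pulled from test source with a rough regular expression, split on underscores and camelCase boundaries, lowercased, and filtered against a small, hypothetical keyword list. The helper names (`identifier_tokens`, `bag_of_words`, `recall`) are illustrative only.

```python
import re
from collections import Counter

# Rough identifier pattern for Java-like test code (assumption, not the study's tokenizer).
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
# Splits camelCase/PascalCase, e.g. "waitForServer" -> "wait", "For", "Server"
# and "HTTPServer" -> "HTTP", "Server".
CAMEL_PART = re.compile(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|[0-9]+")

# Hypothetical, deliberately small keyword filter used only for illustration.
JAVA_KEYWORDS = {"public", "void", "new", "int", "return", "throws", "class", "static", "final"}


def identifier_tokens(test_source: str) -> list[str]:
    """Extract identifiers from test code and split them into lowercase sub-words."""
    tokens = []
    for ident in IDENTIFIER.findall(test_source):
        if ident in JAVA_KEYWORDS:
            continue  # skip language keywords; they carry no test-specific vocabulary
        for part in ident.split("_"):
            tokens.extend(p.lower() for p in CAMEL_PART.findall(part))
    return tokens


def bag_of_words(test_source: str) -> Counter:
    """Count sub-word occurrences; each test case becomes one sparse feature vector."""
    return Counter(identifier_tokens(test_source))


def recall(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of truly flaky tests (label 1) that the model also labels as flaky."""
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    actual_pos = sum(1 for t in y_true if t == 1)
    return true_pos / actual_pos if actual_pos else 0.0


if __name__ == "__main__":
    src = """
    @Test
    public void testWaitForServer() throws Exception {
        Thread.sleep(1000);
        assertTrue(server.isRunning());
    }
    """
    print(bag_of_words(src).most_common(3))
    print(recall([1, 1, 0, 1], [1, 0, 0, 1]))
```

In a Scikit-learn pipeline, a tokenizer like this could plausibly be passed as the `analyzer` callable of `sklearn.feature_extraction.text.CountVectorizer`, with the resulting matrix fed to a classifier such as `RandomForestClassifier` and evaluated with `sklearn.metrics.recall_score`; which vectorizer, classifiers, and settings the study actually used is not stated here. Recall is the relevant metric for the validation result above: a model that misses more truly flaky tests in a new dataset or project shows a lower recall, even if its precision is unchanged.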

翻译:由于广泛采用自动化测试,软件系统不断得到开发并高质量地交付。影响这一假设情景的一个反复出现的问题是存在片面测试,这是一个可能非决定性地通过或失败的测试案例。一个很有希望但又缺乏更多经验证据的办法是收集自动测试的静态数据,并利用这些数据预测其不毛性。在本文件中,我们进行了一项经验研究,以评估使用代码识别器来预测测试不适性。为了这样做,我们首先复制了先前对Pinto~et~al.~(MSR~202020)的研究的大部分内容。通过使用不同的 ML Python 平台(Scikit-learn)和在分析中添加不同的学习算法,推广了这一复制。然后,我们用其他片度测试和不同项目的数据组验证了经过培训的模型的性能。我们成功地复制了Pinto~et~al.~(2020)的结果,使用Sciki-learn的微差异;不同的算法与以前使用的方法相似。关于验证,我们注意到,在所观测的内部预测中,所观测到的模型和试测范围都较小。