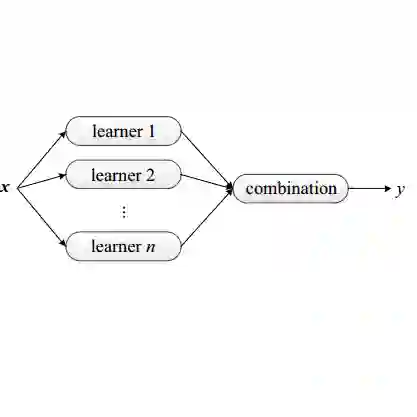

Federated learning (FL) has emerged as an effective approach to addressing consumer privacy needs. FL has been successfully applied to certain machine learning tasks, such as training smart keyboard models and keyword spotting. Despite this initial success, many important deep learning (DL) use cases, such as ranking and recommendation, have so far remained out of reach for on-device learning. A key obstacle to practical FL adoption for DL-based ranking and recommendation is their prohibitive resource requirements, which modern mobile systems cannot satisfy. We propose Federated Ensemble Learning (FEL) to tackle the large memory requirements of DL ranking and recommendation tasks. FEL enables on-device training of large-scale ranking and recommendation models by simultaneously training multiple model versions on disjoint clusters of client devices. FEL then integrates the trained sub-models through an over-arch layer into an ensemble model hosted on the server. Our experiments demonstrate that FEL improves model quality by 0.43% to 2.31% over traditional on-device federated learning, a significant gain for ranking and recommendation use cases.
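
To make the training flow concrete, here is a minimal Python sketch of the FEL idea as the abstract describes it: clients are split into disjoint clusters, each cluster trains its own sub-model with federated learning, and a server-side over-arch layer combines the sub-models' outputs into one ensemble. Everything concrete below is an assumption for illustration, not the authors' implementation: tiny logistic-regression sub-models stand in for the DL ranking models, plain FedAvg is used inside each cluster, clients are assigned to clusters round-robin, and the over-arch is a logistic layer fitted on a hypothetical server-held dataset (the abstract does not say how the over-arch is trained). The helper names (`partition`, `fedavg`, `train_over_arch`, `fel_predict`) are hypothetical.

```python
import math
import random

# Toy stand-ins (assumption): each "sub-model" is a logistic-regression
# scorer instead of a large DL ranking model.

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def local_sgd(weights, data, lr=0.1):
    """One client's local epoch of SGD on its private (x, y) pairs."""
    w = list(weights)
    for x, y in data:
        g = sigmoid(dot(w, x)) - y  # gradient of log-loss w.r.t. the logit
        w = [wi - lr * g * xi for wi, xi in zip(w, x)]
    return w

def fedavg(cluster, dim, rounds=20):
    """Plain FedAvg inside one cluster (assumption): data-size-weighted average."""
    w = [0.0] * dim
    for _ in range(rounds):
        updates = [(local_sgd(w, data), len(data)) for data in cluster]
        total = sum(n for _, n in updates)
        w = [sum(u[i] * n for u, n in updates) / total for i in range(dim)]
    return w

def partition(clients, k):
    """Split clients into k disjoint clusters (round-robin is an assumption)."""
    return [clients[i::k] for i in range(k)]

def sub_scores(sub_models, x):
    """Each sub-model's score for x, plus a constant 1.0 for the over-arch bias."""
    return [sigmoid(dot(w, x)) for w in sub_models] + [1.0]

def train_over_arch(sub_models, data, lr=0.1, epochs=50):
    """Fit a logistic over-arch layer on top of the frozen sub-model scores."""
    v = [0.0] * (len(sub_models) + 1)
    for _ in range(epochs):
        for x, y in data:
            s = sub_scores(sub_models, x)
            g = sigmoid(dot(v, s)) - y
            v = [vi - lr * g * si for vi, si in zip(v, s)]
    return v

def fel_predict(sub_models, v, x):
    """Ensemble prediction: the over-arch applied to the sub-model scores."""
    return sigmoid(dot(v, sub_scores(sub_models, x)))

def make_data(rng, n):
    """Synthetic, linearly separable data; the last feature is a constant bias input."""
    out = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        out.append(([x1, x2, 1.0], 1 if x1 + x2 > 0 else 0))
    return out

if __name__ == "__main__":
    rng = random.Random(0)
    clients = [make_data(rng, 20) for _ in range(12)]  # 12 clients, private data
    clusters = partition(clients, k=3)
    # Clusters are independent, so FEL can train these sub-models in parallel.
    sub_models = [fedavg(c, dim=3) for c in clusters]
    v = train_over_arch(sub_models, make_data(rng, 100))  # server-held data: assumption
    test = make_data(rng, 200)
    acc = sum((fel_predict(sub_models, v, x) > 0.5) == (y == 1) for x, y in test) / len(test)
    print(f"ensemble accuracy: {acc:.2f}")
```

In this sketch each client only ever downloads and trains the single sub-model assigned to its cluster, never the whole ensemble, which mirrors the memory argument the abstract makes; only the server holds all sub-models plus the over-arch.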
