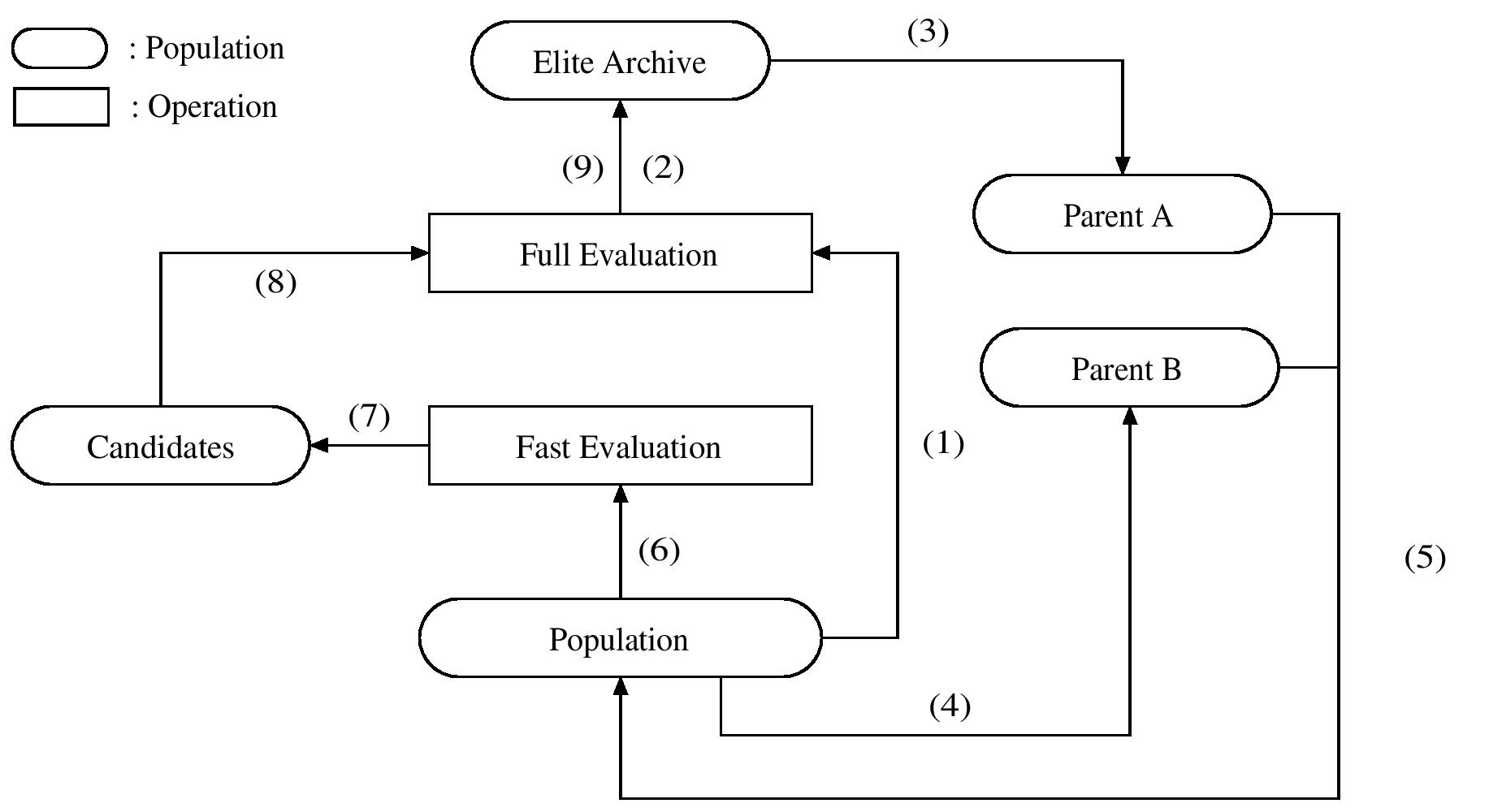

In recent years, graph neural networks (GNNs) have gained increasing attention because they are highly effective at solving graph-related problems. In practice, hyperparameter optimisation (HPO) is critical for GNNs to achieve satisfactory results, but the process is costly because evaluating different hyperparameter settings requires training many GNNs. Many HPO approaches have been proposed to identify promising hyperparameters efficiently. In particular, the genetic algorithm (GA) has been explored for HPO; it treats the GNN as a black-box model whose output can only be observed for a given set of hyperparameters. However, because GNN models are sophisticated and evaluating hyperparameters on them is expensive, GA needs advanced techniques to balance exploration and exploitation during the search and to make the optimisation more effective under limited computational resources. We therefore propose a tree-structured mutation strategy for GA to alleviate this issue. We also review recent HPO work, which leaves room for the tree-structured idea to develop further, and we hope our approach can improve these HPO methods in the future.
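
To make the black-box setting concrete, here is a minimal sketch of a plain steady-state GA for HPO. It assumes a small discrete search space, a fixed budget of evaluations, and a cheap synthetic function standing in for "train a GNN with these hyperparameters and return validation accuracy". Tournament selection and replace-the-worst provide exploitation, while crossover and random mutation provide exploration. The mutation here is ordinary per-gene resampling, not the tree-structured mutation proposed in this work, and every name (`SEARCH_SPACE`, `genetic_hpo`, `fake_gnn_accuracy`) and value range is a hypothetical illustration.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical discrete search space; names and value ranges are illustrative only.
SEARCH_SPACE: Dict[str, list] = {
    "lr": [1e-4, 5e-4, 1e-3, 5e-3, 1e-2],
    "hidden": [16, 32, 64, 128, 256],
    "dropout": [0.0, 0.2, 0.4, 0.6],
    "layers": [1, 2, 3, 4],
}
Config = Dict[str, object]


def random_config(rng: random.Random) -> Config:
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def crossover(a: Config, b: Config, rng: random.Random) -> Config:
    # Uniform crossover: each hyperparameter is inherited from a randomly chosen parent.
    return {name: (a[name] if rng.random() < 0.5 else b[name]) for name in SEARCH_SPACE}


def mutate(cfg: Config, rng: random.Random, rate: float = 0.25) -> Config:
    # Plain per-gene resampling; this is NOT the paper's tree-structured mutation.
    child = dict(cfg)
    for name, values in SEARCH_SPACE.items():
        if rng.random() < rate:
            child[name] = rng.choice(values)
    return child


def genetic_hpo(evaluate: Callable[[Config], float], budget: int = 40,
                pop_size: int = 8, seed: int = 0) -> Tuple[Config, float, int]:
    """Steady-state GA that only sees `evaluate` as an expensive black box."""
    if pop_size > budget or pop_size < 3:
        raise ValueError("need 3 <= pop_size <= budget")
    rng = random.Random(seed)
    cache: Dict[tuple, float] = {}  # each distinct setting is evaluated at most once

    def fitness(cfg: Config) -> float:
        key = tuple(sorted(cfg.items()))
        if key not in cache:
            cache[key] = evaluate(cfg)  # the costly step, e.g. training one GNN
        return cache[key]

    population: List[Config] = [random_config(rng) for _ in range(pop_size)]
    for cfg in population:
        fitness(cfg)

    steps = 0
    # Stop when the evaluation budget is spent; the step guard avoids looping forever on cache hits.
    while len(cache) < budget and steps < 50 * budget:
        steps += 1
        # Exploitation: size-3 tournaments favour fitter parents.
        p1 = max(rng.sample(population, 3), key=fitness)
        p2 = max(rng.sample(population, 3), key=fitness)
        # Exploration: crossover plus random mutation proposes a new setting.
        child = mutate(crossover(p1, p2, rng), rng)
        worst = min(range(pop_size), key=lambda i: fitness(population[i]))
        if fitness(child) > fitness(population[worst]):
            population[worst] = child

    best_key = max(cache, key=cache.get)
    return dict(best_key), cache[best_key], len(cache)


def fake_gnn_accuracy(cfg: Config) -> float:
    # Synthetic stand-in for GNN validation accuracy; peaks at 1.0 for an arbitrary setting.
    return (1.0
            - 0.1 * abs(math.log10(cfg["lr"]) - math.log10(5e-3))
            - abs(cfg["hidden"] - 64) / 1000
            - 0.2 * abs(cfg["dropout"] - 0.4)
            - 0.05 * abs(cfg["layers"] - 2))


if __name__ == "__main__":
    best, score, used = genetic_hpo(fake_gnn_accuracy, budget=40)
    print(best, round(score, 3), used)
```

In a real run, `fake_gnn_accuracy` would be replaced by a function that trains a GNN and returns a validation metric; the cache matters there because every call is a full training run. The `mutate` step is where a more informed operator could be substituted.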
