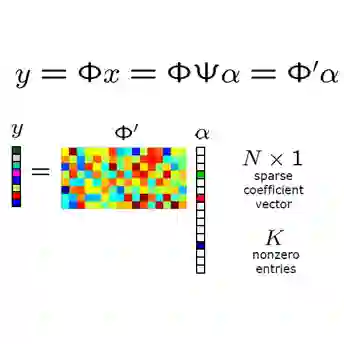

The integration of compressed sensing and parallel imaging (CS-PI) provides a robust mechanism for accelerating MRI acquisitions. However, most such strategies require the explicit formation of either coil sensitivity profiles or a cross-coil correlation operator, and as a result, reconstruction amounts to solving a challenging bilinear optimization problem. In this work, we present an unsupervised deep learning framework for calibration-free parallel MRI, coined universal generative modeling for parallel imaging (UGM-PI). More precisely, we combine the merits of the wavelet transform and an adaptive iteration strategy in a unified framework. In the training phase, we train a powerful noise conditional score network using a wavelet tensor as the network input. Experimental results on both physical phantom and in vivo datasets indicate that the proposed method is comparable or even superior to state-of-the-art CS-PI reconstruction approaches.
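The abstract does not specify how the wavelet tensor is constructed. As a minimal sketch, assuming a single-level orthonormal Haar transform applied to each coil image, with the real and imaginary parts of all four subbands stacked as channels, the NumPy code below shows one way such a network input could be formed and inverted. The function names and the Haar/real-imaginary layout are hypothetical illustrations, not the UGM-PI implementation.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT over the last two axes (H, W even).
    Returns (ll, d_w, d_h, d_hw), each of shape (..., H/2, W/2)."""
    a = x[..., 0::2, 0::2]
    b = x[..., 0::2, 1::2]
    c = x[..., 1::2, 0::2]
    d = x[..., 1::2, 1::2]
    ll = (a + b + c + d) / 2    # approximation subband
    d_w = (a - b + c - d) / 2   # detail: differences along W
    d_h = (a + b - c - d) / 2   # detail: differences along H
    d_hw = (a - b - c + d) / 2  # diagonal detail
    return ll, d_w, d_h, d_hw

def coils_to_wavelet_tensor(coil_images):
    """Hypothetical layout: complex (C, H, W) -> real (C*4*2, H/2, W/2)."""
    bands = np.stack(haar_dwt2(coil_images), axis=1)     # (C, 4, H/2, W/2)
    tensor = np.stack([bands.real, bands.imag], axis=2)  # (C, 4, 2, H/2, W/2)
    return tensor.reshape(coil_images.shape[0] * 8, *tensor.shape[-2:])

def wavelet_tensor_to_coils(tensor, num_coils):
    """Exact inverse of coils_to_wavelet_tensor (the Haar matrix is orthonormal)."""
    h, w = tensor.shape[-2:]
    t = tensor.reshape(num_coils, 4, 2, h, w)
    bands = t[:, :, 0] + 1j * t[:, :, 1]                 # (C, 4, h, w), complex
    ll, d_w, d_h, d_hw = (bands[:, k] for k in range(4))
    out = np.empty((num_coils, 2 * h, 2 * w), dtype=complex)
    out[:, 0::2, 0::2] = (ll + d_w + d_h + d_hw) / 2
    out[:, 0::2, 1::2] = (ll - d_w + d_h - d_hw) / 2
    out[:, 1::2, 0::2] = (ll + d_w - d_h - d_hw) / 2
    out[:, 1::2, 1::2] = (ll - d_w - d_h + d_hw) / 2
    return out

# Usage on synthetic 8-coil, 64x64 complex data (random, not real MRI data)
rng = np.random.default_rng(0)
coils = rng.standard_normal((8, 64, 64)) + 1j * rng.standard_normal((8, 64, 64))
x = coils_to_wavelet_tensor(coils)
print(x.shape)  # (64, 32, 32)
recon = wavelet_tensor_to_coils(x, num_coils=8)
```

Because the Haar transform is orthonormal, the tensor maps back to the coil images exactly and preserves signal energy, which is convenient when an iterative reconstruction must alternate between the network's domain and the coil images. Whether UGM-PI uses Haar or another wavelet, and how it orders the channels, is not stated in this abstract.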
