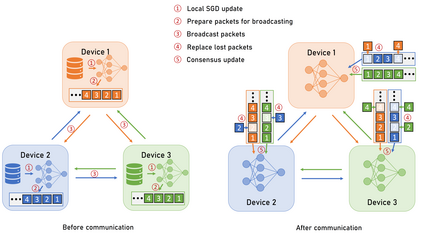

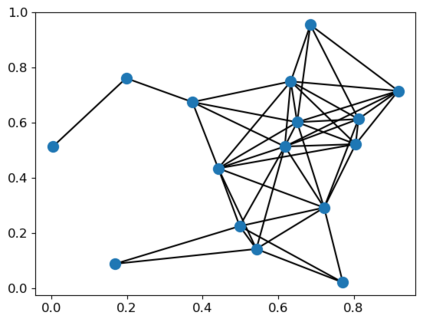

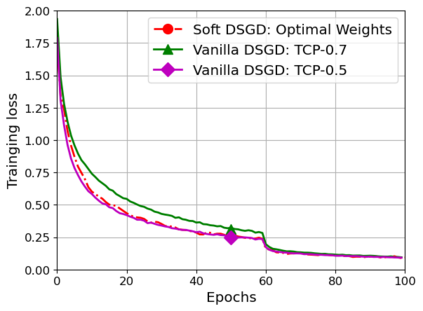

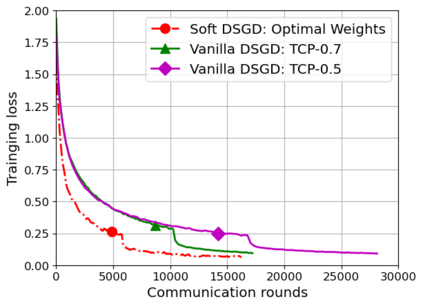

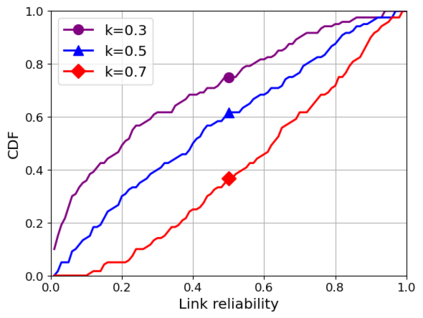

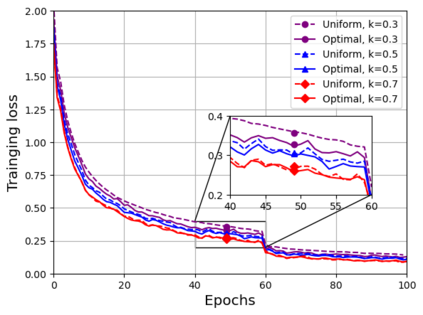

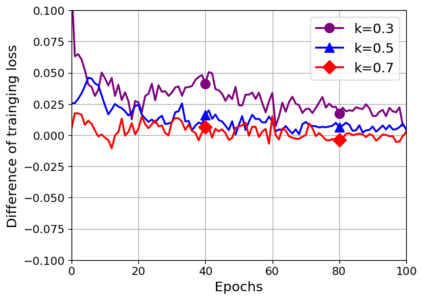

Decentralized federated learning, which builds on decentralized learning, enables edge devices to collaborate on model training in a peer-to-peer manner without the assistance of a server. However, existing decentralized learning frameworks usually assume perfect communication among devices, i.e., that devices can reliably exchange messages such as gradients or parameters. Real-world communication networks, in contrast, are prone to packet loss and transmission errors, and transmission reliability comes at a price. The common solution is to adopt a reliable transport-layer protocol, e.g., the transmission control protocol (TCP), which, however, incurs significant communication overhead and limits the connectivity that can be supported among devices. For communication networks that use a lightweight but unreliable protocol, the user datagram protocol (UDP), we propose a robust decentralized stochastic gradient descent (SGD) approach, called Soft-DSGD, to address the unreliability issue. Soft-DSGD updates the model parameters with partially received messages and optimizes the mixing weights according to the reliability matrix of the communication links. We prove that the proposed decentralized training system, even with unreliable communications, can still achieve the same asymptotic convergence rate as vanilla decentralized SGD with perfect communications. Moreover, numerical results confirm that the proposed approach can leverage all available unreliable communication links to speed up convergence.
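To make "updating with partially received messages" concrete, here is a minimal Python sketch of one synchronous round of decentralized SGD over lossy links. It is not the Soft-DSGD algorithm itself. The function name `lossy_dsgd_step`, the rule of substituting a node's own model for any lost neighbor message, the D-PSGD-style ordering (mix, then take a local gradient step), and the fixed, hand-picked mixing weights are all assumptions made for illustration. In particular, the sketch does not perform the mixing-weight optimization described above. Delivery of node j's packet to node i is modeled as an independent Bernoulli event with probability `reliability[i][j]`, standing in for the link reliability matrix.

```python
import random
from typing import List, Sequence

Vector = List[float]


def lossy_dsgd_step(
    params: Sequence[Vector],
    grads: Sequence[Vector],
    weights: Sequence[Sequence[float]],
    reliability: Sequence[Sequence[float]],
    lr: float,
    rng: random.Random,
) -> List[Vector]:
    """One synchronous decentralized SGD round over unreliable (UDP-like) links.

    Hypothetical sketch, not the Soft-DSGD reference implementation:
      params[i]          current model of node i
      grads[i]           stochastic gradient computed by node i at params[i]
      weights[i][j]      mixing weight node i puts on node j (each row sums to 1)
      reliability[i][j]  probability that node j's packet reaches node i
    A lost packet is replaced by node i's own model, so the weights actually
    applied in every row still sum to 1.
    """
    n = len(params)
    new_params: List[Vector] = []
    for i in range(n):
        mixed = [0.0] * len(params[i])
        for j in range(n):
            w = weights[i][j]
            if w == 0.0:
                continue  # j is not a neighbor of i
            # A node always "receives" its own model; neighbors' packets may drop.
            delivered = j == i or rng.random() < reliability[i][j]
            source = params[j] if delivered else params[i]
            for k, value in enumerate(source):
                mixed[k] += w * value
        # Gossip averaging followed by a local gradient step (D-PSGD style).
        new_params.append([m - lr * g for m, g in zip(mixed, grads[i])])
    return new_params


if __name__ == "__main__":
    rng = random.Random(0)
    # Three fully connected nodes with toy objectives f_i(x) = 0.5 * (x - c_i)^2.
    targets = [1.0, 2.0, 6.0]
    x = [[0.0], [0.0], [0.0]]
    W = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25], [0.25, 0.25, 0.5]]
    P = [[1.0, 0.8, 0.6], [0.8, 1.0, 0.9], [0.6, 0.9, 1.0]]
    for _ in range(200):
        g = [[xi[0] - c] for xi, c in zip(x, targets)]
        x = lossy_dsgd_step(x, g, W, P, lr=0.05, rng=rng)
    print([round(xi[0], 3) for xi in x])
```

Because each lost message is replaced by the receiver's own model, every row of the effective mixing matrix still sums to 1, but the matrix is generally no longer doubly stochastic, so the network average can drift from round to round. This is presumably one reason to choose the mixing weights with the link reliabilities in mind rather than fixing them by hand as this sketch does.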
