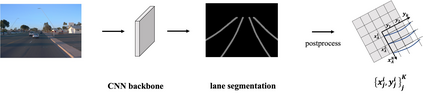

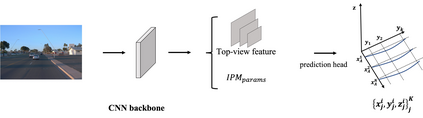

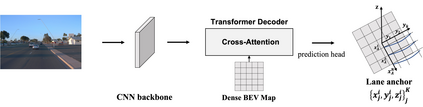

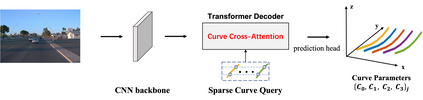

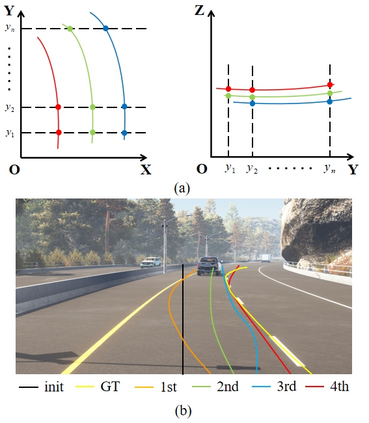

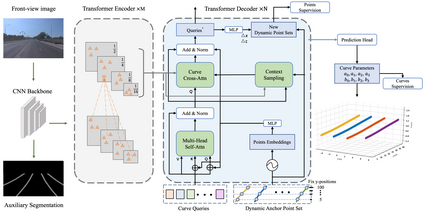

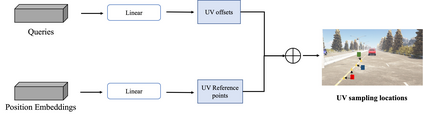

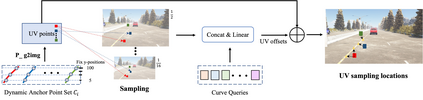

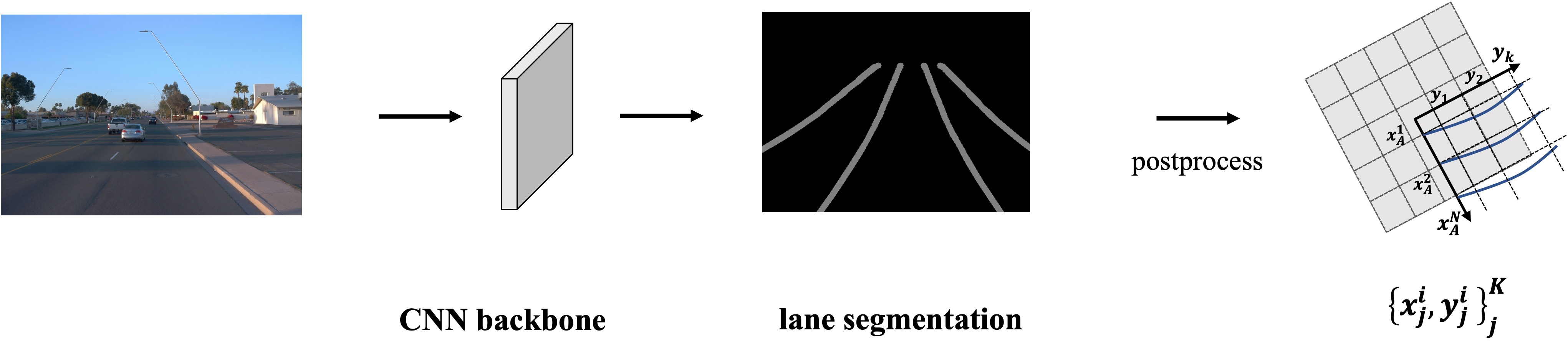

3D lane detection is an integral part of autonomous driving systems. Previous CNN- and Transformer-based methods usually first generate a bird's-eye-view (BEV) feature map from the front-view image and then use a sub-network that takes the BEV feature map as input to predict 3D lanes. Such approaches require an explicit view transformation between the BEV and the front view, which is itself still a challenging problem. In this paper, we propose CurveFormer, a single-stage Transformer-based method that directly calculates 3D lane parameters and circumvents the difficult view transformation step. Specifically, we formulate 3D lane detection as a curve propagation problem by using curve queries. A 3D lane query is represented by a dynamic and ordered anchor point set. In this way, queries with a curve representation in the Transformer decoder iteratively refine the 3D lane detection results. Moreover, a curve cross-attention module is introduced to compute the similarities between curve queries and image features. Additionally, a context sampling module that captures more relevant image features for a curve query is provided to further boost 3D lane detection performance. We evaluate our method on both synthetic and real-world datasets, and the experimental results show that it achieves promising performance compared with state-of-the-art approaches. The effectiveness of each component is also validated via ablation studies.
