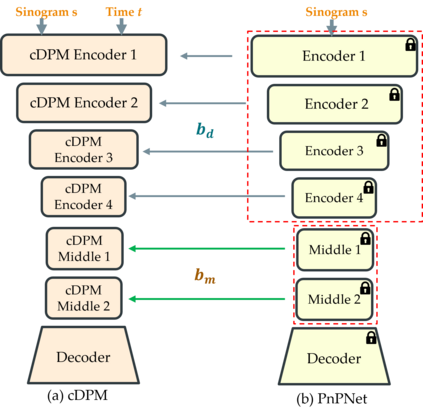

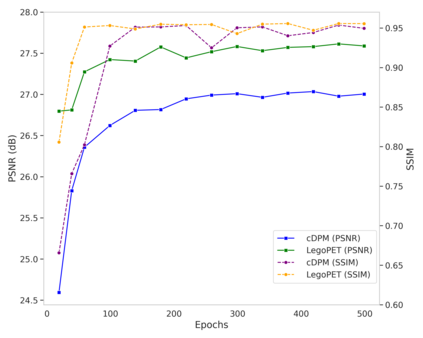

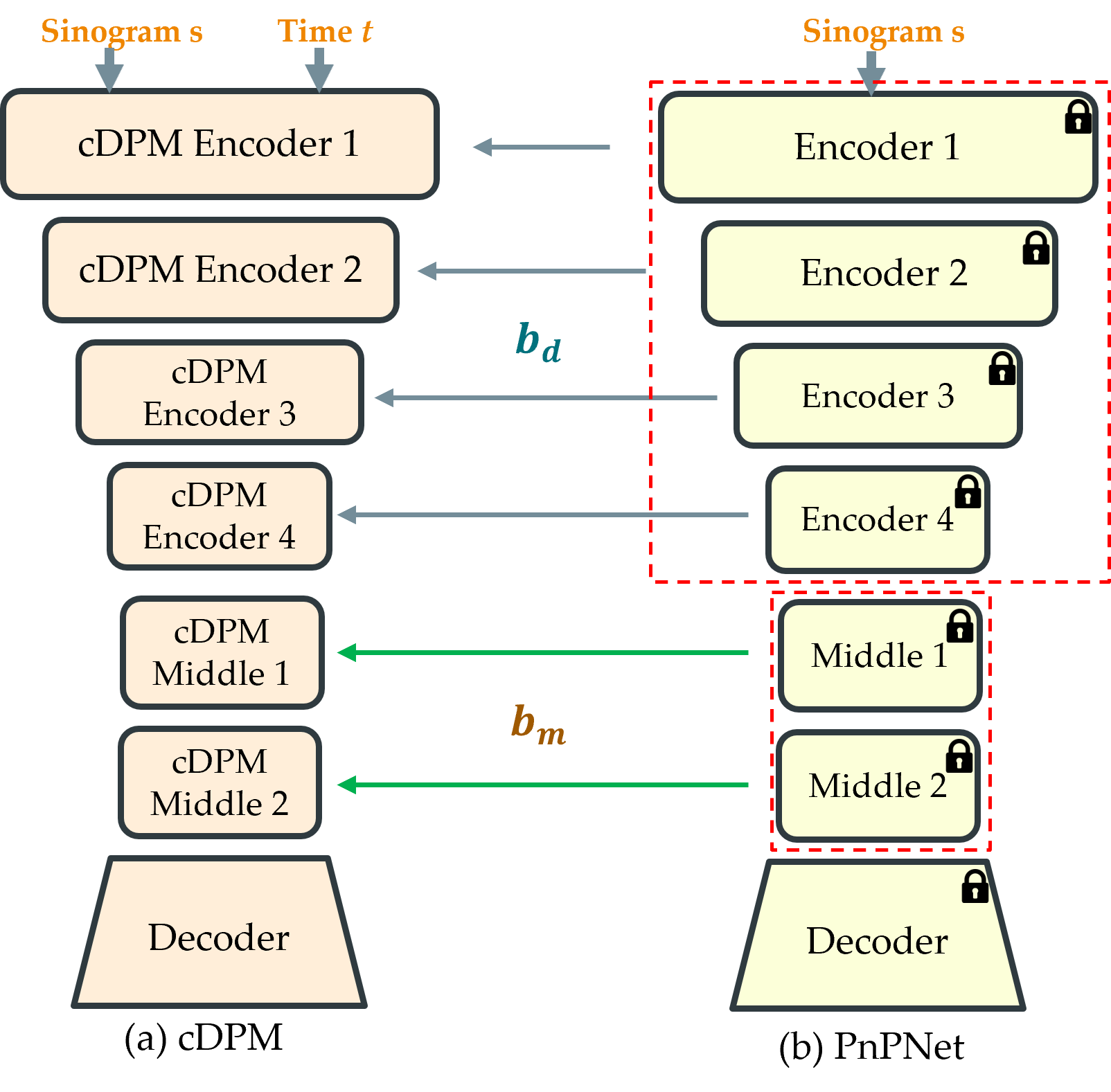

Positron emission tomography (PET) is widely used for cancer detection because it can visualize functional and biological processes in vivo. PET images are usually reconstructed from histogrammed raw data (sinograms) using traditional iterative techniques (e.g., OSEM, MLEM). Recently, deep learning (DL) methods have shown promise by directly mapping raw sinogram data to PET images. However, regression-based DL approaches often produce overly smooth images, while GAN-based approaches tend to introduce artifacts. Image-conditioned diffusion probabilistic models (cDPMs) are another class of likelihood-based DL techniques, capable of generating highly realistic and controllable images. Despite these strengths, cDPMs still face challenges: maintaining correspondence and consistency between input and output images when the two come from different domains (e.g., sinogram vs. image domain), and slow convergence. To address these limitations, we introduce LegoPET, a hierarchical feature guided conditional diffusion model for high-perceptual-quality PET image reconstruction from sinograms. We conducted several experiments demonstrating that LegoPET not only improves the performance of cDPMs but also surpasses recent DL-based PET image reconstruction techniques in terms of visual quality and pixel-level PSNR/SSIM metrics. Our code is available at https://github.com/yransun/LegoPET.
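
For readers less familiar with the traditional iterative baseline named above, the following is a minimal sketch of the standard MLEM multiplicative update, written in plain Python on a toy problem. It is not taken from the LegoPET repository: the function name `mlem`, the 3-bin by 2-pixel system matrix, and the noise-free sinogram are all illustrative assumptions, and a real scanner model would also include attenuation, scatter, and randoms.

```python
def mlem(system_matrix, sinogram, n_iters=50, eps=1e-12):
    """Toy MLEM reconstruction (illustrative sketch, not the paper's code).

    system_matrix[i][j]: assumed contribution of pixel j to sinogram bin i.
    sinogram[i]: measured counts in bin i.
    """
    n_bins = len(system_matrix)
    n_pixels = len(system_matrix[0])

    # Sensitivity image: sum of each column of the system matrix.
    sensitivity = [
        sum(system_matrix[i][j] for i in range(n_bins)) for j in range(n_pixels)
    ]

    # Start from a uniform, strictly positive image.
    image = [1.0] * n_pixels

    for _ in range(n_iters):
        # Forward-project the current estimate into sinogram space.
        projection = [
            sum(system_matrix[i][j] * image[j] for j in range(n_pixels))
            for i in range(n_bins)
        ]
        # Ratio of measured to estimated counts (guarding against division by zero).
        ratio = [sinogram[i] / max(projection[i], eps) for i in range(n_bins)]
        # Back-project the ratio into image space.
        backprojection = [
            sum(system_matrix[i][j] * ratio[i] for i in range(n_bins))
            for j in range(n_pixels)
        ]
        # Multiplicative update, normalized by the sensitivity image.
        image = [
            image[j] * backprojection[j] / max(sensitivity[j], eps)
            for j in range(n_pixels)
        ]
    return image


# Hypothetical toy problem: two pixels observed by three sinogram bins.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
true_image = [2.0, 3.0]
y = [sum(a * x for a, x in zip(row, true_image)) for row in A]  # noise-free data

recon = mlem(A, y)
print(recon)
```

On this consistent, noise-free toy problem the estimate approaches the true pixel values as the iterations proceed. With real, noisy data MLEM is typically stopped early or regularized, which is one motivation for the learned reconstruction methods discussed above.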
