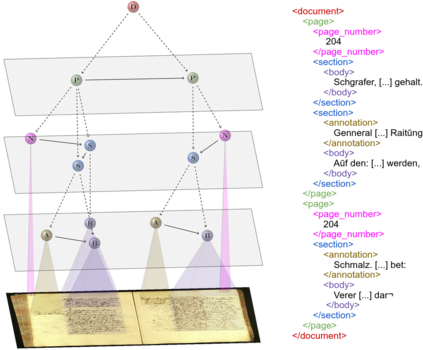

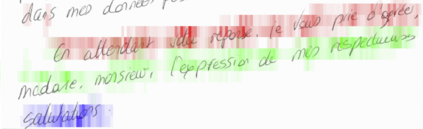

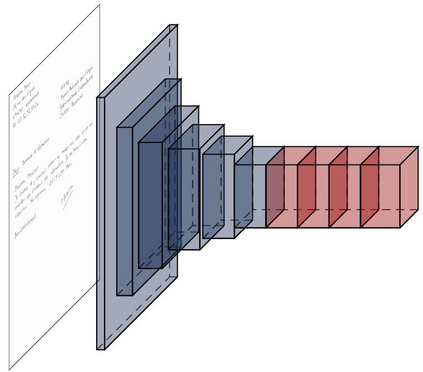

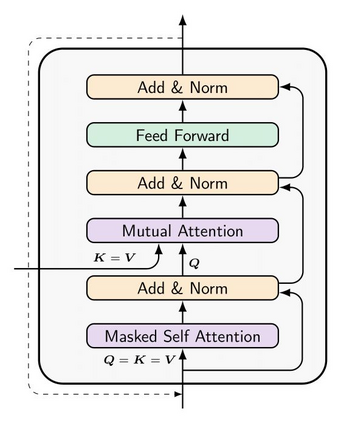

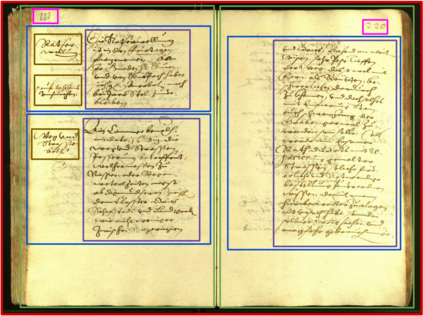

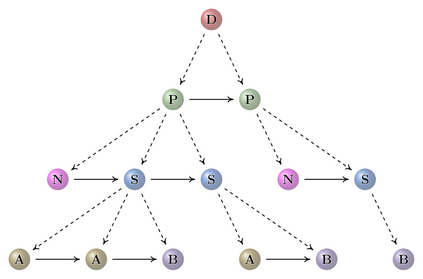

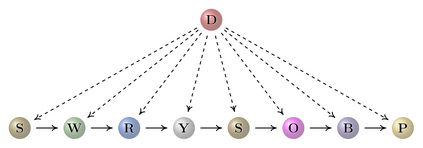

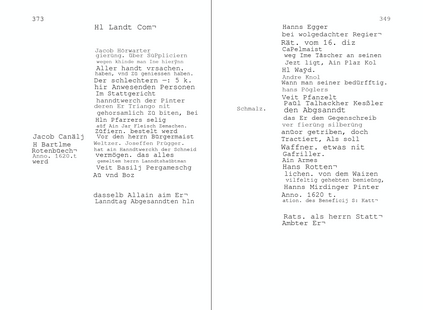

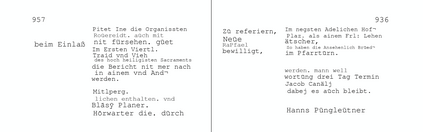

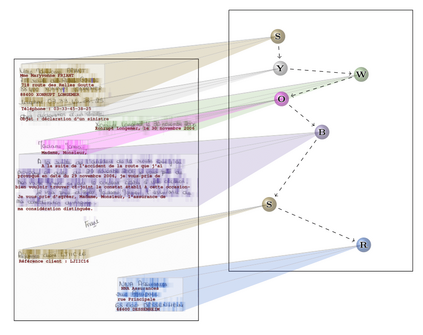

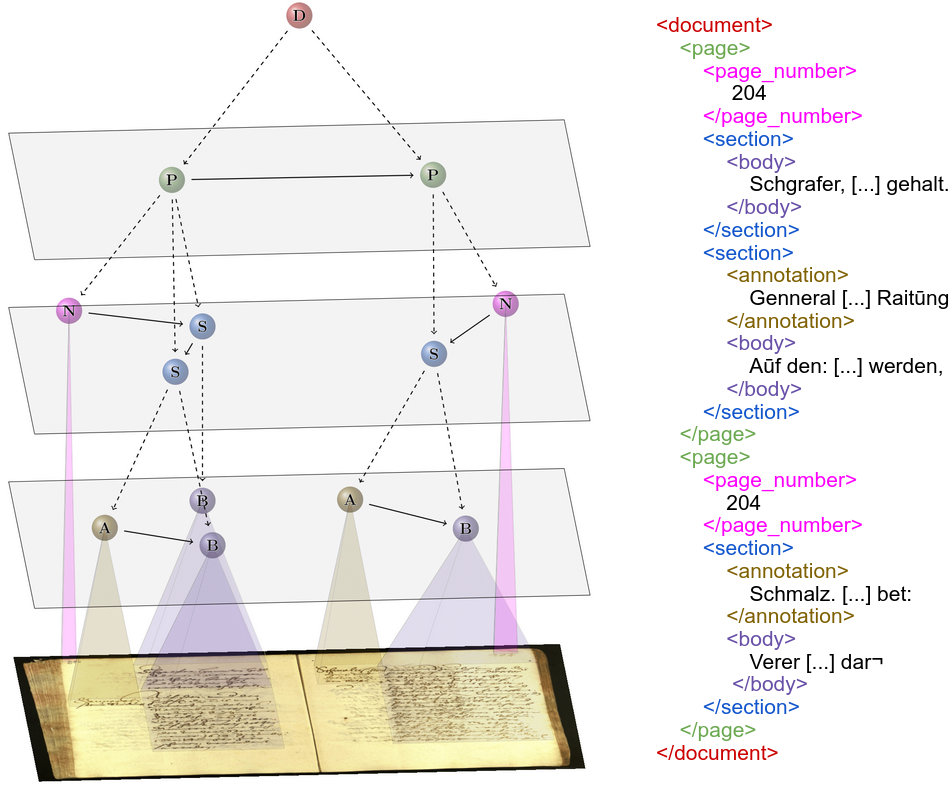

Unconstrained handwritten text recognition is a challenging computer vision task. It is traditionally handled by a two-step approach: line segmentation followed by text line recognition. For the first time, we propose an end-to-end, segmentation-free architecture for the task of handwritten document recognition: the Document Attention Network (DAN). In addition to recognizing text, the model is trained to label text parts using begin and end tags in an XML-like fashion. The model consists of a fully convolutional network (FCN) encoder for feature extraction and a stack of transformer decoder layers for a recurrent, token-by-token prediction process. It takes whole text documents as input and sequentially outputs characters as well as logical layout tokens. Unlike existing segmentation-based approaches, the model is trained without any segmentation labels. We achieve competitive results on the READ 2016 dataset at both page and double-page level, with a character error rate (CER) of 3.43% and 3.70%, respectively. We also provide results for the RIMES 2009 dataset at page level, reaching a CER of 4.54%. We provide all source code and pre-trained model weights at https://github.com/FactoDeepLearning/DAN.
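All results above are reported as character error rates. As a reminder of what that number measures, here is a minimal sketch of the standard definition: the character-level Levenshtein distance between prediction and ground truth, divided by the number of ground-truth characters. It assumes a corpus-level normalization (total edits over total reference characters); the abstract does not state whether DAN's evaluation averages per page instead, or how layout tokens are handled before scoring, so treat those details as assumptions. The function names `levenshtein` and `cer` are illustrative, not taken from the DAN code base.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    # prev[j] holds the distance between the previous prefix of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete r from the reference
                curr[j - 1] + 1,         # insert h into the reference
                prev[j - 1] + (r != h),  # substitute (free when characters match)
            )
        prev = curr
    return prev[-1]


def cer(refs: list[str], hyps: list[str]) -> float:
    """Corpus-level CER: total edit distance divided by total reference length.

    Assumption: this normalization (rather than a mean of per-page CERs)
    is used for illustration only.
    """
    if len(refs) != len(hyps):
        raise ValueError("refs and hyps must have the same length")
    total_edits = sum(levenshtein(r, h) for r, h in zip(refs, hyps))
    total_chars = sum(len(r) for r in refs)
    return total_edits / total_chars


if __name__ == "__main__":
    # "kitten" -> "sitting" needs 3 edits over 6 reference characters
    print(f"CER = {100 * cer(['kitten'], ['sitting']):.2f}%")
```

Multiplying by 100 gives the percentage form used in the abstract, so a CER of 3.43% corresponds to roughly 3.43 character edits per 100 ground-truth characters. Averaging per-page CERs instead would weight short pages more heavily and can give a different figure.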
