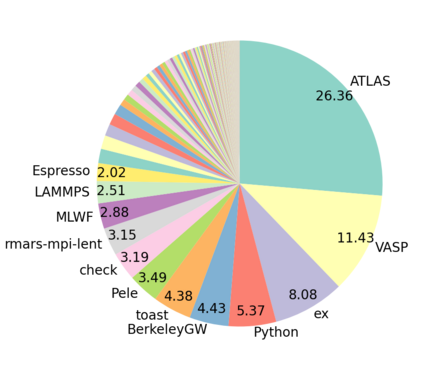

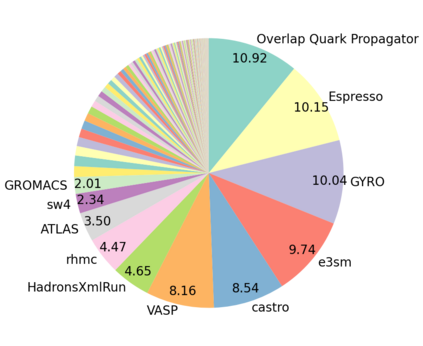

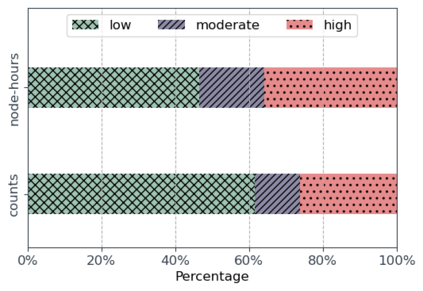

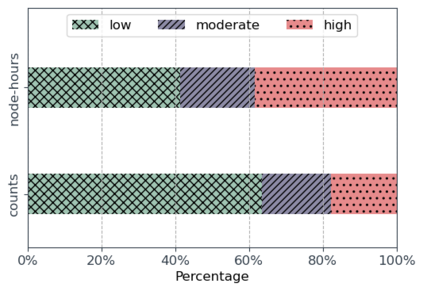

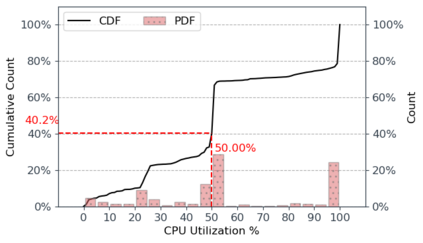

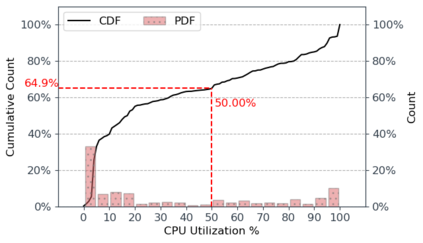

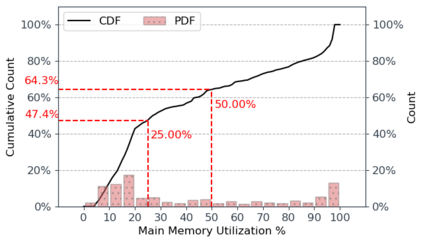

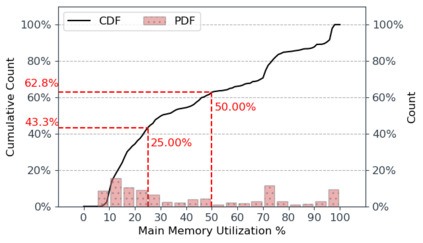

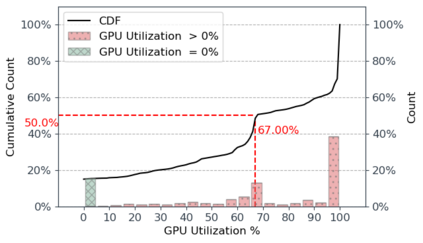

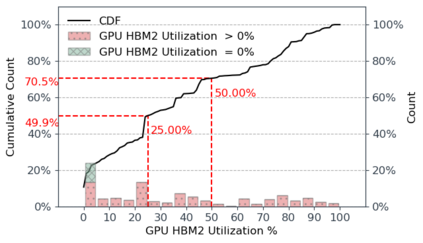

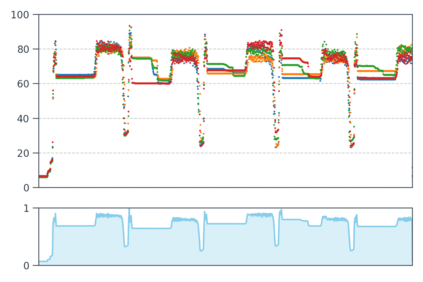

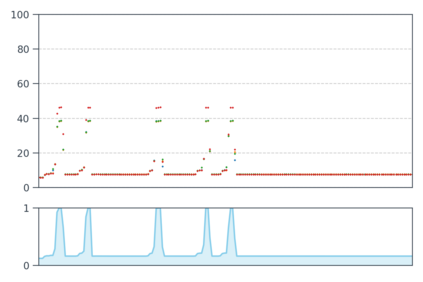

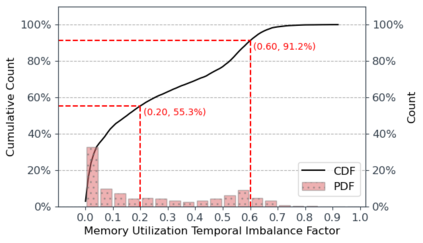

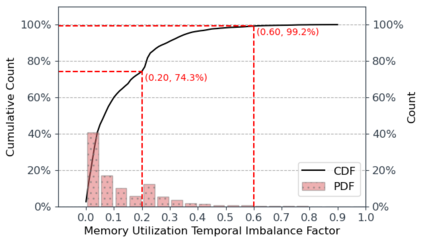

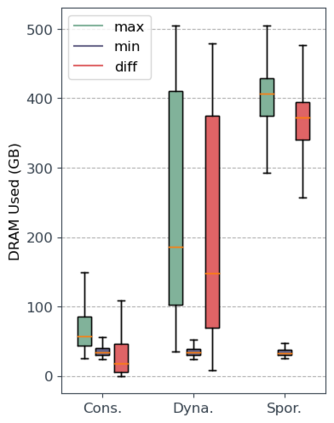

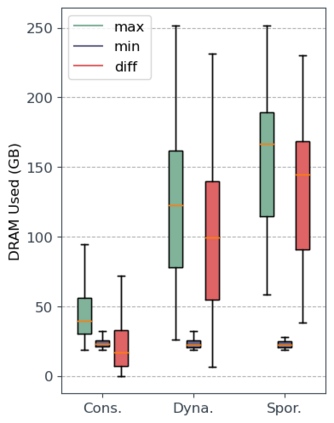

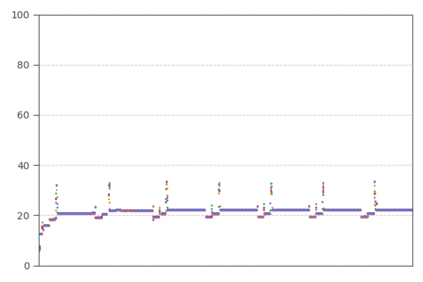

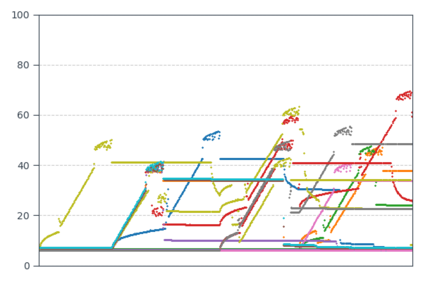

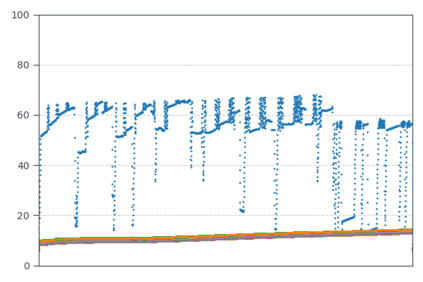

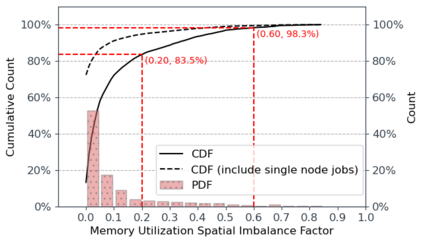

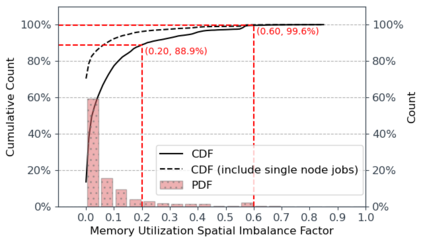

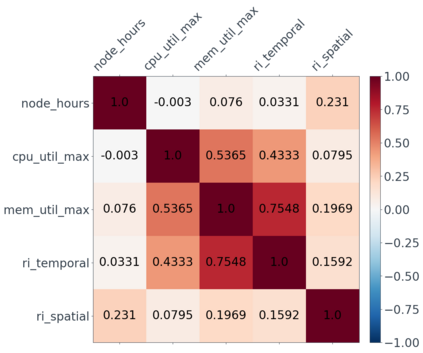

Resource demands of HPC applications vary significantly. However, it is common for HPC systems to primarily assign resources on a per-node basis to prevent interference from co-located workloads. This gap between the coarse-grained resource allocation and the varying resource demands can lead to HPC resources being not fully utilized. In this study, we analyze the resource usage and application behavior of NERSC's Perlmutter, a state-of-the-art open-science HPC system with both CPU-only and GPU-accelerated nodes. Our one-month usage analysis reveals that CPUs are commonly not fully utilized, especially for GPU-enabled jobs. Also, around 64% of both CPU and GPU-enabled jobs used 50% or less of the available host memory capacity. Additionally, about 50% of GPU-enabled jobs used up to 25% of the GPU memory, and the memory capacity was not fully utilized in some ways for all jobs. While our study comes early in Perlmutter's lifetime thus policies and application workload may change, it provides valuable insights on performance characterization, application behavior, and motivates systems with more fine-grain resource allocation.

翻译:HPC应用程序的资源需求差异很大。 但是,HPC系统通常主要以每个节点为基础分配资源,以防止共同分担的工作量受到干扰。 粗略资源分配与不同资源需求之间的这一差距可能导致HPC资源没有得到充分利用。 在本研究中,我们分析了NERSC的Perlmutter(一个只有CPU和GPU加速节点的先进开放科技HPC系统)的资源使用和应用行为。 我们一个月的使用分析显示,CPU通常没有得到充分利用,特别是GPU驱动的工作。此外,大约64%的CPU和GPU驱动的工作使用了50%或更少的现有主机存储能力。此外,大约50%的GPUPU辅助工作用于25%的GPU记忆,而记忆能力在某些方面并没有被充分利用于所有工作。 我们的研究在Permutter的生命周期初期即开始,因此政策和应用工作量可能会发生变化,它对业绩定性、应用行为和激励资源配置更为精细的系统提供了宝贵的见解。</s>