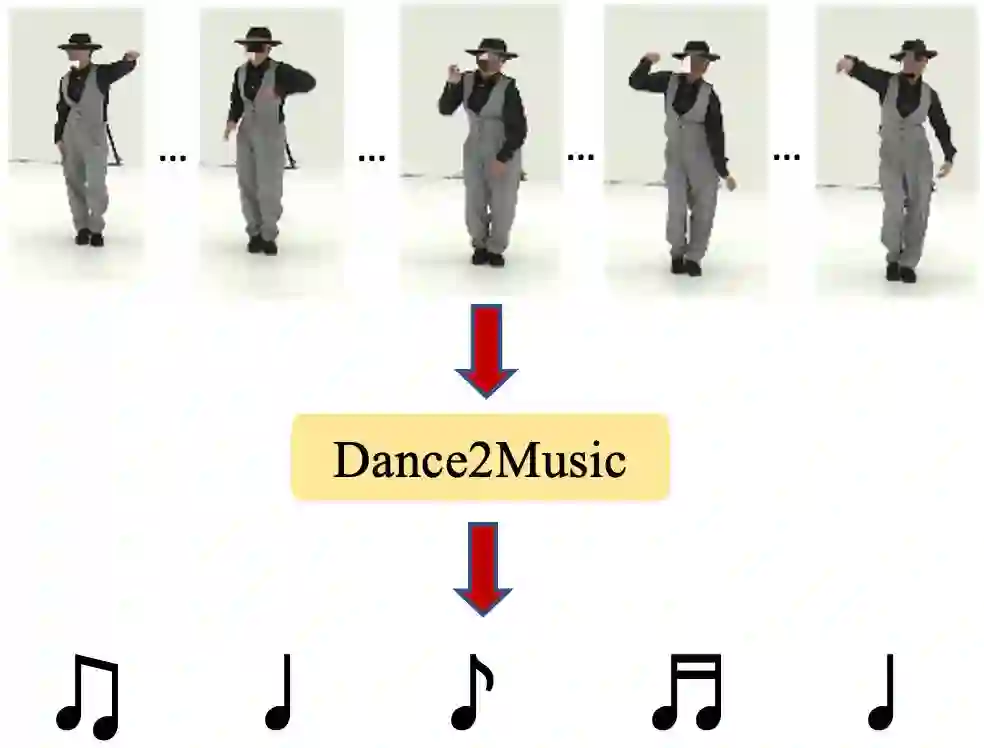

Dance and music typically go hand in hand. The complexities of dance, music, and their synchronisation make them fascinating to study from a computational creativity perspective. While several works have looked at generating dance for a given piece of music, automatically generating music for a given dance remains under-explored. This capability could have several applications in creative expression and entertainment. We present some early explorations in this direction: a search-based offline approach that generates music after processing the entire dance video, and an online approach that uses a deep neural network to generate music on-the-fly as the video proceeds. We compare these approaches to a strong heuristic baseline via human studies and present our findings. We have integrated our online approach in a live demo! A video of the demo can be found here: https://sites.google.com/view/dance2music/live-demo.
