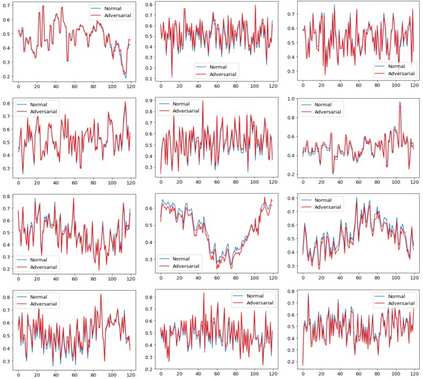

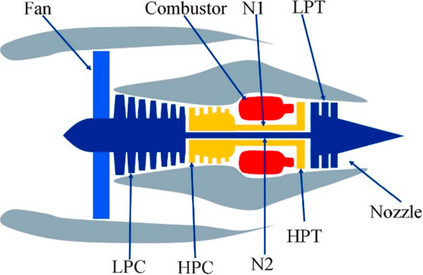

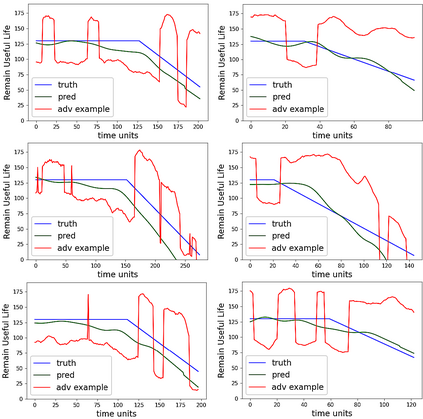

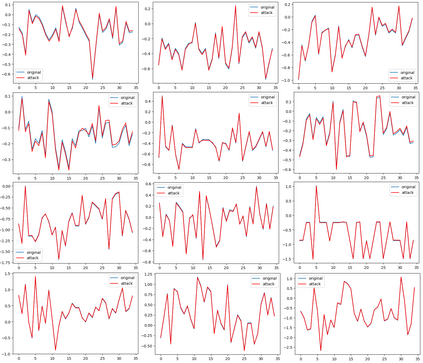

With the proliferation of deep learning (DL) applications across diverse domains, the vulnerability of DL models to adversarial attacks has become a research topic of growing interest in computer vision (CV) and natural language processing (NLP). DL has also been widely adopted in prognostics and health management (PHM) applications, where the data are primarily time-series sensor measurements. While these advanced DL models have improved the performance of PHM algorithms, the vulnerability of those algorithms to adversarial attacks has drawn little attention in the PHM community. In this paper, we explore that vulnerability. More specifically, we investigate strategies for attacking PHM algorithms that account for several unique characteristics of time-series sensor measurement data. We use two real-world PHM applications as examples to validate our attack strategies and to demonstrate that PHM algorithms are indeed vulnerable to adversarial attacks.
