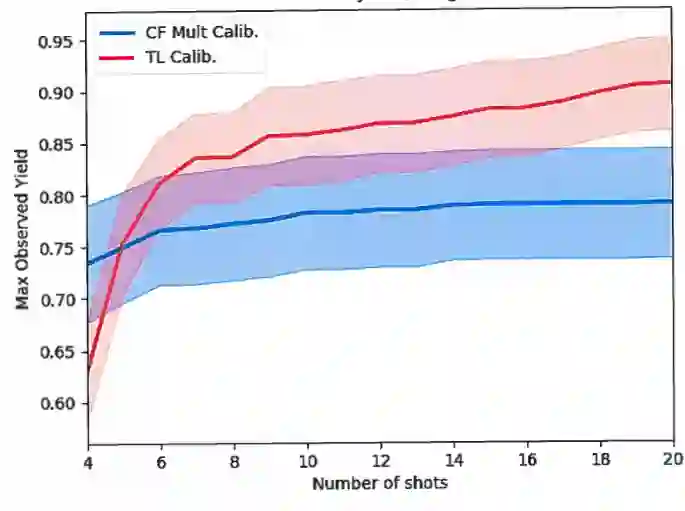

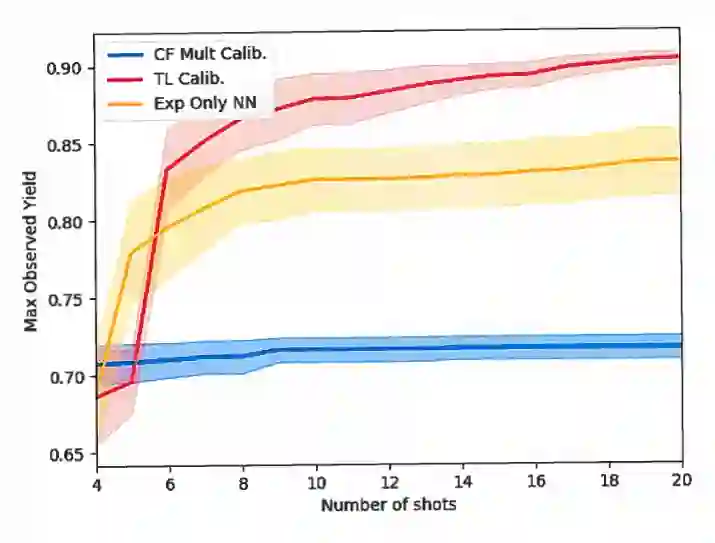

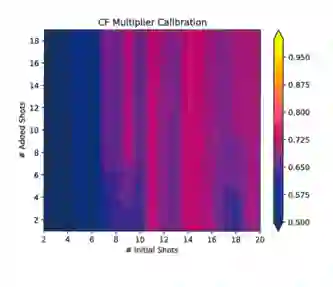

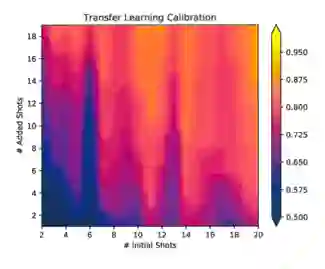

Transfer learning is a promising approach to creating predictive models that incorporate simulation and experimental data into a common framework. In this technique, a neural network is first trained on a large database of simulations, then partially retrained on sparse sets of experimental data to make its predictions more consistent with reality. This technique has previously been used to create predictive models of Omega and NIF inertial confinement fusion (ICF) experiments that are more accurate than simulations alone. In this work, we conduct a hypothetical ICF campaign driven by transfer learning, in which the goal is to maximize experimental neutron yield via Bayesian optimization. The transfer learning model achieves yields within 5% of the maximum achievable yield in a modestly sized design space in fewer than 20 experiments. Furthermore, we demonstrate that this method optimizes designs more efficiently than the traditional model calibration techniques commonly employed in ICF design. Such an approach to ICF design could enable robust optimization of experimental performance under uncertainty.
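The two-stage training described above can be illustrated with a minimal sketch. It is not the authors' model: the `simulation` and `experiment` functions are hypothetical one-dimensional stand-ins, the network is a small one-hidden-layer tanh network written in NumPy, and "partial retraining" is assumed here to mean freezing the hidden layer and refitting only the output layer with a ridge penalty that keeps it close to the simulation-trained weights. The Bayesian optimization loop is not included.

```python
import numpy as np

def simulation(x):
    # Hypothetical stand-in for a simulation code (not an ICF model).
    return np.sin(3.0 * x)

def experiment(x):
    # Hypothetical "reality": the simulation with a systematic scale and offset error.
    return 0.8 * np.sin(3.0 * x) + 0.3

def hidden(x, W1, b1):
    # Hidden-layer features of a one-hidden-layer tanh network with scalar input.
    return np.tanh(x[:, None] * W1[None, :] + b1[None, :])

def predict(x, params):
    W1, b1, w2, b2 = params
    return hidden(x, W1, b1) @ w2 + b2

def pretrain(x, y, n_hidden=16, lr=0.05, steps=5000, seed=0):
    # Stage 1: train all weights on the large simulation data set
    # using full-batch gradient descent on the mean squared error.
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0, n_hidden)
    b1 = np.zeros(n_hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), n_hidden)
    b2 = 0.0
    n = len(x)
    for _ in range(steps):
        H = hidden(x, W1, b1)
        err = H @ w2 + b2 - y
        g_w2 = (2.0 / n) * (H.T @ err)
        g_b2 = (2.0 / n) * err.sum()
        dZ = (2.0 / n) * err[:, None] * w2[None, :] * (1.0 - H ** 2)
        g_W1 = x @ dZ
        g_b1 = dZ.sum(axis=0)
        W1 -= lr * g_W1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2
    return W1, b1, w2, b2

def retrain_output_layer(x_exp, y_exp, params, lam=0.1):
    # Stage 2: freeze the hidden layer and refit only the output layer on the
    # sparse experimental data. The ridge term lam * ||theta - theta0||^2
    # penalizes departures from the simulation-trained output weights.
    W1, b1, w2, b2 = params
    A = np.column_stack([hidden(x_exp, W1, b1), np.ones(len(x_exp))])
    theta0 = np.append(w2, b2)
    resid = y_exp - A @ theta0
    delta = np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ resid)
    theta = theta0 + delta
    return W1, b1, theta[:-1], theta[-1]

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

def run_demo():
    x_sim = np.linspace(-1.0, 1.0, 200)          # large simulation database
    params = pretrain(x_sim, simulation(x_sim))
    x_exp = np.linspace(-0.9, 0.9, 8)            # sparse experiments
    noise = 0.02 * np.random.default_rng(1).normal(size=x_exp.size)
    tuned = retrain_output_layer(x_exp, experiment(x_exp) + noise, params)
    x_test = np.linspace(-1.0, 1.0, 101)
    truth = experiment(x_test)
    return rmse(predict(x_test, params), truth), rmse(predict(x_test, tuned), truth)

rmse_before, rmse_after = run_demo()
print(f"RMSE vs. experiment before transfer: {rmse_before:.3f}")
print(f"RMSE vs. experiment after transfer:  {rmse_after:.3f}")
```

Refitting only the output layer keeps the number of adjustable parameters small relative to the handful of experimental points, which is the usual motivation for retraining a network partially rather than fully. Which layers to retrain in a real application is a design choice that this sketch does not address.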
