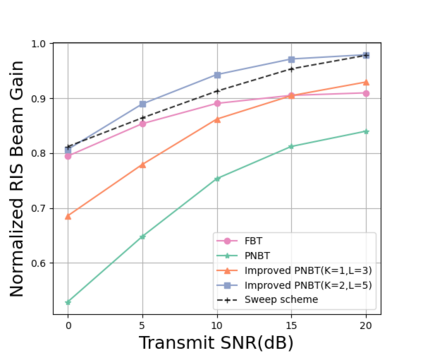

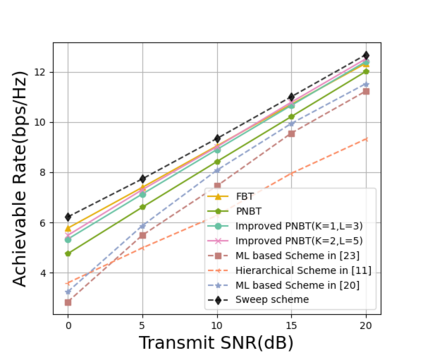

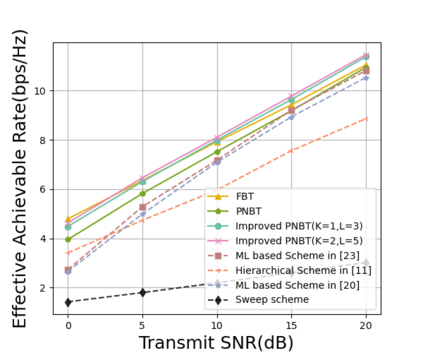

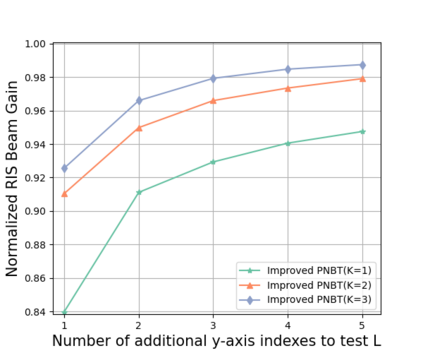

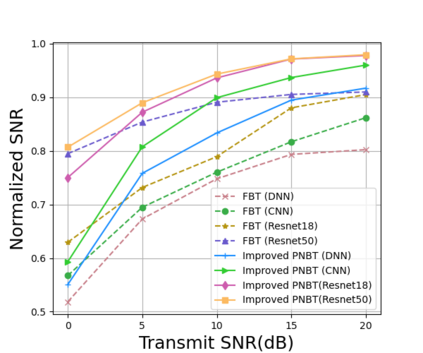

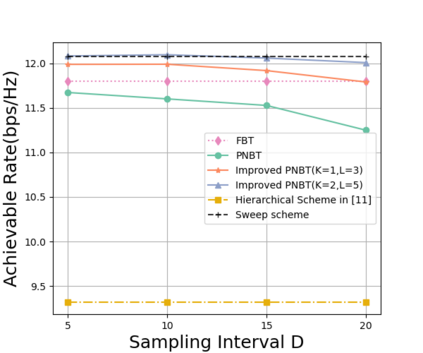

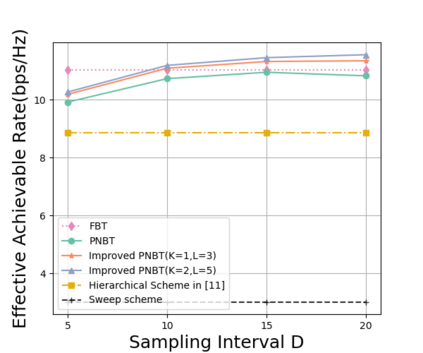

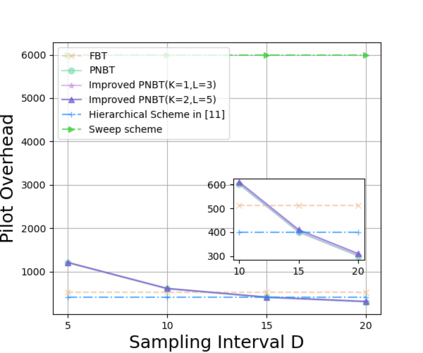

The extremely large-scale reconfigurable intelligent surface (XL-RIS) has recently been proposed and is recognized as a promising technology that can further enhance the capacity of communication systems and compensate for severe path loss. However, the pilot overhead of beam training in XL-RIS-assisted wireless communication systems is enormous because the near-field channel model must be taken into account, and the number of candidate codewords in the codebook increases dramatically as a result. To tackle this problem, we propose two deep learning-based near-field beam training schemes for XL-RIS-assisted communication systems, in which deep residual networks are employed to determine the optimal near-field RIS codeword. Specifically, we first propose a far-field beam-based beam training (FBT) scheme, in which the received signals of all far-field RIS codewords are fed into the neural network to estimate the optimal near-field RIS codeword. To further reduce the pilot overhead, we propose a partial near-field beam-based beam training (PNBT) scheme, in which only the received signals corresponding to a subset of the near-field XL-RIS codewords serve as input to the neural network. Moreover, we propose an improved PNBT scheme that enhances beam training performance by fully exploiting the neural network's output. Finally, simulation results show that the proposed schemes outperform existing beam training schemes and can reduce the beam sweeping overhead by approximately 95%.
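To make the codebook growth concrete, the following is a minimal sketch, not the paper's implementation. It assumes a single uniform linear array with half-wavelength spacing and a line-of-sight channel rather than the cascaded XL-RIS channel. The function names, array size, and angle and distance grids are all hypothetical. It shows why a near-field codebook is larger than a far-field one (each codeword is indexed by both angle and distance) and what the input and label of an FBT-style classifier could look like.

```python
import numpy as np

def element_positions(n_elements, spacing=0.5):
    # Element coordinates along the array, in wavelengths, centred on zero.
    return spacing * (np.arange(n_elements) - (n_elements - 1) / 2)

def far_field_codeword(n_elements, sin_theta):
    # Planar-wave model: the phase depends on the angle only.
    pos = element_positions(n_elements)
    return np.exp(2j * np.pi * pos * sin_theta) / np.sqrt(n_elements)

def near_field_codeword(n_elements, sin_theta, distance):
    # Spherical-wave model: the phase depends on angle and distance (in wavelengths).
    pos = element_positions(n_elements)
    r_n = np.sqrt(distance**2 + pos**2 - 2 * distance * pos * sin_theta)
    return np.exp(-2j * np.pi * (r_n - distance)) / np.sqrt(n_elements)

# Hypothetical sizes, chosen only for illustration.
N = 64
angles = np.linspace(-0.9, 0.9, 32)                # sampled values of sin(theta)
distances = np.array([20.0, 40.0, 80.0, 160.0])    # sampled distances, in wavelengths

far_codebook = np.stack([far_field_codeword(N, s) for s in angles])
near_codebook = np.stack(
    [near_field_codeword(N, s, d) for s in angles for d in distances]
)

# Toy line-of-sight channel focused at a point in the near field.
channel = near_field_codeword(N, 0.3, 50.0)

# Possible network input in an FBT-like scheme: received power per far-field codeword.
far_field_powers = np.abs(far_codebook.conj() @ channel) ** 2
# Possible training label: index of the near-field codeword with the largest gain.
best_near_index = int(np.argmax(np.abs(near_codebook.conj() @ channel) ** 2))

exhaustive_pilots = near_codebook.shape[0]   # one pilot per near-field codeword
fbt_pilots = far_codebook.shape[0]           # one pilot per far-field codeword
```

In this toy setup an exhaustive near-field sweep needs one pilot per (angle, distance) pair, while the far-field sweep needs one pilot per angle. The grid sizes here are illustrative only and are not meant to reproduce the overhead reduction reported in the abstract.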
