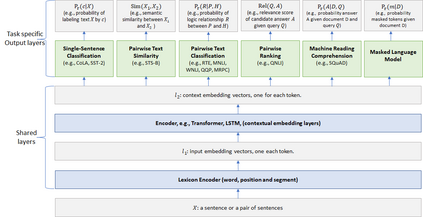

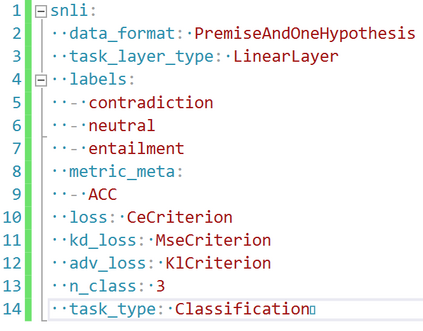

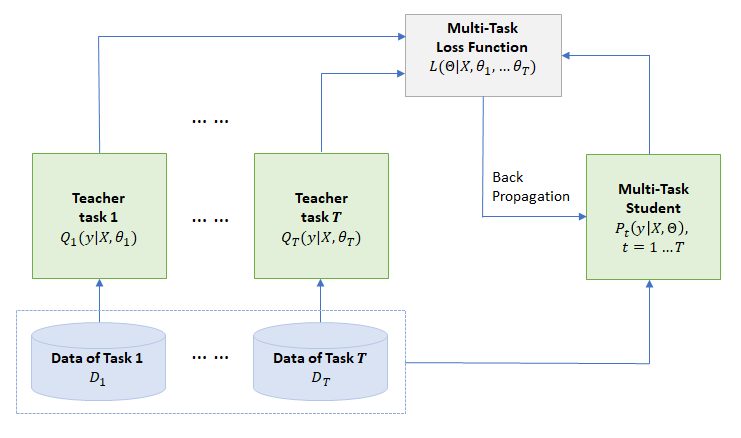

We present MT-DNN, an open-source natural language understanding (NLU) toolkit that makes it easy for researchers and developers to train customized deep learning models. Built upon PyTorch and Transformers, MT-DNN is designed to facilitate rapid customization for a broad spectrum of NLU tasks, using a variety of objectives (classification, regression, structured prediction) and text encoders (e.g., RNNs, BERT, RoBERTa, UniLM). A unique feature of MT-DNN is its built-in support for robust and transferable learning using the adversarial multi-task learning paradigm. To enable efficient production deployment, MT-DNN supports multi-task knowledge distillation, which can substantially compress a deep neural model without a significant drop in performance. We demonstrate the effectiveness of MT-DNN on a wide range of NLU applications across general and biomedical domains. The software and pre-trained models will be publicly available at https://github.com/namisan/mt-dnn.
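To make the multi-task knowledge distillation idea concrete, the following is a minimal sketch in plain Python. It is not MT-DNN's API: the function names (`log_softmax`, `distillation_loss`, `multi_task_distillation_loss`), the temperature parameter, and the choice of a soft-target cross-entropy objective are all assumptions made for illustration. The sketch only shows the general pattern of training a smaller student to match a teacher's output distribution on examples drawn from several tasks.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_total for z in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """Cross-entropy between the teacher's soft targets and the student.

    Hypothetical helper: an assumed soft-target objective, not taken
    from the MT-DNN code base.
    """
    teacher_probs = [math.exp(lp) for lp in log_softmax(teacher_logits, temperature)]
    student_log_probs = log_softmax(student_logits, temperature)
    return -sum(t * s for t, s in zip(teacher_probs, student_log_probs))


def multi_task_distillation_loss(batches, temperature=1.0):
    """Average the soft-target loss over examples from several tasks.

    `batches` maps a task name to a list of
    (student_logits, teacher_logits) pairs for that task.
    """
    losses = [
        distillation_loss(student, teacher, temperature)
        for pairs in batches.values()
        for student, teacher in pairs
    ]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Toy logits for two hypothetical tasks; the values are made up.
    batches = {
        "task_a": [([1.5, 0.2, -0.7], [2.0, 0.5, -1.0])],
        "task_b": [([0.1, 0.9], [-0.3, 1.2])],
    }
    print(multi_task_distillation_loss(batches, temperature=2.0))
```

For a fixed teacher, this loss is smallest when the student's distribution equals the teacher's, which is what pushes the compressed model toward the teacher's behaviour. A higher temperature flattens both distributions so that the student also learns from the teacher's lower-probability classes.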
