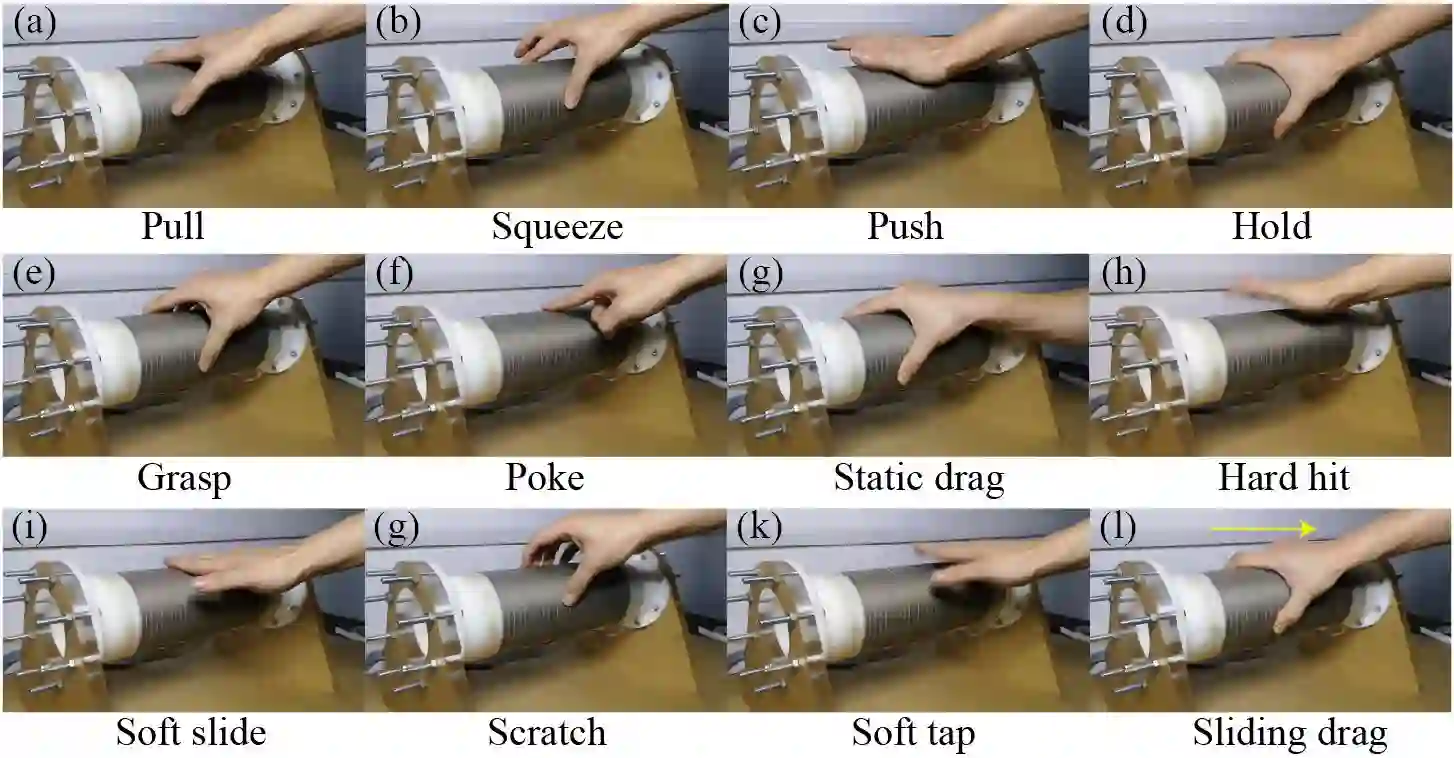

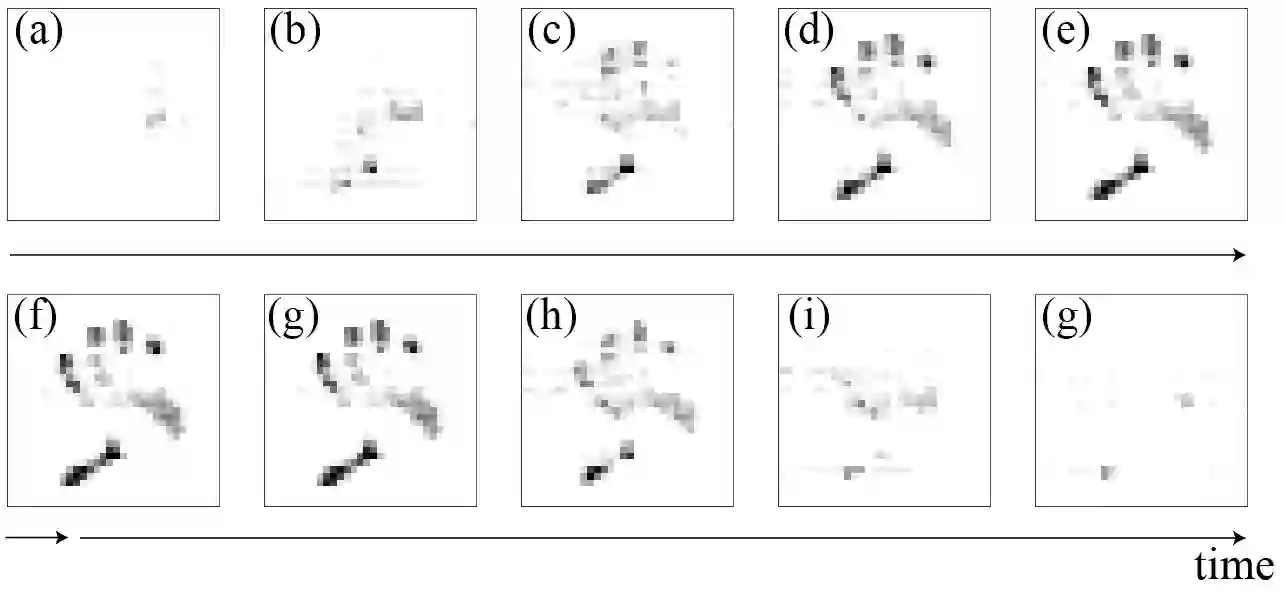

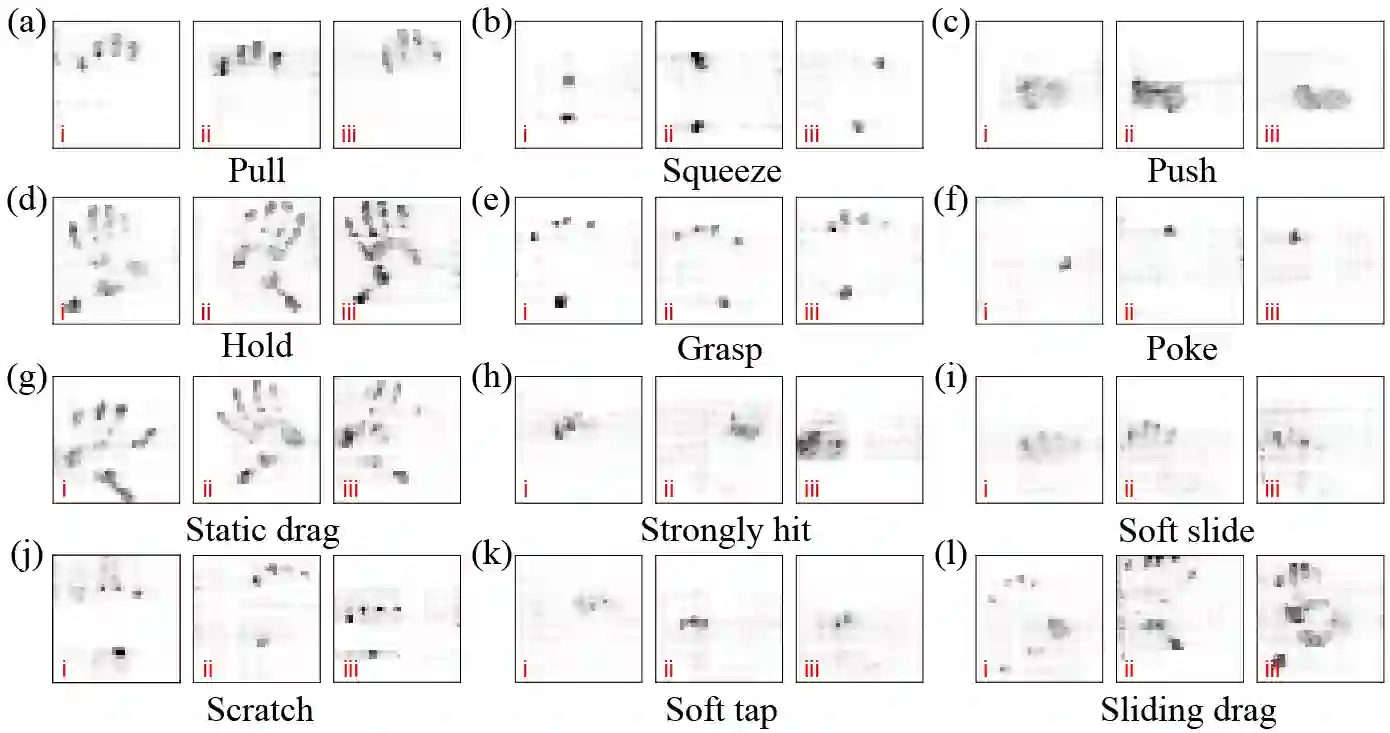

Advanced service robots require superior tactile intelligence to guarantee safety during human contact and to supplement visual and auditory information in human-robot interaction, especially when a robot is in physical contact with a human. Tactile intelligence is the ability to perceive and interpret tactile information. It rests on learning from large amounts of tactile data and on understanding the physical meaning behind that data. This report introduces "TacAct", a recently collected and organized dataset that contains real-time pressure distributions recorded while human subjects touched the arms of a nursing-care robot. The dataset comprises data from 50 subjects who performed a total of 24,000 touch actions. The report also describes the dataset in detail and presents a preliminary analysis of the data. It then tests the validity of the collected information by using the convolutional neural network LeNet-5 to classify different types of touch actions. We believe that the TacAct dataset will benefit the human-interactive robotics community by helping it understand tactile profiles under various circumstances.
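LeNet-5 is a small convolutional network. The sketch below traces how one pressure-distribution frame shrinks as it passes through the classic LeNet-5 layer stack: two 5×5 convolutions, each followed by 2×2 subsampling, then fully connected layers of 120 and 84 units and one output per class. It is an illustration under stated assumptions, not the authors' implementation. The helper names `conv_out` and `lenet5_shapes` are hypothetical. The default 32×32 single-channel input (the original LeNet-5 input size) and the default of 10 touch-action classes are also assumptions, since the abstract does not give TacAct's sensor resolution or class count.

```python
def conv_out(n: int, k: int, stride: int = 1, pad: int = 0) -> int:
    """Side length after a square k x k window slides over an n x n map."""
    return (n + 2 * pad - k) // stride + 1


def lenet5_shapes(side: int = 32, num_classes: int = 10):
    """Trace output shapes through a classic LeNet-5 layer stack.

    Assumes a single-channel side x side pressure frame. `side` and
    `num_classes` are hypothetical defaults, not values from the TacAct paper.
    """
    shapes = []
    h = side
    # C1: 6 filters of 5x5, stride 1, no padding
    h, c = conv_out(h, 5), 6
    shapes.append(("C1", (c, h, h)))
    # S2: 2x2 subsampling with stride 2
    h = conv_out(h, 2, stride=2)
    shapes.append(("S2", (c, h, h)))
    # C3: 16 filters of 5x5
    h, c = conv_out(h, 5), 16
    shapes.append(("C3", (c, h, h)))
    # S4: 2x2 subsampling with stride 2
    h = conv_out(h, 2, stride=2)
    if h < 1:
        raise ValueError(f"input side {side} is too small for LeNet-5")
    shapes.append(("S4", (c, h, h)))
    # Flatten the 16 feature maps before the fully connected layers
    shapes.append(("flatten", (c * h * h,)))
    # C5 (120 units), F6 (84 units), then one output per touch-action class
    for name, units in (("C5", 120), ("F6", 84), ("output", num_classes)):
        shapes.append((name, (units,)))
    return shapes


if __name__ == "__main__":
    for name, shape in lenet5_shapes():
        print(f"{name:8s} {shape}")
```

With the assumed 32×32 input, the feature maps shrink from 28×28 to 14×14, then to 10×10 and 5×5. This gives 400 flattened features that feed the 120- and 84-unit layers. To adapt the sketch to a different tactile sensor grid, change only `side`. The function rejects inputs whose feature maps would vanish before S4.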
