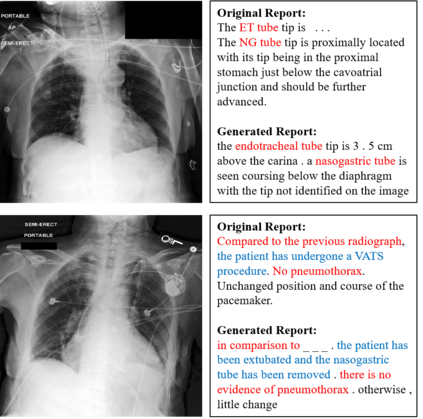

Recently, a number of studies have demonstrated impressive performance on diverse vision-language multi-modal tasks, such as image captioning and visual question answering, by extending the BERT architecture with multi-modal pre-training objectives. In this work, we explore a broad set of multi-modal representation learning tasks in the medical domain, specifically using radiology images and unstructured reports. We propose the Medical Vision Language Learner (MedViLL), which adopts a Transformer-based architecture combined with a novel multi-modal attention masking scheme to maximize generalization performance for both vision-language understanding tasks (image-report retrieval, disease classification, medical visual question answering) and a vision-language generation task (report generation). By rigorously evaluating the proposed model on four downstream tasks with two chest X-ray image datasets (MIMIC-CXR and Open-I), we empirically demonstrate the superior downstream task performance of MedViLL against various baselines, including task-specific architectures.
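
The abstract does not spell out the masking scheme, so the following is only a minimal sketch of the general idea that one Transformer can serve both understanding and generation by switching its self-attention mask. It assumes the input is a sequence of image tokens followed by report tokens, and it contrasts a fully bidirectional mask with a sequence-to-sequence mask in which report tokens see the image and only earlier report tokens. The function name `build_attention_mask`, its parameters, and the two mode names are hypothetical and are not taken from MedViLL.

```python
def build_attention_mask(num_image_tokens, num_text_tokens, mode):
    """Return an n x n 0/1 matrix; mask[i][j] == 1 lets position i attend to j.

    Illustrative assumption, not the paper's scheme: positions
    [0, num_image_tokens) are image tokens, the rest are report tokens.
    """
    if mode not in ("bidirectional", "seq2seq"):
        raise ValueError("mode must be 'bidirectional' or 'seq2seq'")

    n = num_image_tokens + num_text_tokens
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if mode == "bidirectional":
                # Understanding-style mask: every token sees every token.
                mask[i][j] = 1
            elif j < num_image_tokens:
                # seq2seq: image tokens are visible to all positions.
                mask[i][j] = 1
            elif i >= num_image_tokens and j <= i:
                # seq2seq: a report token sees itself and earlier report tokens.
                mask[i][j] = 1
    return mask


if __name__ == "__main__":
    for row in build_attention_mask(2, 3, "seq2seq"):
        print(row)
```

With the sequence-to-sequence mask, image tokens never attend to report tokens, so the report can be generated left to right conditioned on the image; the bidirectional mask instead suits tasks such as retrieval or classification, where the full report is available.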
