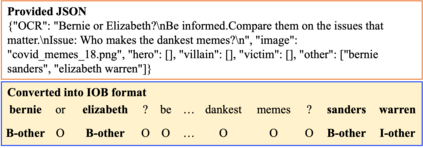

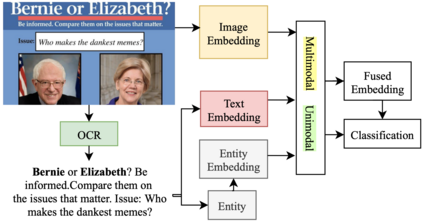

Harmful or abusive online content has been increasing over time, raising concerns for social media platforms, government agencies, and policymakers. Such content can have a major negative impact on society: cyberbullying can lead to suicides, rumors about COVID-19 can cause vaccine hesitancy, and the promotion of fake cures for COVID-19 can cause health harms and deaths. Content posted and shared online can be textual, visual, or a combination of both, as in a meme. Here, we describe our system and experiments for detecting the roles of entities (hero, villain, victim) in harmful memes, which is part of the CONSTRAINT-2022 shared task. We further provide a comparative analysis of different experimental settings (i.e., unimodal, multimodal, attention, and augmentation). For reproducibility, we make our experimental code publicly available at https://github.com/robi56/harmful_memes_block_fusion
