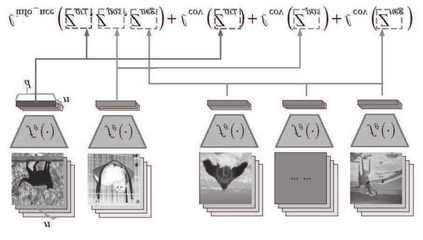

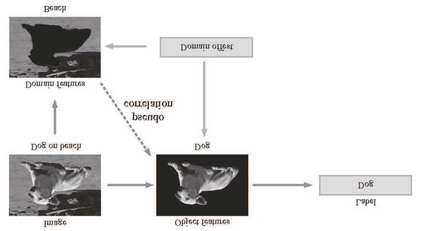

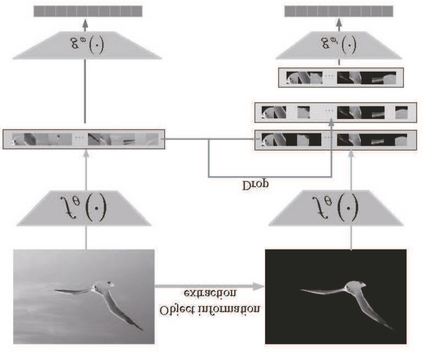

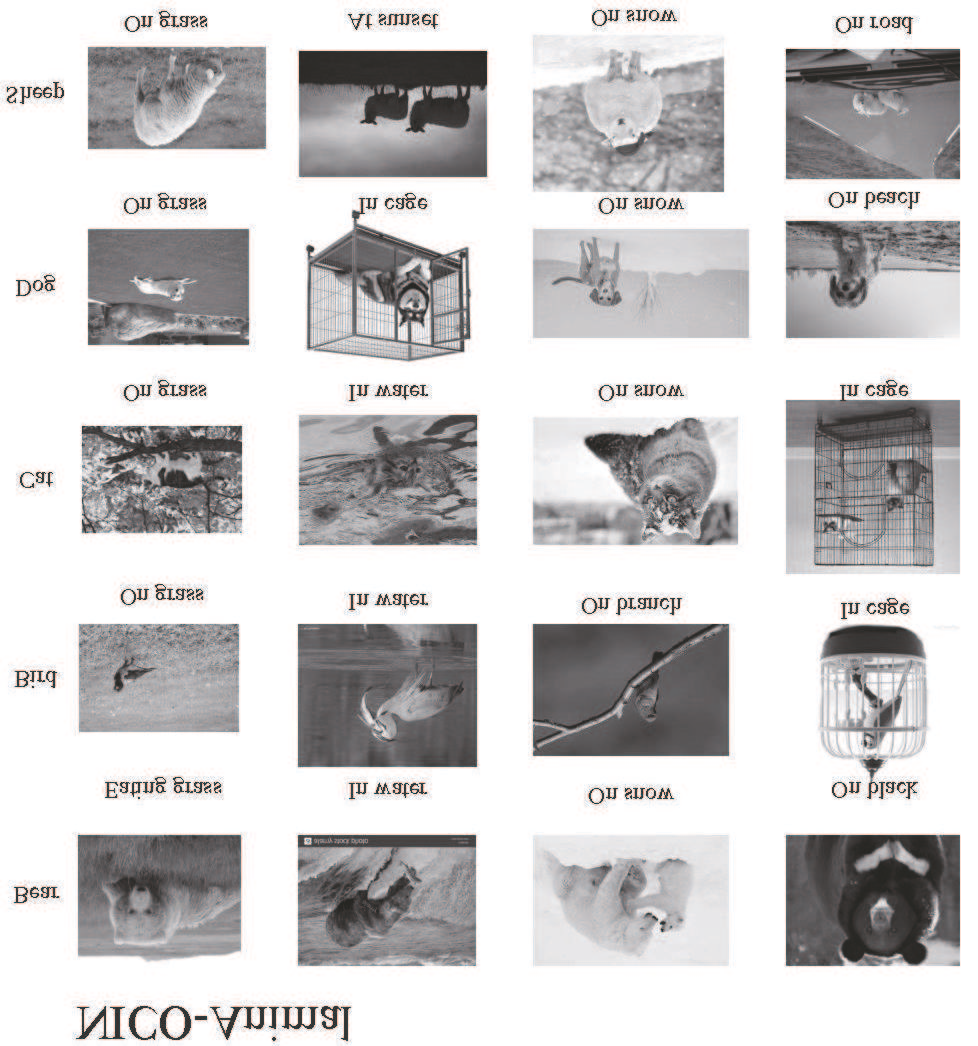

This paper develops a new EiHi net to address the out-of-distribution (OoD) generalization problem in deep learning. EiHi net is a model learning paradigm that can be applied to any visual backbone. The paradigm changes how deep models have previously learned, namely by finding correlations between inductive sample features and their corresponding categories, an approach that suffers from pseudo (spurious) correlations between non-decisive features and labels. We fuse SimCLR and VICReg by explicitly and dynamically establishing the original-positive-negative sample triplet as the minimal learning element. With this element, the deep model iteratively establishes a relationship between features and labels that is close to the causal one, while suppressing pseudo correlations. To further validate the proposed model and strengthen the established causal relationships, we develop a human-in-the-loop strategy that uses a few guidance samples to prune the representation space directly. Finally, we show that EiHi net achieves significant improvements over the current SOTA results on NICO, the most difficult and typical OoD dataset, without using any domain information (e.g., background or irrelevant features).
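To make the original-positive-negative learning element concrete, the following is a minimal sketch of how a SimCLR-style contrastive term and VICReg-style variance and covariance regularizers could be combined over such triplets. It is an illustration under stated assumptions, not the EiHi net objective: the function names, the temperature, the weights `var_weight` and `cov_weight`, and the choice to regularize only the anchor embeddings are all hypothetical and do not come from the abstract.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def triplet_contrastive_loss(anchor, positive, negative, temperature=0.5):
    """SimCLR-style (softmax over similarities) loss for one triplet.

    Low when the anchor is closer to the positive than to the negative.
    The temperature value is an assumption.
    """
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = math.exp(cosine(anchor, negative) / temperature)
    return -math.log(pos / (pos + neg))

def variance_term(batch, gamma=1.0, eps=1e-4):
    """VICReg-style hinge on the per-dimension standard deviation.

    Penalizes dimensions whose std over the batch falls below gamma,
    which discourages collapsed (constant) embeddings.
    """
    n, d = len(batch), len(batch[0])
    total = 0.0
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / (n - 1)
        total += max(0.0, gamma - math.sqrt(var + eps))
    return total / d

def covariance_term(batch):
    """VICReg-style penalty on squared off-diagonal covariance entries."""
    n, d = len(batch), len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(d)]
    total = 0.0
    for i in range(d):
        for j in range(d):
            if i != j:
                cov = sum((row[i] - means[i]) * (row[j] - means[j])
                          for row in batch) / (n - 1)
                total += cov ** 2
    return total / d

def fused_loss(anchors, positives, negatives, var_weight=1.0, cov_weight=0.04):
    """Hypothetical fusion: mean triplet loss plus regularizers on anchors."""
    contrastive = sum(
        triplet_contrastive_loss(a, p, n)
        for a, p, n in zip(anchors, positives, negatives)
    ) / len(anchors)
    return (contrastive
            + var_weight * variance_term(anchors)
            + cov_weight * covariance_term(anchors))

# Toy embeddings: each anchor's positive points the same way, its negative
# points the opposite way.
anchors = [[1.0, 0.0], [0.0, 1.0]]
positives = [[0.9, 0.1], [0.1, 0.9]]
negatives = [[-1.0, 0.0], [0.0, -1.0]]
aligned = fused_loss(anchors, positives, negatives)
swapped = fused_loss(anchors, negatives, positives)
print(aligned < swapped)
```

In this toy setup the loss is lower when positives and negatives are assigned correctly than when they are swapped, which is the behavior a triplet-based objective is meant to reward. In practice the embeddings would come from a visual backbone and the terms would be computed with a tensor library rather than plain lists.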
