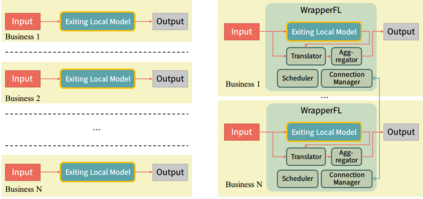

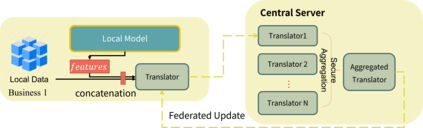

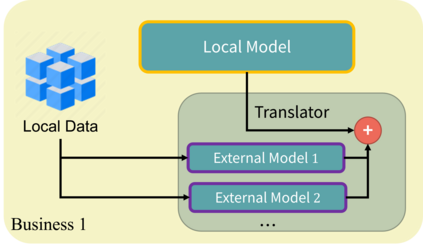

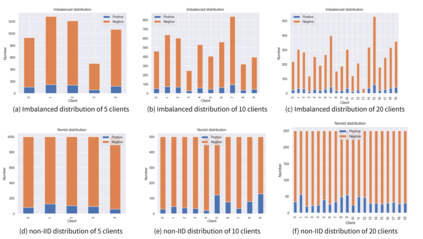

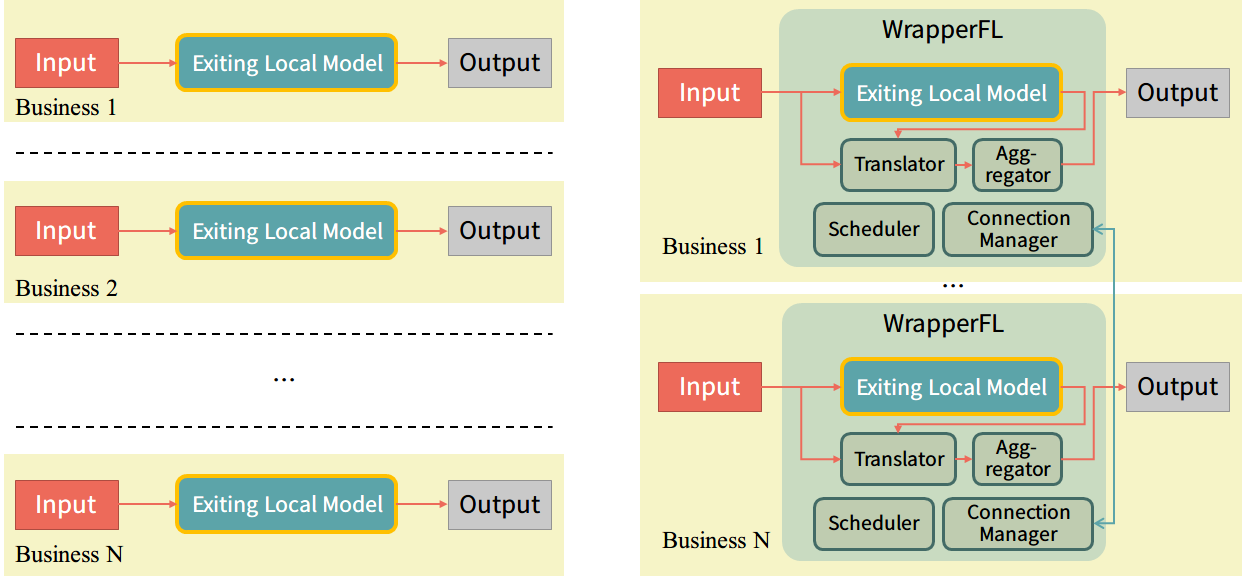

Federated learning, as a privacy-preserving collaborative machine learning paradigm, has been gaining increasing attention in industry. With the rapid rise in demand, many federated learning platforms have emerged that allow federated participants to set up and build a federated model from scratch. However, existing platforms are highly intrusive, complicated, and hard to integrate with machine learning models that have already been built. For many real-world businesses that already have mature models in production, these platforms impose high entry barriers and development costs. This paper presents a simple yet practical federated learning plug-in inspired by ensemble learning, dubbed WrapperFL, which allows participants to build or join a federated system with their existing models at minimal cost. WrapperFL works in a plug-and-play way: it simply attaches to the input and output interfaces of an existing model, without the need for re-development, which significantly reduces the overhead of manpower and resources. We verify the proposed method on diverse tasks under heterogeneous data distributions and heterogeneous models. The experimental results demonstrate that WrapperFL can be successfully applied to a wide range of applications under practical settings and improves the local model through federated learning at a low cost.
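The abstract describes the plug-in only at a high level, so the following is a minimal sketch of the general idea, not WrapperFL's actual algorithm. It assumes the existing model is a black-box function from features to a prediction. A wrapper touches only that input and output, and it combines the local prediction with a component shared across participants using an ensemble-style weighted average. The names `EnsembleWrapper` and `fed_average`, the linear form of the shared component, the size-weighted averaging, and the fixed mixing weight are all hypothetical choices made for illustration.

```python
from __future__ import annotations

from typing import Callable, Sequence

# An existing, already-deployed model: features in, prediction out (treated as a black box).
Predictor = Callable[[Sequence[float]], float]


def fed_average(coefs: list[list[float]], sizes: list[int]) -> list[float]:
    """Hypothetical aggregation step: size-weighted average of each participant's
    shared coefficients (FedAvg-style). This stands in for whatever WrapperFL
    actually shares between participants."""
    total = sum(sizes)
    dim = len(coefs[0])
    return [sum(c[i] * n for c, n in zip(coefs, sizes)) / total for i in range(dim)]


class EnsembleWrapper:
    """Hypothetical plug-in: wraps an existing model without modifying it and
    ensembles its output with a shared, federatedly aggregated linear component."""

    def __init__(self, local_model: Predictor, weight: float = 0.5) -> None:
        if not 0.0 <= weight <= 1.0:
            raise ValueError("weight must be in [0, 1]")
        self.local_model = local_model
        self.weight = weight  # assumed fixed mixing weight for the local prediction
        self.shared: list[float] = []  # shared coefficients received after aggregation

    def set_shared(self, coef: Sequence[float]) -> None:
        # Install the aggregated coefficients; the local model is never retrained here.
        self.shared = list(coef)

    def predict(self, x: Sequence[float]) -> float:
        local = self.local_model(x)  # unchanged call into the existing model
        shared = sum(c * xi for c, xi in zip(self.shared, x))
        # Ensemble-style weighted average of the two predictions.
        return self.weight * local + (1.0 - self.weight) * shared
```

In this sketch, a participant whose existing model computes `2.0 * x[0]` can wrap it, receive `fed_average([[1.0, 0.0], [3.0, 0.0]], [1, 3])` (which yields `[2.5, 0.0]`), and then predict on `[1.0, 2.0]` to get `0.5 * 2.0 + 0.5 * 2.5 = 2.25`. The existing model's code path is untouched, which is the property the abstract emphasizes.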