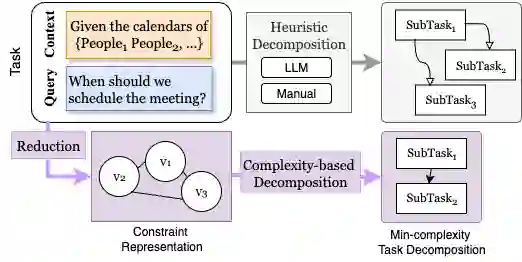

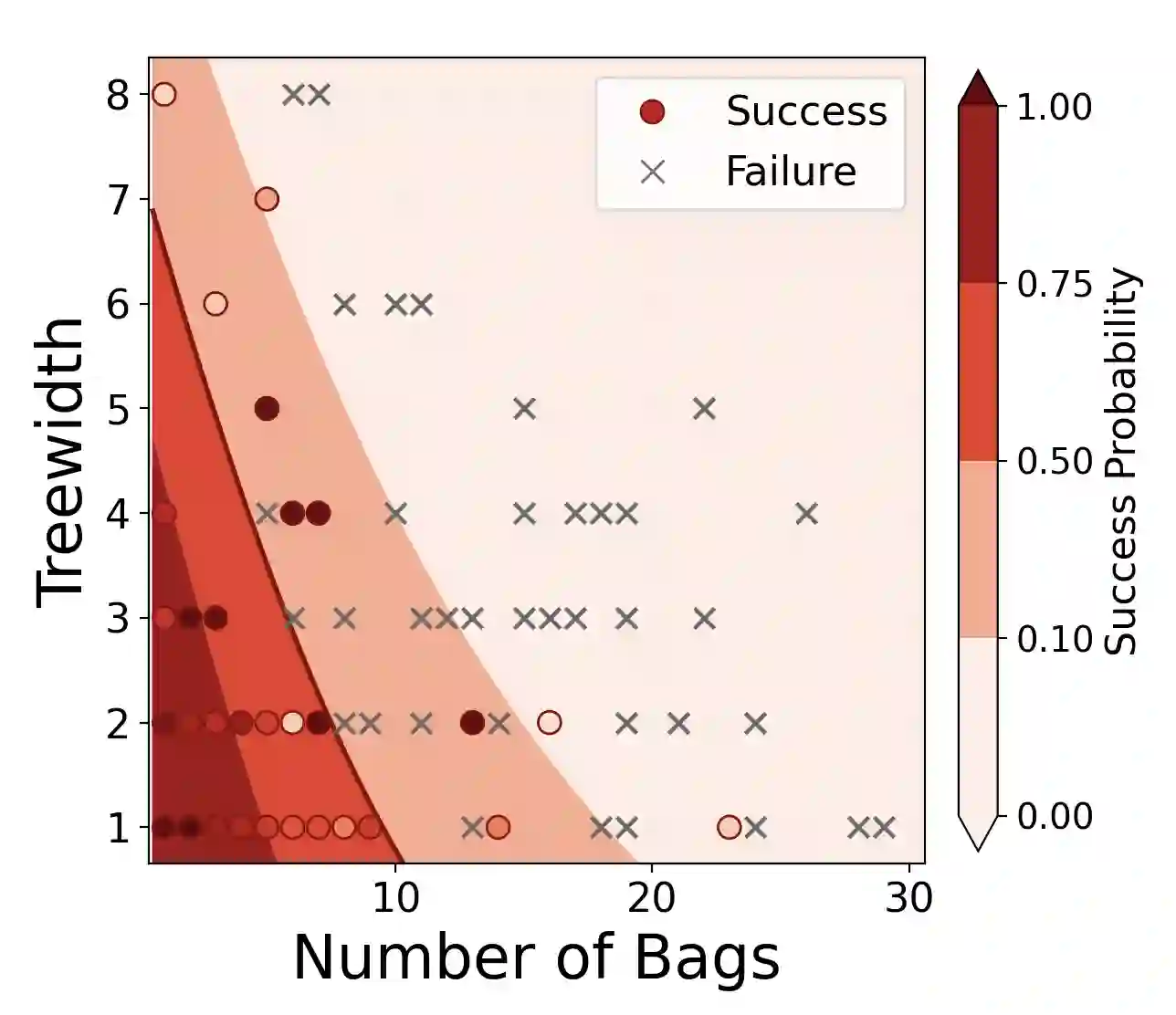

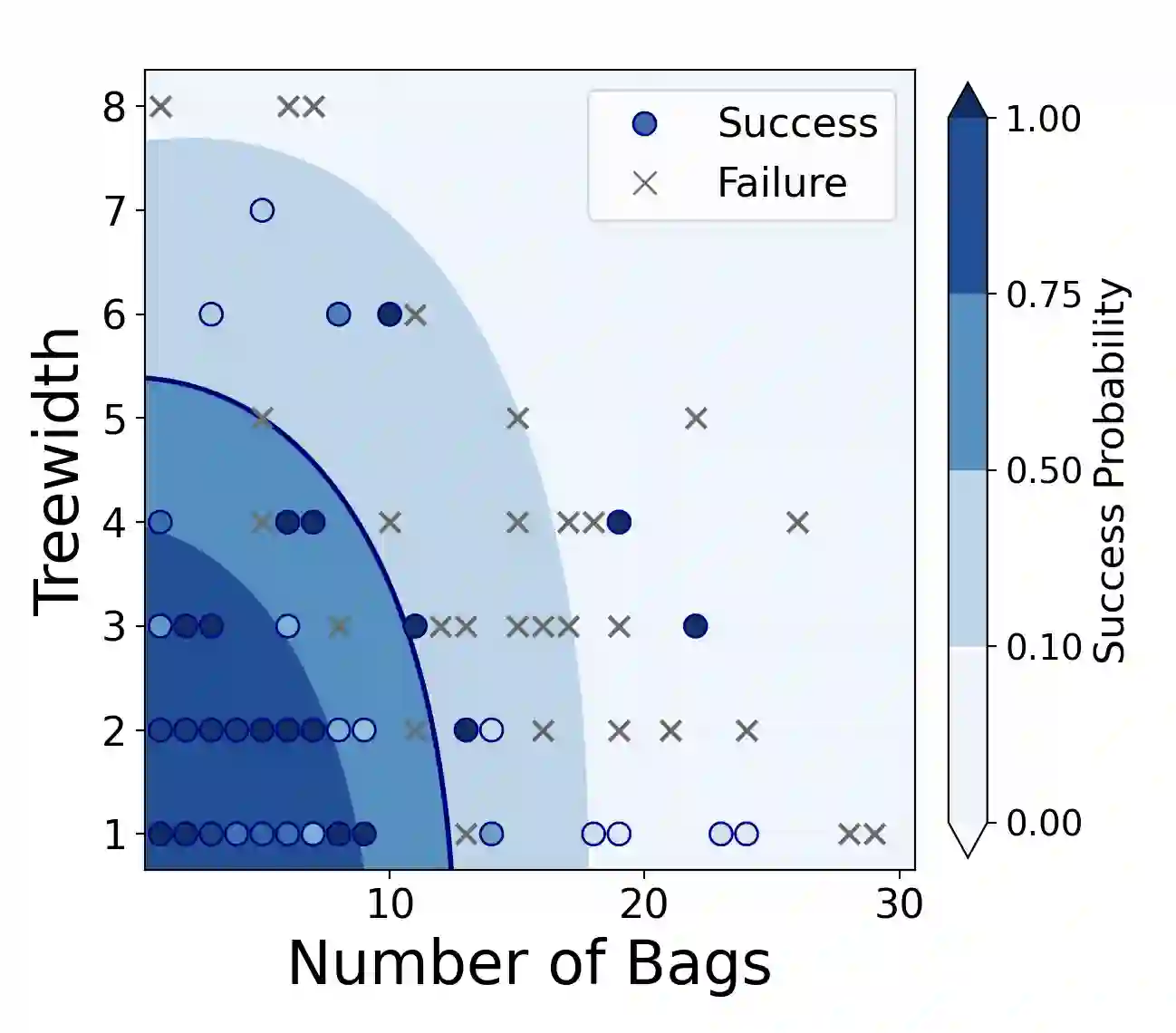

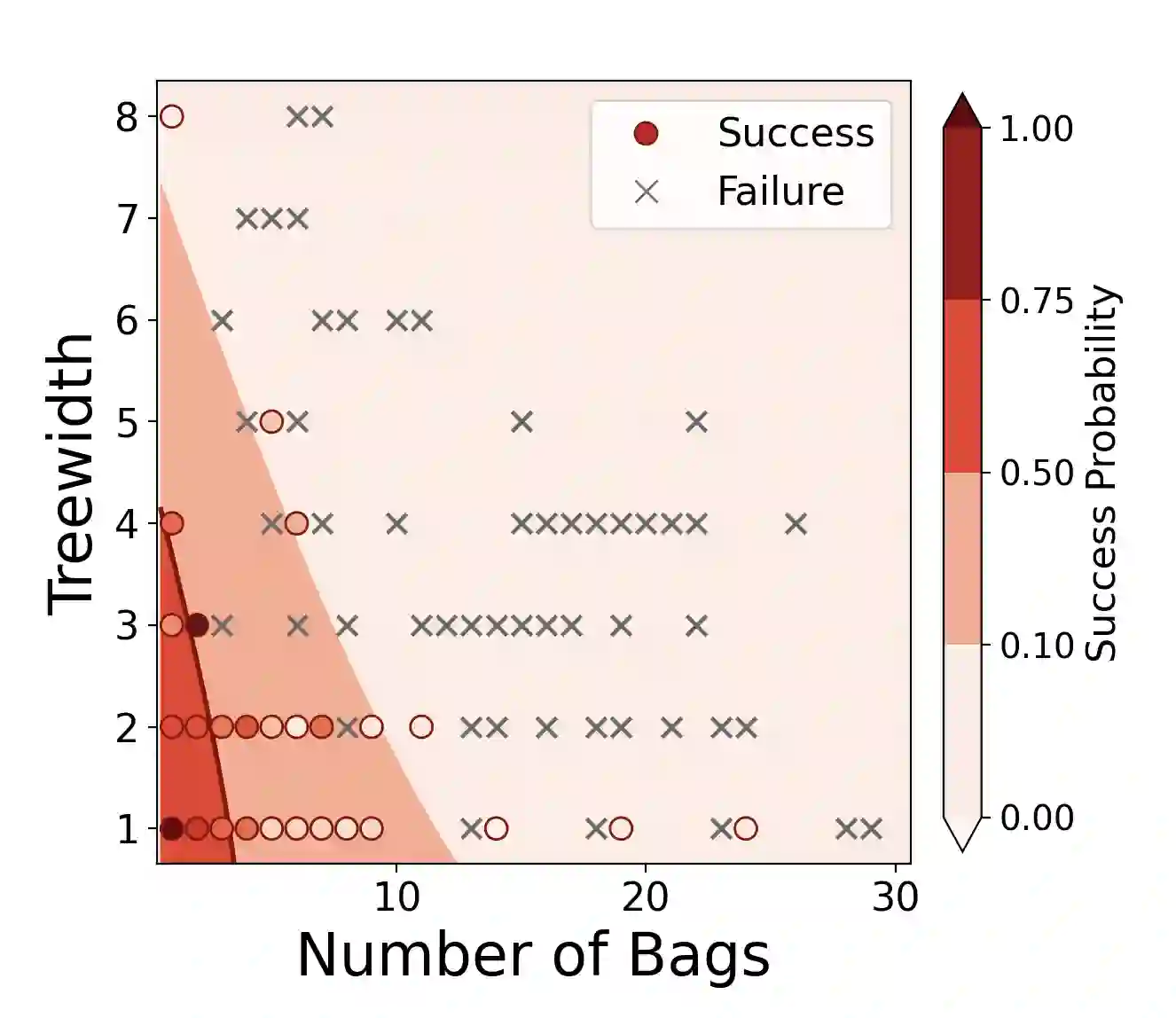

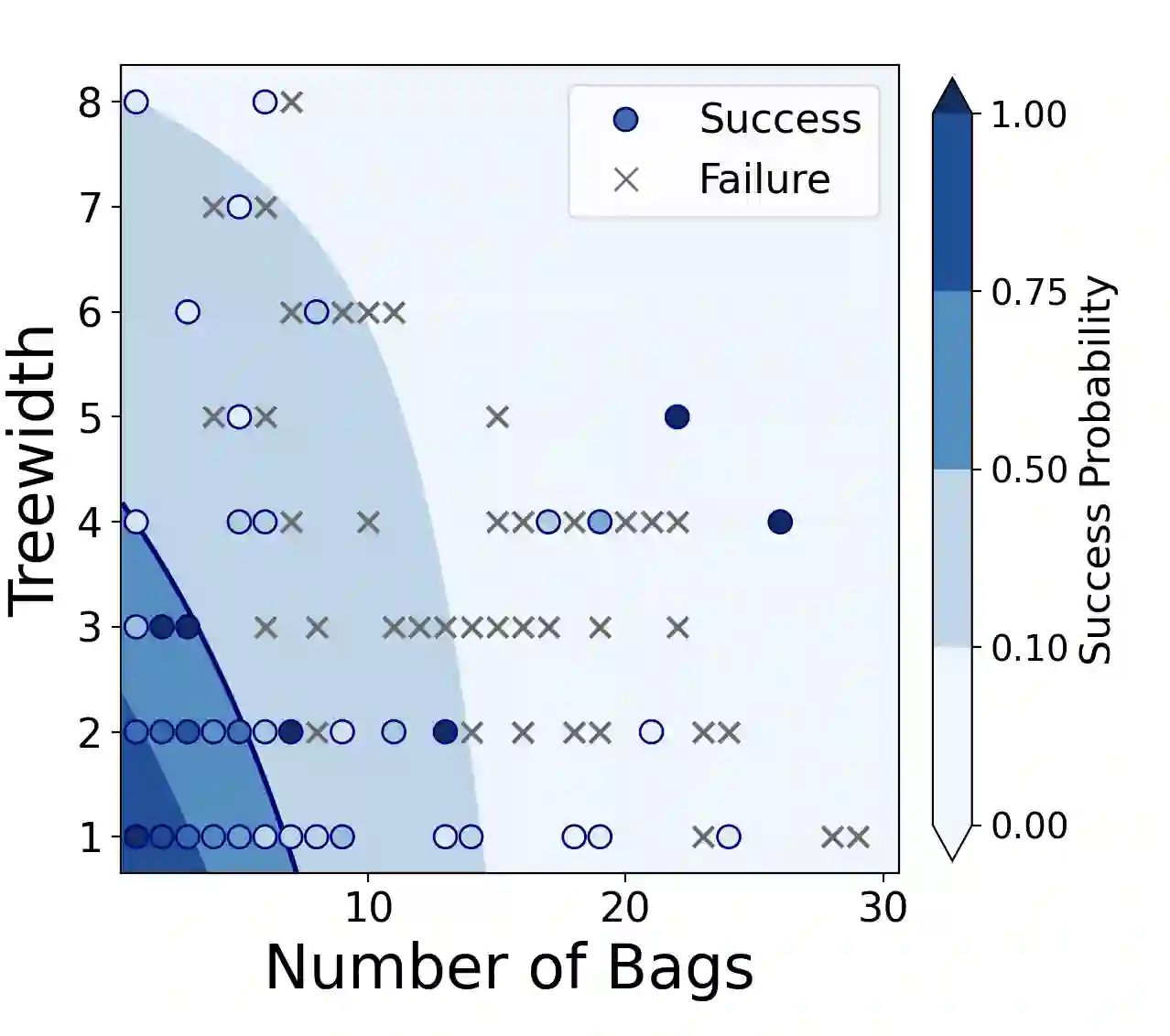

Large Language Models (LLMs) are unreliable on complex tasks, and existing decomposition methods are heuristic, relying on either agent-driven or manual decomposition. This work introduces a systematic decomposition framework, Analysis of CONstraint-Induced Complexity (ACONIC), which models a task as a constraint problem and uses formal complexity measures to guide its decomposition. On combinatorial tasks (SATBench) and LLM database querying tasks (Spider), decomposing tasks according to the complexity measure improves agent performance by 10 to 40 percentage points.
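To make the idea of complexity-guided decomposition concrete, here is a minimal Python sketch for a SAT-style task. The abstract does not name the complexity measure, so the sketch assumes one: a treewidth-style width of the variable-interaction (primal) graph, estimated with a min-degree elimination heuristic. The function names `primal_graph`, `width_upper_bound`, and `components` are hypothetical and are not taken from the ACONIC implementation.

```python
from itertools import combinations


def primal_graph(clauses):
    """Map each variable to the set of variables it shares a clause with."""
    graph = {}
    for clause in clauses:
        variables = {abs(lit) for lit in clause}
        for v in variables:
            graph.setdefault(v, set())
        for a, b in combinations(variables, 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def width_upper_bound(graph):
    """Assumed complexity measure: min-degree elimination width.

    Repeatedly removes a lowest-degree vertex and connects its neighbours.
    The largest neighbourhood seen is an upper bound on the treewidth.
    """
    g = {v: set(nbrs) for v, nbrs in graph.items()}
    width = 0
    while g:
        v = min(g, key=lambda u: (len(g[u]), u))
        nbrs = g.pop(v)
        width = max(width, len(nbrs))
        for a in nbrs:
            g[a].discard(v)
        for a, b in combinations(nbrs, 2):
            g[a].add(b)
            g[b].add(a)
    return width


def components(graph):
    """Split variables into groups that share no clause (independent subtasks)."""
    seen, parts = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, part = [start], set()
        while stack:
            v = stack.pop()
            if v in part:
                continue
            part.add(v)
            stack.extend(graph[v] - part)
        seen |= part
        parts.append(part)
    return parts
```

For the clauses `[(1, -2), (2, 3), (-1, 3), (4, -5)]`, the primal graph is a triangle on variables 1, 2, 3 plus a separate edge between 4 and 5. The sketch reports a width bound of 2 and two independent components, so a decomposer following this measure could hand the two groups to the agent as separate, smaller subtasks.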
