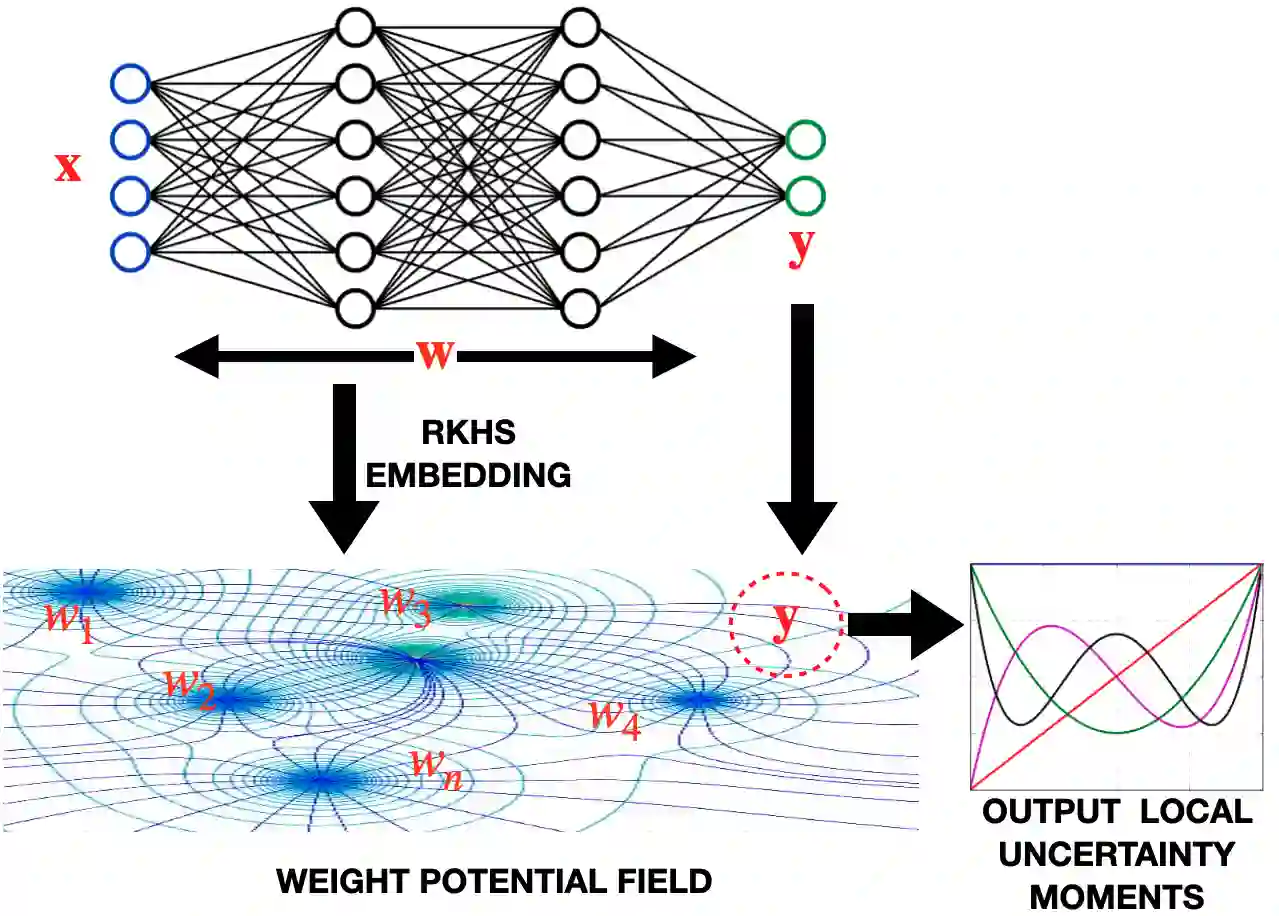

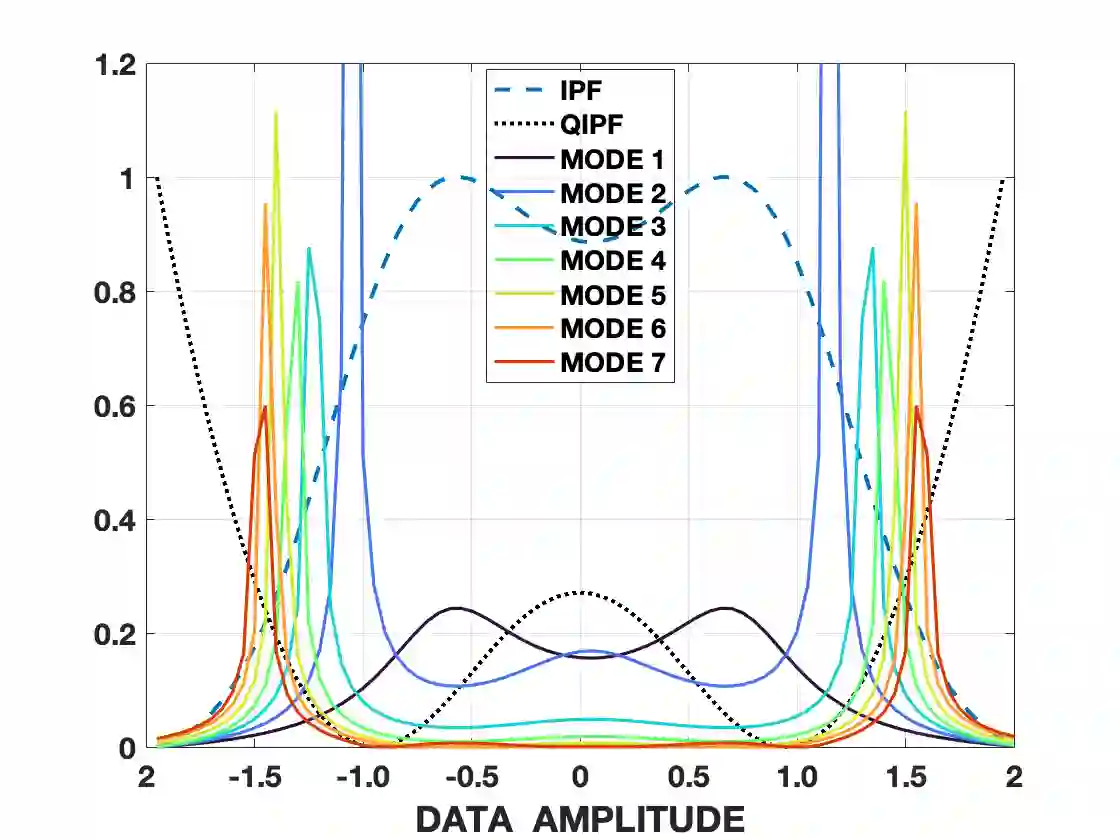

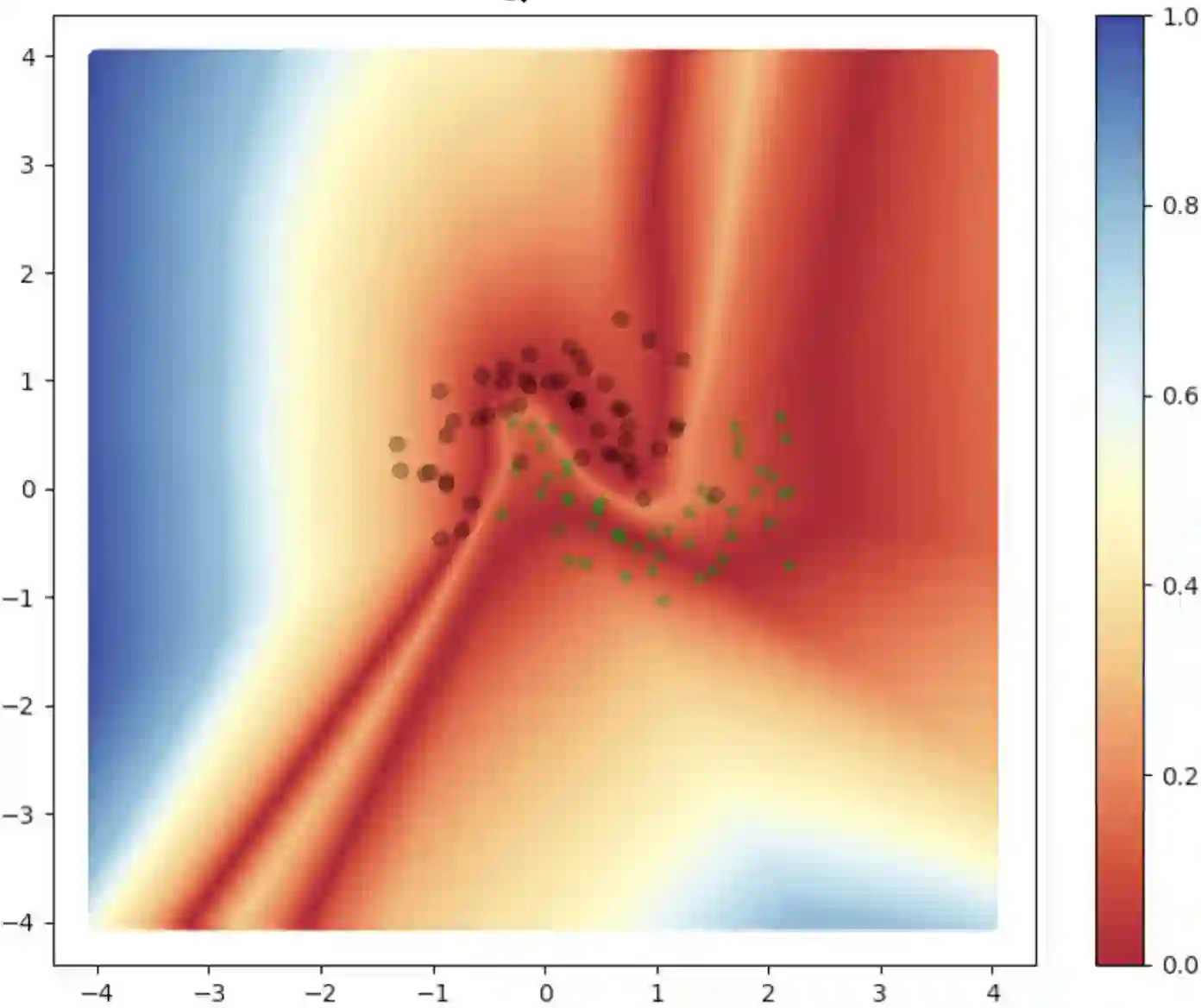

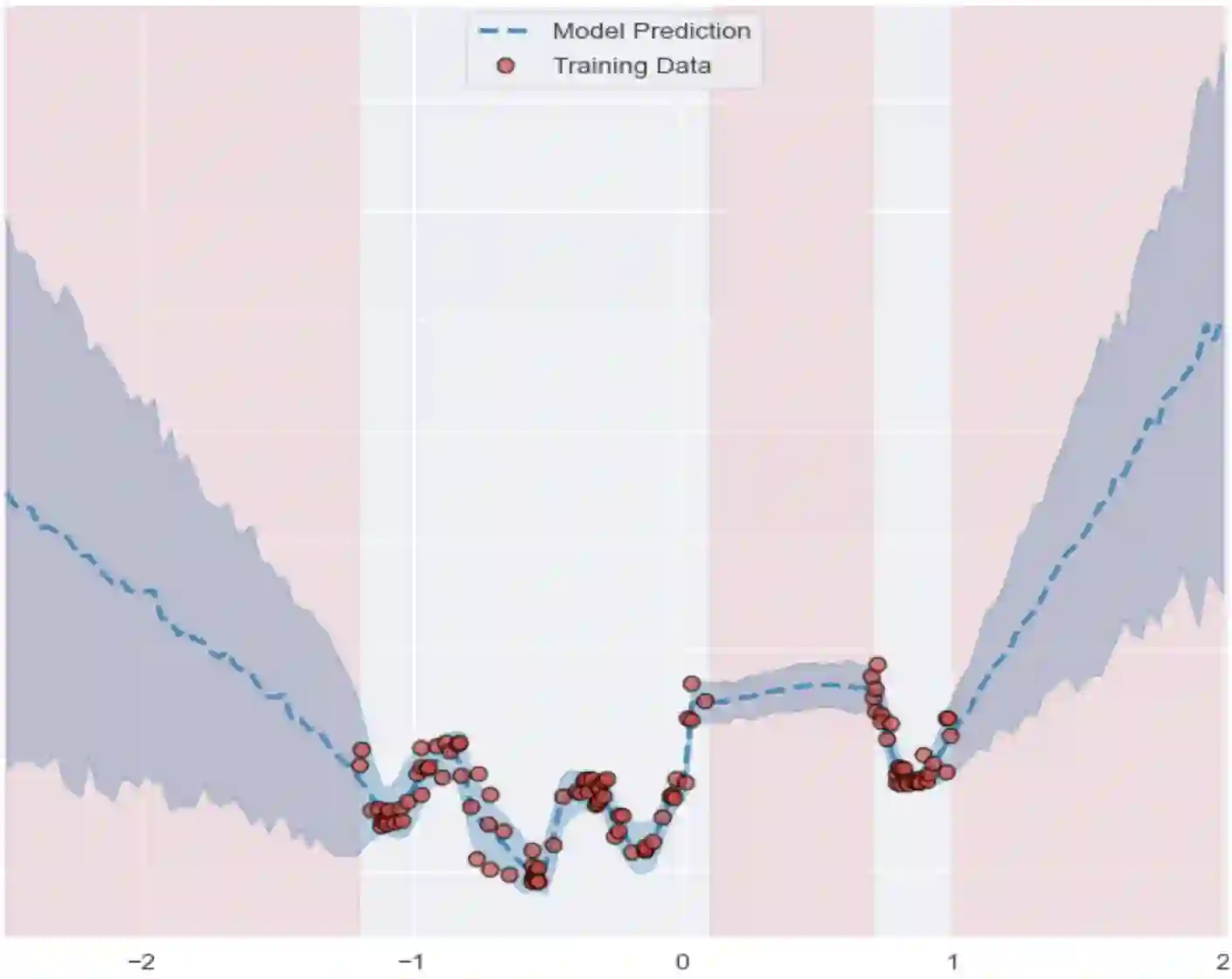

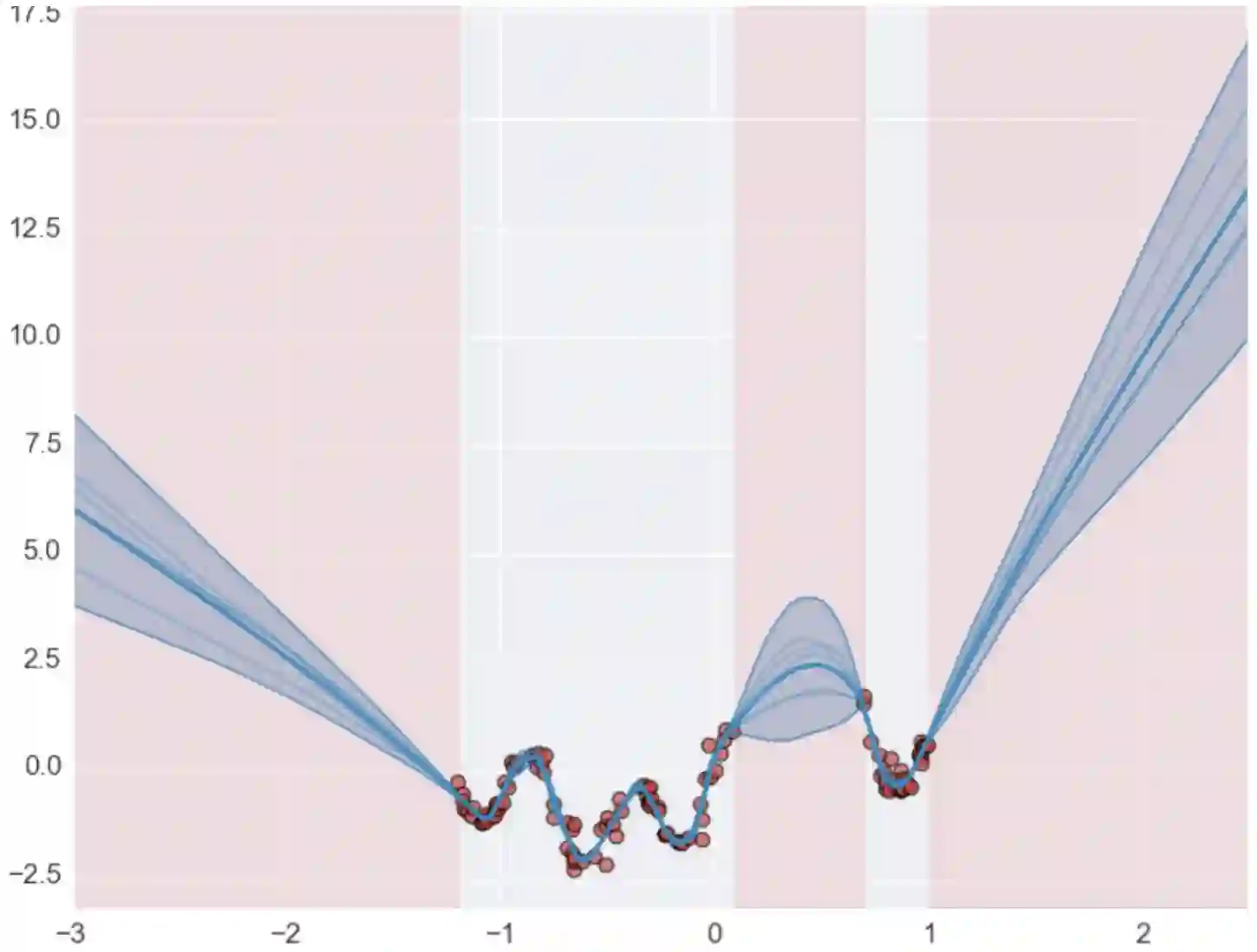

We propose a framework for single-shot predictive uncertainty quantification of a neural network that replaces the conventional Bayesian notion of a weight probability density function (PDF) with a functional defined on the model weights in a reproducing kernel Hilbert space (RKHS). The resulting RKHS-based analysis yields a potential-field interpretation of the model weight PDF, which allows us to use a perturbation-theory approach in the RKHS to formulate a moment decomposition problem over the model weight-output uncertainty. We show that the moments extracted by this approach automatically decompose the weight PDF in the local neighborhood of the specified model output and determine, with great sensitivity, the local heterogeneity and anisotropy of the weight PDF around a given model prediction. Consequently, these functional moments provide much sharper estimates of model predictive uncertainty than the central stochastic moments characterized by Bayesian and ensemble methods. We demonstrate this experimentally by evaluating the error detection capability of the uncertainty quantification methods on test data that have undergone a covariate shift away from the training PDF learned by the model. We find our proposed uncertainty measure to be significantly more precise and better calibrated than baseline methods on various benchmark datasets, while also being much faster to compute.
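As a rough illustration of what evaluating error detection capability can look like in practice, the sketch below scores how well a per-sample uncertainty value ranks misclassified test samples above correctly classified ones. The abstract does not name the metric it uses; the choice of AUROC here, the function name `error_detection_auroc`, and the toy inputs are all assumptions made for illustration, not the authors' evaluation protocol.

```python
import numpy as np


def error_detection_auroc(uncertainty, is_error):
    """AUROC for ranking misclassified samples above correct ones.

    Hypothetical helper (not from the paper): `uncertainty` holds one score
    per test sample, `is_error` marks samples the model got wrong.
    """
    u = np.asarray(uncertainty, dtype=float)
    e = np.asarray(is_error, dtype=bool)
    n_pos, n_neg = int(e.sum()), int((~e).sum())
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one error and one correct prediction")

    # Assign 1-based ranks; tied scores share their average rank.
    order = np.argsort(u, kind="mergesort")
    sorted_u = u[order]
    ranks = np.empty(len(u), dtype=float)
    i = 0
    while i < len(u):
        j = i
        while j + 1 < len(u) and sorted_u[j + 1] == sorted_u[i]:
            j += 1
        ranks[order[i:j + 1]] = 0.5 * (i + j) + 1.0
        i = j + 1

    # Mann-Whitney U statistic, normalised to [0, 1].
    u_stat = ranks[e].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u_stat / (n_pos * n_neg))


if __name__ == "__main__":
    # Toy values, made up for illustration only.
    scores = [0.10, 0.40, 0.35, 0.80]
    wrong = [0, 0, 1, 1]
    print(error_detection_auroc(scores, wrong))
```

A value near 1 means the uncertainty score separates errors from correct predictions well, while 0.5 corresponds to a score that carries no information about errors. Under this kind of protocol, the same function would be applied to each uncertainty method on the covariate-shifted test set so that the methods can be compared on equal terms.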
