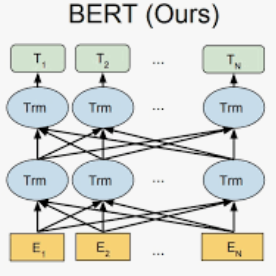

Many extractive question answering models are trained to predict the start and end positions of answers. Predicting answers as positions is popular mainly because it is simple and effective. In this study, we hypothesize that when the distribution of answer positions in the training set is highly skewed (e.g., answers lie only in the k-th sentence of each passage), QA models that predict answers as positions can learn spurious positional cues and fail to find answers at other positions. We first illustrate this position bias in popular extractive QA models such as BiDAF and BERT, and thoroughly examine how position bias propagates through each layer of BERT. To convey position information safely without introducing position bias, we train models with various de-biasing methods, including entropy regularization and bias ensembling. Among them, we find that using the prior distribution of answer positions as a bias model is very effective at reducing position bias: it recovers the performance of BERT from 37.48% to 81.64% when trained on a biased SQuAD dataset.
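The skewed training condition in the hypothesis can be sketched as a filter that keeps only examples whose answer starts in the k-th sentence of the passage. This is a minimal sketch under stated assumptions: the flat `context`/`answer_start` example fields, the helper names (`split_sentences`, `answer_sentence_index`, `biased_subset`), and the regex sentence splitter are hypothetical simplifications, not the authors' preprocessing code.

```python
import re


def split_sentences(context):
    """Naive sentence splitter returning (start_char, sentence) pairs.
    Assumption: a real pipeline would use a proper sentence tokenizer."""
    return [(m.start(), m.group()) for m in re.finditer(r"[^.!?]+[.!?]*\s*", context)]


def answer_sentence_index(context, answer_start):
    """1-based index of the sentence containing character offset `answer_start`."""
    index = 0
    for i, (start, _) in enumerate(split_sentences(context), start=1):
        if start > answer_start:
            break
        index = i
    return index


def biased_subset(examples, k):
    """Keep only the examples whose answer starts in the k-th sentence."""
    return [ex for ex in examples
            if answer_sentence_index(ex["context"], ex["answer_start"]) == k]


# Hypothetical toy examples sharing one three-sentence passage.
ctx = "A cat sat. The dog ran! End"
examples = [
    {"context": ctx, "answer_start": 2},   # "cat", sentence 1
    {"context": ctx, "answer_start": 15},  # "dog", sentence 2
    {"context": ctx, "answer_start": 24},  # "End", sentence 3
]
print(biased_subset(examples, k=1))  # only the "cat" example survives
```

Applied to a full training set, `biased_subset(train, k=1)` would yield the kind of skewed answer-position distribution the hypothesis describes.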

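The prior-based bias model can be sketched as a product of experts: the empirical distribution of gold answer positions in the biased training set serves as the bias model, and its log-probabilities are added to the QA model's log-probabilities before the training loss is computed. This is a minimal sketch assuming the bias-product style of ensembling, add-one smoothing of the prior, and a single position distribution (start and end positions would each get their own prior in practice). The function names are hypothetical, and the code is not the authors' implementation.

```python
import math
from collections import Counter


def position_prior(gold_positions, seq_len, smoothing=1.0):
    """Empirical distribution of gold answer positions, with add-`smoothing`
    counts so that no position gets zero probability (an assumption here)."""
    counts = Counter(gold_positions)
    total = len(gold_positions) + smoothing * seq_len
    return [(counts.get(i, 0) + smoothing) / total for i in range(seq_len)]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def cross_entropy(logits, gold):
    """Standard negative log-likelihood of the gold position."""
    return -log_softmax(logits)[gold]


def bias_product_loss(logits, prior, gold):
    """Training loss for the product of the QA model and the prior bias model.

    log p_model + log p_prior is renormalized with another log-softmax, so
    examples the positional prior already explains contribute less loss.
    At inference, the model's own logits are used without the prior.
    """
    combined = [lp + math.log(pb) for lp, pb in zip(log_softmax(logits), prior)]
    return -log_softmax(combined)[gold]


# Toy biased training set: most answers start at position 0.
prior = position_prior([0, 0, 0, 0, 1], seq_len=4)
uniform_logits = [0.0, 0.0, 0.0, 0.0]
print(bias_product_loss(uniform_logits, prior, gold=0))  # lower than plain CE
print(bias_product_loss(uniform_logits, prior, gold=2))  # higher than plain CE
```

With uniform logits, an example whose answer sits at the frequent position 0 yields a lower loss than plain cross-entropy, while one at a rare position yields a higher loss, so training emphasizes what the positional prior cannot explain.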