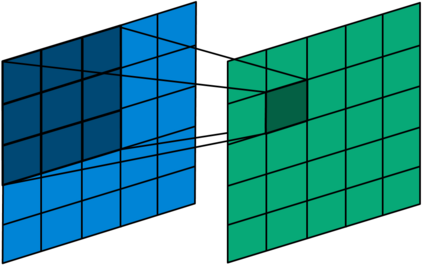

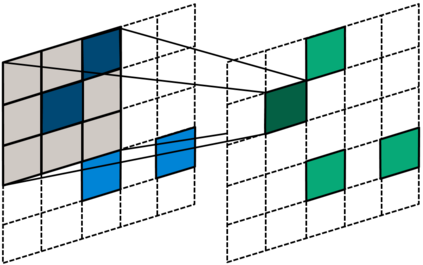

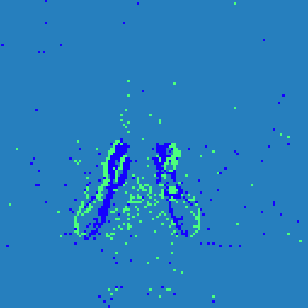

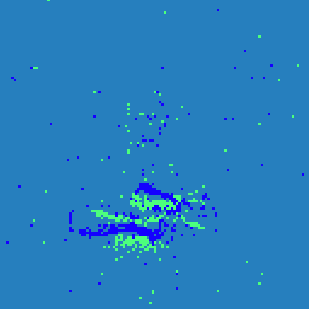

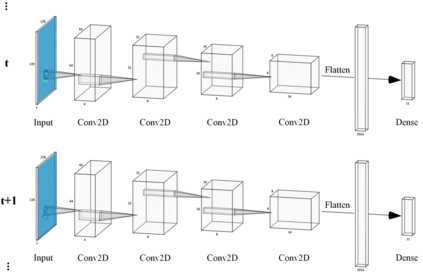

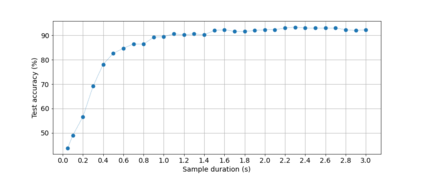

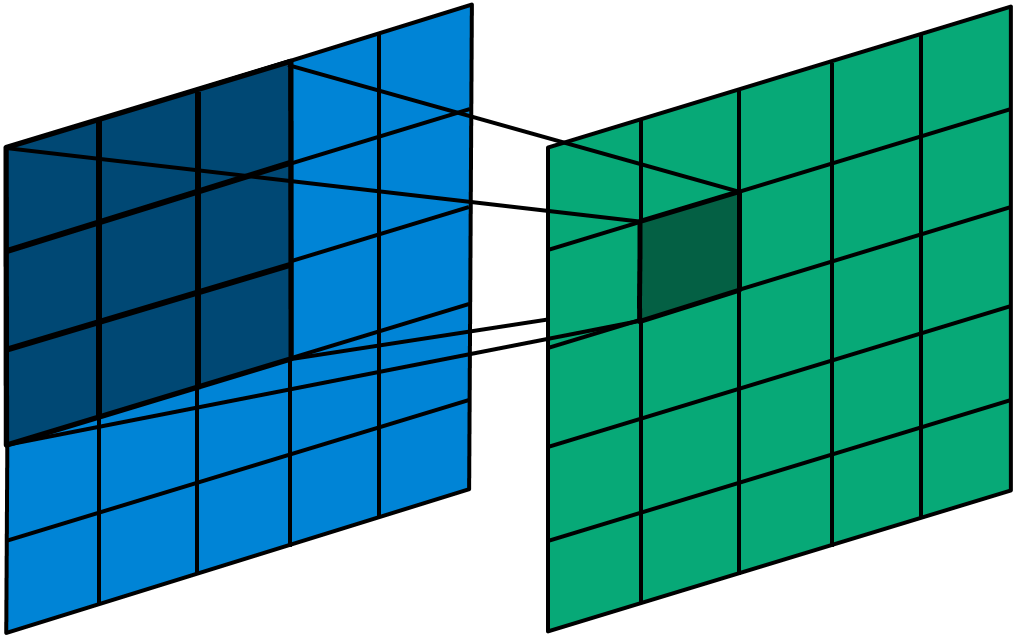

Convolutional neural networks (CNNs) are now the de facto solution for computer vision problems thanks to their impressive results and ease of learning. These networks are composed of layers of connected units called artificial neurons, which loosely model the neurons in a biological brain. However, their implementation on conventional hardware (CPU/GPU) results in high power consumption, making their integration into embedded systems difficult. In a car, for example, embedded algorithms face strict constraints in terms of energy, latency, and accuracy. To design more efficient computer vision algorithms, we propose to follow an end-to-end biologically inspired approach using event cameras and spiking neural networks (SNNs). Event cameras output asynchronous and sparse events, providing a highly efficient data source, but processing these events with synchronous and dense algorithms such as CNNs does not yield any significant benefit. To address this limitation, we use SNNs, which are more biologically realistic neural networks whose units communicate using discrete spikes. Due to the nature of their operations, they are hardware-friendly and energy-efficient, but training them remains a challenge. Our method enables the training of sparse spiking convolutional neural networks directly on event data, using the popular deep learning framework PyTorch. The performance in terms of accuracy, sparsity, and training time on the popular DVS128 Gesture Dataset makes it possible to use this bio-inspired approach for the future embedding of real-time applications on low-power neuromorphic hardware.
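To make the idea of units that "communicate using discrete spikes" concrete, the following is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron driven by a sparse input sequence. It is not the method of the paper: the neuron model, the leak factor `beta`, the `threshold`, the reset-by-subtraction rule, and the toy input values are all assumptions chosen for illustration, and the sketch uses plain Python rather than PyTorch and does not cover training.

```python
from typing import List


def lif_neuron(inputs: List[float], beta: float = 0.9,
               threshold: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    `beta` (leak factor) and `threshold` are illustrative values,
    not parameters taken from the paper.
    """
    membrane = 0.0          # membrane potential, starts at rest
    spikes = []
    for current in inputs:
        # Leak the previous potential, then integrate the new input.
        membrane = beta * membrane + current
        if membrane >= threshold:
            spikes.append(1)        # emit a discrete spike
            membrane -= threshold   # soft reset (assumed reset rule)
        else:
            spikes.append(0)        # stay silent
    return spikes


# Toy sparse input: most time steps carry no event at all.
events = [0.0, 0.6, 0.0, 0.6, 0.0, 0.0, 1.2, 0.0]
spikes = lif_neuron(events)

# Fraction of time steps on which the neuron emits nothing.
sparsity = 1 - sum(spikes) / len(spikes)
print(spikes, sparsity)
```

In this toy run the neuron fires on only two of the eight time steps, which illustrates why sparse activity can translate into fewer operations on hardware that only does work when a spike occurs.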
