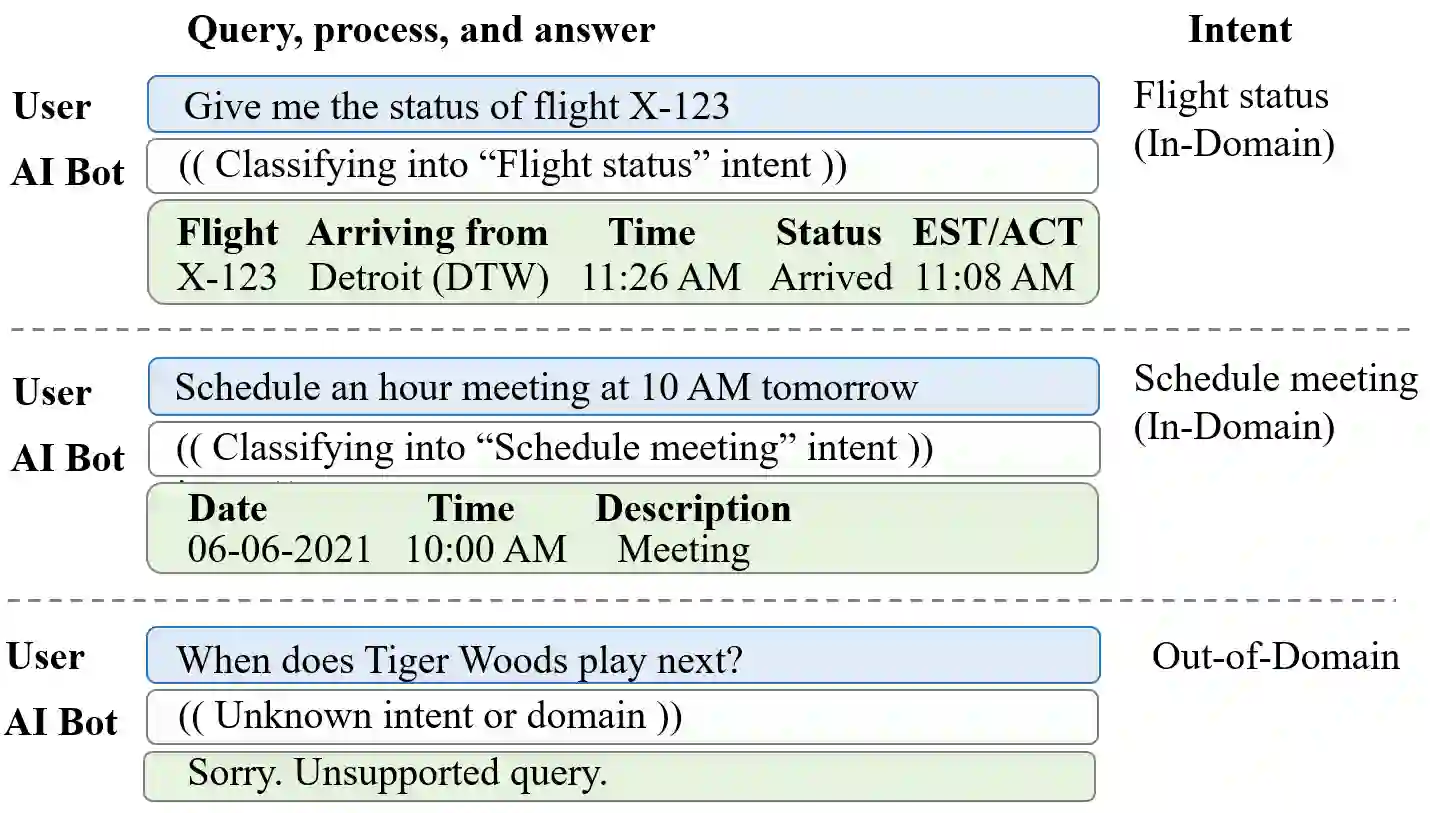

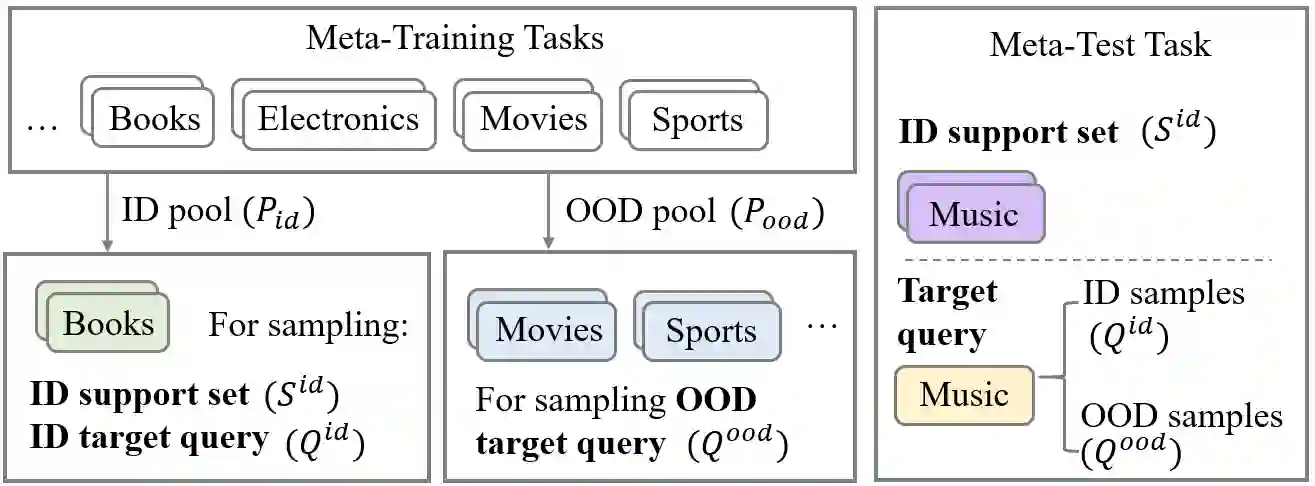

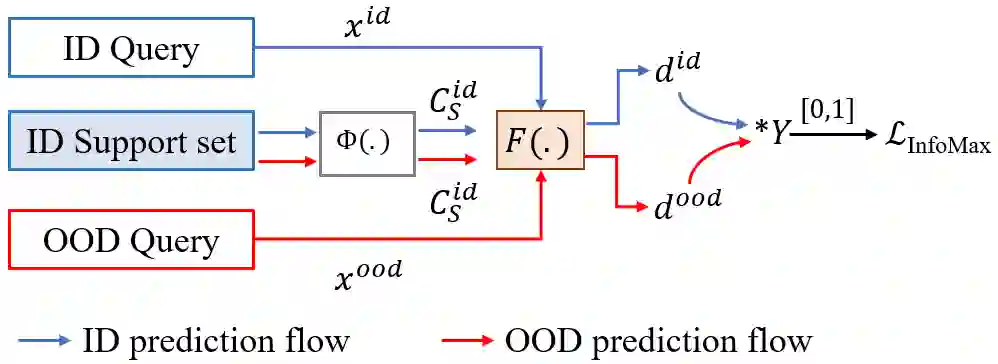

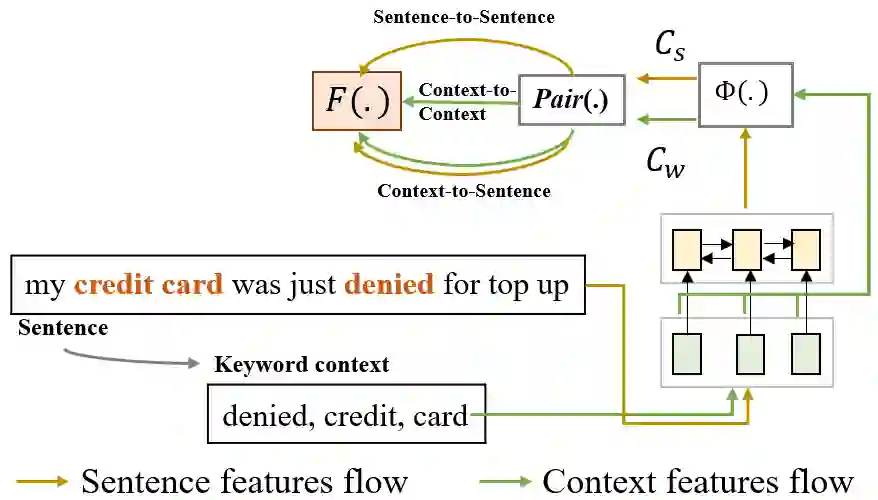

The ability to detect Out-of-Domain (OOD) inputs is a critical requirement in many real-world NLP applications, such as intent classification in dialogue systems, because accepting unsupported OOD inputs may lead to catastrophic system failures. However, it remains an empirical question whether current methods can tackle this problem reliably in a realistic scenario where no OOD training data is available. In this study, we propose ProtoInfoMax, a new architecture that extends Prototypical Networks to simultaneously process in-domain and OOD sentences via a Mutual Information Maximization (InfoMax) objective. Experimental results show that our proposed method substantially improves OOD detection performance, by up to 20%, in low-resource text classification settings. We also show that ProtoInfoMax is less prone to the overconfidence errors typical of neural networks, leading to more reliable predictions.
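The abstract only outlines the method, so the following is a minimal sketch of the general ideas it builds on, not the authors' implementation. It assumes sentences are already encoded as vectors (toy 2-D vectors stand in for a real sentence encoder), builds Prototypical-Network class prototypes as the mean of each class's support embeddings, and rejects a query as OOD when its best cosine similarity to any prototype falls below a threshold. The InfoMax part is illustrated with an InfoNCE-style contrastive loss, a common tractable surrogate for maximizing mutual information; the paper's exact objective may differ. All function names, the cosine similarity, the `threshold`, and the `temperature` are hypothetical choices.

```python
import math
from typing import Dict, List, Optional, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Average equal-length vectors; in a Prototypical Network this is the class prototype."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity (assumed similarity function; returns 0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def build_prototypes(support: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Map each in-domain label to the mean embedding of its support examples."""
    return {label: mean_vector(vecs) for label, vecs in support.items()}


def classify_or_reject(
    query: Vector, prototypes: Dict[str, List[float]], threshold: float
) -> Optional[str]:
    """Return the closest label, or None (treated as OOD) if no prototype is similar enough."""
    scores = {label: cosine(query, proto) for label, proto in prototypes.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None


def infonce_loss(
    query: Vector,
    prototypes: Dict[str, List[float]],
    positive_label: str,
    temperature: float = 0.1,
) -> float:
    """InfoNCE-style loss: -log softmax score of the positive prototype.

    Minimizing it pulls the query toward its own prototype and away from the
    others, which maximizes a lower bound on their mutual information.
    """
    logits = {label: cosine(query, p) / temperature for label, p in prototypes.items()}
    m = max(logits.values())  # subtract the max for a numerically stable log-sum-exp
    log_z = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return log_z - logits[positive_label]
```

For example, with two hypothetical intents, a query near a prototype is accepted while a query far from both is rejected:

```python
support = {
    "book_flight": [[1.0, 0.1], [0.9, 0.0]],
    "play_music": [[0.0, 1.0], [0.1, 0.9]],
}
protos = build_prototypes(support)
classify_or_reject([0.95, 0.05], protos, threshold=0.8)  # "book_flight"
classify_or_reject([-1.0, -1.0], protos, threshold=0.8)  # None, i.e. flagged as OOD
```

The rejection threshold is what turns a closed-set classifier into an OOD detector; an overconfident model tends to produce high similarity even for OOD queries, which is the failure mode the abstract says ProtoInfoMax reduces.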
