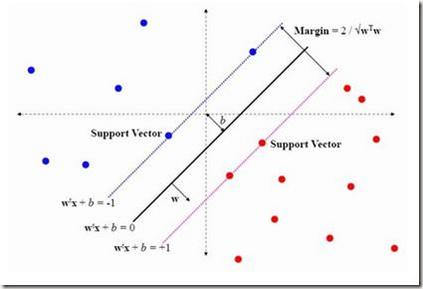

Speech recognition (SR) systems such as Siri and Google Now have become an increasingly popular method of human-computer interaction and have turned various systems into voice controllable systems (VCS). Prior work on attacking VCS shows that hidden voice commands, which are incomprehensible to people, can control these systems. Such commands, though hidden, are nonetheless audible. In this work, we design a completely inaudible attack, DolphinAttack, that modulates voice commands on ultrasonic carriers (e.g., f > 20 kHz) to achieve inaudibility. By leveraging the nonlinearity of the microphone circuits, the modulated low-frequency audio commands can be successfully demodulated, recovered, and, more importantly, interpreted by the speech recognition systems. We validate DolphinAttack on popular speech recognition systems, including Siri, Google Now, Samsung S Voice, Huawei HiVoice, Cortana, and Alexa. By injecting a sequence of inaudible voice commands, we demonstrate several proof-of-concept attacks, including activating Siri to initiate a FaceTime call on an iPhone, activating Google Now to switch the phone to airplane mode, and even manipulating the navigation system in an Audi automobile. We propose hardware and software defense solutions. We validate that it is feasible to detect DolphinAttack by classifying the audio using a support vector machine (SVM), and we suggest re-designing voice controllable systems to be resilient to inaudible voice command attacks.
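The demodulation effect described above can be illustrated with a toy numerical model. The following is a minimal sketch, not the implementation evaluated in this work: it assumes a single 1 kHz tone as a stand-in for a voice command, a 30 kHz carrier, plain amplitude modulation, and a microphone modeled as a linear term plus a small quadratic term. All constants and function names are hypothetical and chosen only for illustration.

```python
import cmath
import math

FS = 192_000         # sample rate in Hz (assumed; high enough to represent the carrier)
F_CARRIER = 30_000   # ultrasonic carrier in Hz (assumed, above 20 kHz)
F_TONE = 1_000       # single tone standing in for a voice command (assumed)
DEPTH = 0.5          # AM modulation depth (assumed)
A1, A2 = 1.0, 0.1    # linear and quadratic gains of the toy microphone model (assumed)
N = 1_920            # 10 ms of samples; every tone involved completes whole cycles


def am_modulate(n):
    """Sample n of the tone amplitude-modulated onto the ultrasonic carrier."""
    t = n / FS
    baseband = math.cos(2 * math.pi * F_TONE * t)
    return (1 + DEPTH * baseband) * math.cos(2 * math.pi * F_CARRIER * t)


def nonlinear_mic(x):
    """Toy microphone: linear response plus a quadratic distortion term."""
    return A1 * x + A2 * x * x


def tone_amplitude(samples, freq):
    """Amplitude of the sinusoid at `freq`, estimated with a single-bin DFT."""
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq * n / FS)
        for n, x in enumerate(samples)
    )
    return 2 * abs(acc) / len(samples)


transmitted = [am_modulate(n) for n in range(N)]
captured = [nonlinear_mic(x) for x in transmitted]

# The transmitted signal has no energy at the audible tone frequency...
print("1 kHz amplitude in the air:      ", tone_amplitude(transmitted, F_TONE))
# ...but the quadratic term recreates it, with amplitude A2 * DEPTH.
print("1 kHz amplitude after microphone:", tone_amplitude(captured, F_TONE))
```

In this model the transmitted signal contains energy only around the carrier, so nothing is present in the audible band. Squaring the signal produces a baseband copy of the modulating tone, which a subsequent low-pass stage would pass on to the recognizer while discarding the ultrasonic components.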
