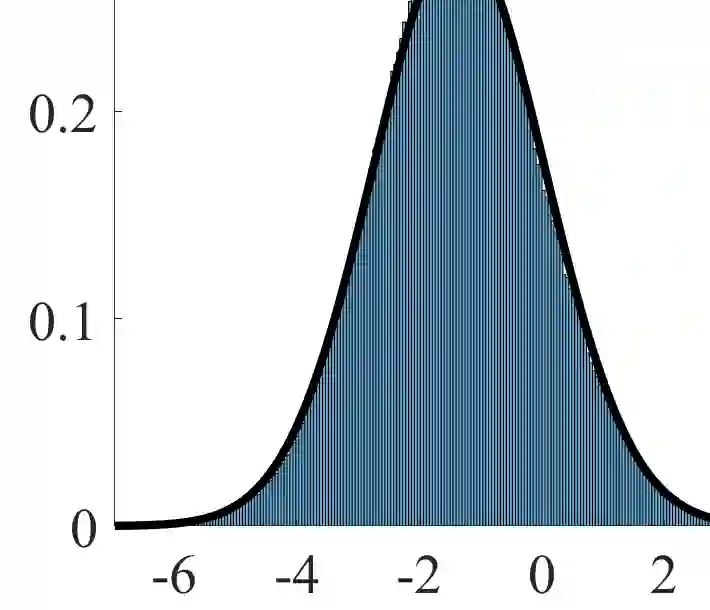

Calibration is defined as the ratio of the average predicted click rate to the true click rate. Optimizing calibration is essential to many online advertising recommendation systems because it directly affects the downstream bids in ad auctions and the amount of money charged to advertisers. Despite its importance, calibration optimization often suffers from a problem called "maximization bias": the maximum of the predicted values overestimates the true maximum. The problem arises because calibration is computed on the set selected by the prediction model itself. It persists even if unbiased predictions can be achieved on every datapoint, and it worsens when covariate shifts exist between the training and test sets. To mitigate this problem, we provide a theoretical quantification of maximization bias and propose a variance-adjusting debiasing (VAD) meta-algorithm. The algorithm is efficient, robust, and practical: it mitigates maximization bias under covariate shifts without incurring additional online serving costs or compromising ranking performance. We demonstrate the effectiveness of the proposed algorithm using a state-of-the-art recommendation neural network model on a large-scale real-world dataset.
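The maximization bias described above can be illustrated with a small simulation. This is a minimal sketch under assumed settings, not the paper's experiment and not the VAD algorithm: the function names, the uniform range of true click rates, the Gaussian noise level, and the top-10% selection rule are all hypothetical choices made for illustration. Each prediction is unbiased (true rate plus zero-mean noise), yet calibration measured on the items the model itself ranks highest comes out above 1.

```python
import random


def calibration(preds, truths):
    """Ratio of average predicted click rate to average true click rate."""
    return sum(preds) / sum(truths)


def simulate(n=20000, top_frac=0.1, noise=0.02, seed=0):
    """Return (overall calibration, calibration on the model-selected set).

    All parameters are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    # Hypothetical true click rates, uniform in [0.05, 0.15].
    true_ctr = [rng.uniform(0.05, 0.15) for _ in range(n)]
    # Unbiased predictions: true rate plus zero-mean Gaussian noise.
    pred_ctr = [p + rng.gauss(0.0, noise) for p in true_ctr]

    overall = calibration(pred_ctr, true_ctr)

    # Select the items the model itself scores highest, as a ranking
    # system would, then measure calibration on that subset only.
    k = int(n * top_frac)
    top = sorted(range(n), key=lambda i: pred_ctr[i], reverse=True)[:k]
    selected = calibration(
        [pred_ctr[i] for i in top],
        [true_ctr[i] for i in top],
    )
    return overall, selected


if __name__ == "__main__":
    overall, selected = simulate()
    print(f"calibration on all items:      {overall:.3f}")
    print(f"calibration on selected items: {selected:.3f}")
```

Calibration over all items stays close to 1, while calibration on the selected subset exceeds 1, because selecting by predicted value favors items whose noise happened to be positive. Raising `noise` in this sketch widens the gap, which is consistent with the abstract's framing of the bias as something a variance-based adjustment could target.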
